Data Quality Management Market Size By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 438827 | Date : Dec, 2025 | Pages : 251 | Region : Global | Publisher : MRU

Data Quality Management Market Size

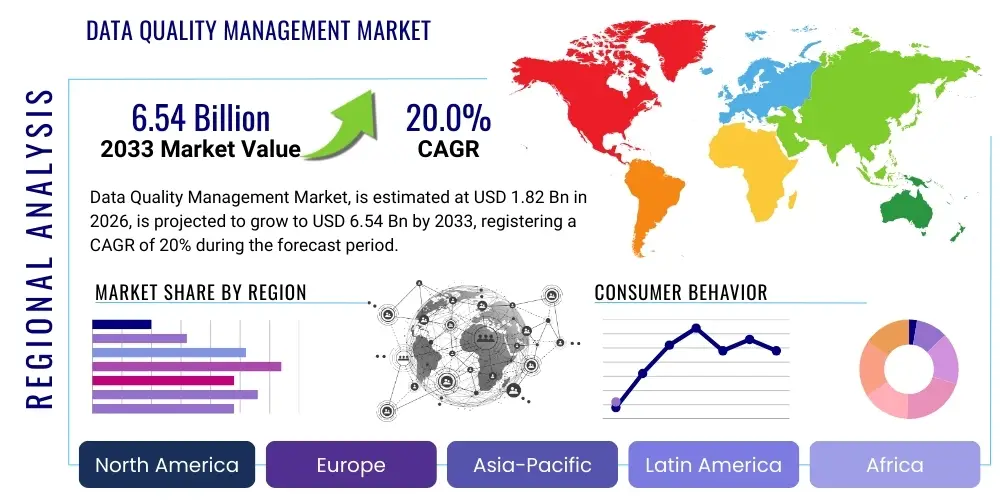

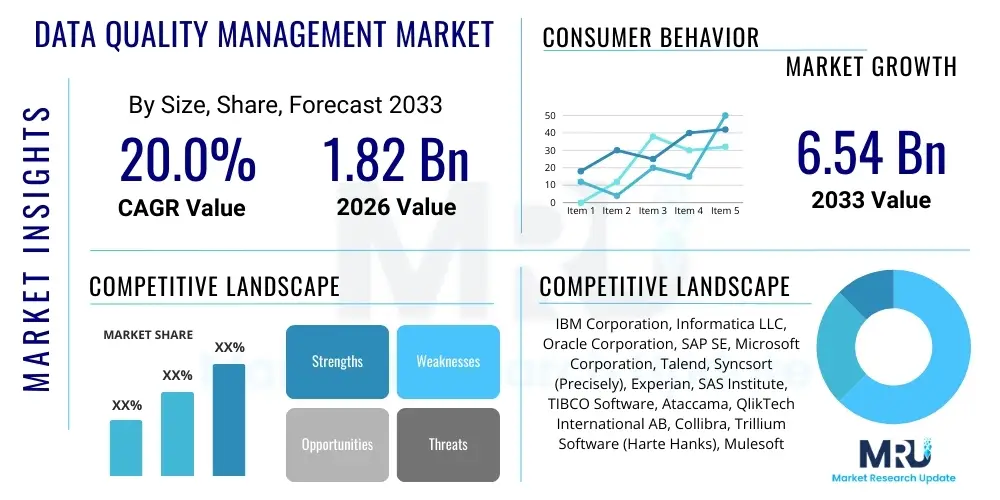

The Data Quality Management Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 20.0% between 2026 and 2033. The market is estimated at USD 1.82 Billion in 2026 and is projected to reach USD 6.54 Billion by the end of the forecast period in 2033.
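The headline figures can be cross-checked with the standard compound-growth formula. The sketch below is illustrative only: the function names are hypothetical, and it assumes that 2026 to 2033 is treated as seven compounding periods.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures from the report: USD 1.82 Billion (2026) and USD 6.54 Billion (2033).
# Assumption: the 2026-2033 span counts as 7 compounding periods.
implied_rate = cagr(1.82, 6.54, 7)
projected_2033 = project(1.82, 0.20, 7)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2033 value at exactly 20% growth: USD {projected_2033:.2f} Billion")
```

Under that assumption, the implied rate comes out at about 20.0%, and compounding at exactly 20% gives roughly USD 6.52 Billion, so the stated figures agree with each other to within rounding.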

Data Quality Management Market Introduction

The Data Quality Management (DQM) market encompasses the technologies, processes, and methodologies required to ensure the accuracy, completeness, consistency, relevance, and uniformity of enterprise data. In the current data-driven economy, poor data quality results in significant operational inefficiencies, flawed strategic decisions, and failure to comply with stringent regulatory mandates such as GDPR, CCPA, and HIPAA. DQM solutions address these challenges by providing tools for data profiling, cleansing, standardization, validation, monitoring, and enrichment, thereby forming the cornerstone of successful data governance frameworks across all organizational scales.

Major applications of DQM span across critical business functions, including customer relationship management (CRM), supply chain optimization, financial reporting, and risk management. The product offerings within this market range from standalone data quality tools to comprehensive data integration and governance suites, often deployed via cloud-based Software as a Service (SaaS) models, which offer scalability and reduced infrastructure costs. Vertical adoption is notably high in sectors like Banking, Financial Services, and Insurance (BFSI), Healthcare, and Telecommunications, where the sheer volume and sensitivity of data necessitate stringent quality controls.

The market is primarily driven by the exponential growth in data volume (Big Data), the increasing reliance on advanced analytics and Artificial Intelligence (AI) which demand high-fidelity data inputs, and the escalating need for regulatory adherence globally. Furthermore, the push towards digital transformation and the subsequent migration of legacy systems to the cloud inherently introduce data quality challenges, making DQM solutions indispensable for organizations aiming to maintain competitive advantage and achieve operational excellence.

Data Quality Management Market Executive Summary

The Data Quality Management (DQM) market is poised for significant expansion, driven predominantly by global digital transformation initiatives and the critical necessity for trustworthy data in AI and machine learning processes. Business trends indicate a strong pivot towards cloud-native DQM solutions, facilitating easier integration with modern data stacks (data lakes and data warehouses) and promoting real-time data quality monitoring rather than periodic checks. Organizations are increasingly seeking solutions that offer automated data remediation and predictive quality features, moving away from manual cleansing processes. The convergence of DQM with broader data governance, master data management (MDM), and data observability platforms is defining the next generation of market offerings, establishing DQM as an integrated, continuous function rather than an isolated IT project.

Regionally, North America remains the dominant market due to the early adoption of advanced technologies, the presence of major DQM vendors, and rigorous regulatory standards such as U.S. financial regulations. However, the Asia Pacific (APAC) region is projected to exhibit the highest Compound Annual Growth Rate (CAGR), fueled by rapid urbanization, massive data generation from emerging economies (China, India), and significant government investments in digital infrastructure and smart city projects. Europe maintains a strong market presence, largely propelled by mandatory compliance with GDPR, which places intense pressure on organizations to ensure high data accuracy and integrity when handling consumer data.

Segment trends highlight the dominance of the Solution segment over Services, although professional services related to implementation, consultation, and managed quality assurance are also growing rapidly due to the complexity of integrating DQM tools into existing enterprise architecture. Furthermore, the deployment segment is shifting heavily towards the cloud, leveraging its agility and cost-effectiveness. The end-user analysis confirms that the BFSI and Healthcare sectors are the primary consumers, prioritizing data quality to minimize financial risk, prevent fraud, and ensure accurate patient care and claims processing, respectively, making these verticals high-priority targets for DQM solution providers.

AI Impact Analysis on Data Quality Management Market

Users frequently inquire about how Artificial Intelligence (AI) can revolutionize the typically labor-intensive aspects of Data Quality Management, focusing on questions such as "Can AI automate data cleansing entirely?" or "How does machine learning improve data validation accuracy?" Key themes emerging from these concerns center on automation, prediction, and scalability. Users are eager to understand how AI and Machine Learning (ML) move DQM beyond rule-based systems by enabling proactive identification of anomalies, automated data profiling in complex, unstructured datasets, and prediction of future quality degradation based on usage patterns. The consensus expectation is that AI will transform DQM from a reactive function, fixing issues after they occur, into a proactive, embedded data observability framework that ensures high-quality data reliably and continuously.

The integration of AI into DQM tools introduces significant market dynamics. AI algorithms are crucial for tackling challenges associated with Big Data, particularly concerning variety and velocity. Traditional DQM tools struggle to identify subtle inconsistencies across massive, heterogeneous data lakes. ML models, however, excel at pattern recognition, allowing them to accurately resolve entity identification challenges (e.g., matching customer records across disparate systems) and to automatically suggest appropriate data standardization rules without human intervention, leading to substantial reductions in the total cost of quality.

Furthermore, the future competitive landscape of the DQM market will be defined by vendors who can successfully leverage AI for prescriptive data quality management. This includes using deep learning models to understand semantic meaning within unstructured text (like customer feedback or incident reports) and deriving quality metrics. By enabling sophisticated outlier detection and automated root cause analysis, AI not only improves the efficiency of quality processes but also elevates the reliability of downstream AI applications themselves, creating a self-reinforcing quality loop that is essential for mission-critical operations.

- AI enhances data profiling by automatically detecting data types, patterns, and anomalies in massive datasets.

- Machine Learning (ML) facilitates advanced matching and linkage algorithms, improving the accuracy of entity resolution across diverse sources.

- Predictive DQM uses ML to forecast potential data quality decay and proactively suggest maintenance or governance interventions.

- Natural Language Processing (NLP) enables the quality assessment and cleansing of unstructured text data, expanding DQM scope beyond structured records.

- Intelligent rule generation allows DQM systems to learn and adapt validation rules automatically based on observed data integrity, minimizing manual rule maintenance.

- Automation of data remediation workflows significantly reduces the time and labor required for data cleansing and standardization processes.

- AI supports self-service data quality functionalities, allowing business users to monitor and resolve localized quality issues without relying solely on IT teams.

DRO & Impact Forces Of Data Quality Management Market

The Data Quality Management (DQM) market is heavily influenced by dynamic forces characterized by strong drivers rooted in digital necessity and regulatory mandates, moderated by restraints concerning complexity and cost, yet offering substantial growth opportunities through technological integration. The primary driver is the pervasive demand for reliable, accurate data as the foundational input for advanced technologies like AI, IoT, and Big Data analytics, ensuring that strategic decisions are based on trusted intelligence. This critical need is counterbalanced by significant restraints, particularly the high initial investment required for sophisticated DQM platforms and the inherent challenges associated with integrating these tools into diverse, legacy IT infrastructures, which often involve complex data silos and resistance to change.

The key driving forces include stringent global data privacy regulations (GDPR, CCPA), which mandate accurate personal data handling; the increasing operational costs associated with poor data quality (estimated to cost businesses billions annually in waste and missed opportunities); and the widespread implementation of cloud-based data warehouses and data lakes that necessitate robust, scalable quality controls. Opportunities are primarily centered around the integration of AI/ML for automated, continuous data quality monitoring, the expansion into specialized data quality areas such as location intelligence and social media data validation, and catering to the growing demand for DQM solutions tailored for hybrid and multi-cloud environments, providing a seamless quality experience across distributed systems.

Restraints also include the shortage of skilled data governance professionals capable of effectively deploying and managing complex DQM frameworks, leading many businesses to rely on expensive external services. Furthermore, achieving consensus on 'data quality standards' across different departmental stakeholders within a large organization often presents a political and organizational hurdle, complicating the successful implementation of enterprise-wide DQM initiatives. These interacting forces collectively dictate the market trajectory, rewarding vendors who offer integrated, intuitive, and highly automated solutions that minimize complexity and maximize return on investment (ROI) by mitigating operational risk.

Segmentation Analysis

The Data Quality Management (DQM) market segmentation provides a granular view of solution uptake across various dimensions, including component type, deployment model, organization size, application, and vertical. This stratification is essential for understanding purchasing patterns and identifying high-growth sub-segments. The market is primarily bifurcated into Solutions (Software tools) and Services (Consulting, Integration, Maintenance), with solutions accounting for the larger revenue share due to recurring software license fees. However, the Services segment exhibits a robust growth rate, reflecting the increasing demand for specialized expertise required to navigate complex data environments and customize quality frameworks.

- By Component: Solutions (Software), Services (Professional Services, Managed Services)

- By Deployment Model: On-Premise, Cloud

- By Organization Size: Large Enterprises, Small and Medium-sized Enterprises (SMEs)

- By Application: Data Cleansing, Data Validation, Data Profiling, Data Monitoring, Data Standardization, Data Enrichment, Data Matching

- By Vertical: BFSI (Banking, Financial Services, and Insurance), Telecommunications and IT, Retail and E-commerce, Healthcare and Life Sciences, Manufacturing, Government and Public Sector, Others (Media, Education, Energy)

Value Chain Analysis For Data Quality Management Market

The Data Quality Management value chain initiates with upstream activities centered on data acquisition and raw data processing, where data sourcing from transactional systems, IoT devices, or third-party providers occurs. This stage heavily relies on data integration tools and ingestion pipelines to prepare the input for quality assessment. The complexity at this initial stage is increasing due to the proliferation of diverse data formats (structured, unstructured, semi-structured) and the need for real-time data streaming, emphasizing the role of robust extract, transform, load (ETL) or extract, load, transform (ELT) processes integrated with quality checks.

The midstream phase constitutes the core DQM operations, including data profiling (discovering data characteristics and anomalies), standardization (applying consistent formats), cleansing (fixing errors and inconsistencies), and data matching/linking (creating unified customer or product views). Major DQM vendors primarily focus their intellectual property and R&D efforts on optimizing proprietary algorithms for these core functions, especially leveraging machine learning for automated remediation. This phase often involves close collaboration between DQM software providers and data governance consulting firms that implement the tailored quality rules and standards.
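The core operations named above (profiling, standardization, cleansing) can be sketched in a few lines of standard-library Python. This is a minimal illustration, not any vendor's implementation: the sample records, field names, alias table, and the simple one-`@` email check are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "name": "  Alice Smith ", "country": "us", "email": "alice@example.com"},
    {"id": 2, "name": "BOB JONES", "country": "U.S.", "email": None},
    {"id": 3, "name": "carol white", "country": "USA", "email": "carol[at]example.com"},
]

# Assumed alias table mapping free-text country values to one code.
COUNTRY_ALIASES = {"us": "US", "u.s.": "US", "usa": "US"}


def profile(rows):
    """Profiling: count missing (None or empty) values per field."""
    missing = Counter()
    for row in rows:
        for field, value in row.items():
            if value is None or value == "":
                missing[field] += 1
    return dict(missing)


def standardize(row):
    """Standardization: consistent whitespace, casing, and country codes."""
    clean = dict(row)
    clean["name"] = " ".join(row["name"].split()).title()
    key = row["country"].strip().lower()
    clean["country"] = COUNTRY_ALIASES.get(key, row["country"])
    return clean


def cleanse(row):
    """Cleansing: blank out emails that fail a deliberately simple check."""
    clean = dict(row)
    email = row["email"]
    if email is not None and email.count("@") != 1:
        clean["email"] = None
    return clean


missing_before = profile(records)
cleaned = [cleanse(standardize(r)) for r in records]
missing_after = profile(cleaned)

print(missing_before, missing_after)
for row in cleaned:
    print(row)
```

Profiling before and after cleansing shows the malformed email surfacing as a missing value, which is the kind of signal a monitoring step would then track.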

Downstream activities involve the distribution channel and the utilization of quality-assured data. The distribution channel primarily utilizes both direct sales (for major enterprise contracts) and indirect partnerships (through system integrators, value-added resellers, and cloud service providers like AWS, Azure, and Google Cloud). Finally, the consumption of high-quality data occurs in end-user applications such as business intelligence (BI), CRM, MDM, operational systems, and regulatory reporting. The success of the DQM platform is ultimately measured by the improved accuracy and reliability of these downstream analytical and operational outputs, solidifying the continuous feedback loop back to the upstream ingestion process for continuous quality improvement.

Data Quality Management Market Potential Customers

The primary end-users and potential buyers of Data Quality Management solutions are organizations across nearly every industry vertical that face challenges related to high data volume, regulatory obligations, and the critical dependence of their core business functions on accurate information. These customers typically range from large, multinational corporations managing petabytes of customer and operational data to mid-sized firms undergoing digital transformation and seeking to establish foundational data governance practices. The common thread among these potential customers is the realization that flawed data directly translates into tangible financial losses, compliance risks, and erosion of customer trust.

Specific high-priority customer segments include Financial Institutions (banks, insurance companies, investment firms), which utilize DQM extensively for risk modeling, fraud detection, anti-money laundering (AML) compliance, and accurate customer lifetime value (CLV) calculation. Healthcare and Life Sciences organizations are also crucial customers, deploying DQM to ensure accurate patient records, optimize clinical trials, comply with strict regulations like HIPAA, and ensure reliable drug efficacy data. These sectors prioritize data integrity to avoid potentially life-threatening errors and ensure regulatory safety.

Furthermore, E-commerce and Retail companies are increasingly adopting DQM solutions, primarily for customer data consolidation (creating a 360-degree customer view), inventory management accuracy, and personalized marketing efforts. Government agencies represent another vital segment, needing DQM for efficient public service delivery, national security, and managing vast demographic and administrative datasets accurately. Essentially, any organization reliant on data for complex operational decision-making, regulatory reporting, or high-stakes customer interactions represents a prime potential customer for DQM solutions.

| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | USD 1.82 Billion |
| Market Forecast in 2033 | USD 6.54 Billion |
| Growth Rate | 20.0% CAGR |
| Historical Year | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Year | 2026 - 2033 |
| DRO & Impact Forces | |
| Segments Covered | |
| Key Companies Covered | IBM Corporation, Informatica LLC, Oracle Corporation, SAP SE, Microsoft Corporation, Talend, Syncsort (Precisely), Experian, SAS Institute, TIBCO Software, Ataccama, QlikTech International AB, Collibra, Trillium Software (Harte Hanks), Mulesoft (Salesforce), Pitney Bowes, Reltio, Alteryx, Tamr, Zetaly |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |

Data Quality Management Market Key Technology Landscape

The technological landscape of the Data Quality Management market is rapidly evolving, moving beyond simple batch processing tools to incorporate sophisticated, real-time, and predictive capabilities. Core technological pillars include advanced parsing and standardization engines, which utilize comprehensive linguistic and geographical dictionaries to standardize disparate data formats efficiently, especially critical for address and name verification. Crucially, the move towards distributed computing frameworks, such as Apache Hadoop and Spark, is enabling DQM platforms to handle the volume and velocity of Big Data, performing complex quality checks and transformations in parallel across vast data lake environments without performance degradation.

A significant technological shift involves the integration of Machine Learning (ML) and Artificial Intelligence (AI) to enhance data quality processes. ML algorithms are deployed for automated data profiling, allowing systems to learn expected data patterns and quickly flag anomalous deviations that traditional rule-based methods might miss. Furthermore, sophisticated probabilistic and deterministic matching algorithms, often utilizing graph database technology, are becoming standard for entity resolution and master data management (MDM) integration. These technologies help organizations achieve a single, trusted view of core entities (customers, products, suppliers) by accurately linking fragmented records across siloed systems, significantly boosting the accuracy of business intelligence.
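The difference between deterministic and score-based matching can be illustrated with a short sketch. Note the substitution: a string-similarity threshold from Python's `difflib` stands in here for a true probabilistic model, and no graph database is involved. The records, function names, and the 0.85 threshold are assumptions chosen for the example.

```python
from difflib import SequenceMatcher

# Hypothetical records for the same person held in two systems, plus an unrelated one.
crm = {"name": "Jonathan Smith", "email": "jon.smith@example.com"}
billing = {"name": "Jonathon  Smith", "email": "Jon.Smith@example.com"}
other = {"name": "Maria Garcia", "email": "m.garcia@example.com"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def deterministic_match(a: dict, b: dict) -> bool:
    """Deterministic rule: records match only if a normalized key is identical."""
    return normalize(a["email"]) == normalize(b["email"])


def name_similarity(a: dict, b: dict) -> float:
    """Similarity score in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()


def fuzzy_match(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Score-based rule: accept a pair when name similarity clears the threshold."""
    return name_similarity(a, b) >= threshold


for left, right in [(crm, billing), (crm, other)]:
    print(
        left["name"], "|", right["name"],
        "| exact email:", deterministic_match(left, right),
        "| fuzzy name:", fuzzy_match(left, right),
    )
```

A deterministic key catches the pair only because the emails happen to agree after normalization; the score-based rule also tolerates the spelling variation in the name, at the cost of needing a tuned threshold.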

The rise of Data Observability platforms is also redefining the DQM technology stack. These platforms utilize continuous monitoring and automated alerting, leveraging technologies like stream processing (e.g., Kafka) to track data lineage and quality metrics in real time as data moves through pipelines. This proactive approach shifts the focus from fixing data errors after they have polluted downstream systems to preventing them at the source. Moreover, the prevalence of API-based DQM services allows seamless integration into modern microservices architecture and cloud environments, facilitating embedded data quality checks at the point of data entry or transaction, ensuring quality assurance is an inseparable component of application development.
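Continuous monitoring of this kind can be sketched as a rolling check over incoming events. In the example below a plain Python list stands in for a real stream (no Kafka client is used), and the class name, field name, window size, and threshold are all hypothetical choices made for illustration.

```python
from collections import deque


class NullRateMonitor:
    """Tracks the share of missing values for one field over a sliding window."""

    def __init__(self, field: str, window: int = 5, threshold: float = 0.5):
        self.field = field
        self.threshold = threshold
        self.window = deque(maxlen=window)  # oldest observations drop off automatically

    def observe(self, record: dict) -> bool:
        """Record one event; return True if the rolling null rate exceeds the threshold."""
        self.window.append(record.get(self.field) is None)
        if len(self.window) < self.window.maxlen:
            return False  # not enough history yet to judge
        null_rate = sum(self.window) / len(self.window)
        return null_rate > self.threshold


# Hypothetical event stream in which the "amount" field starts arriving empty.
stream = [
    {"amount": 10.0},
    {"amount": 12.5},
    {"amount": None},
    {"amount": 9.0},
    {"amount": None},
    {"amount": None},
    {"amount": None},
]

monitor = NullRateMonitor("amount")
alerts = [index for index, event in enumerate(stream) if monitor.observe(event)]
print("Alert raised at event indexes:", alerts)
```

The alert fires as soon as missing values dominate the window, before a batch job would normally notice, which is the shift from periodic checks to prevention at the source described above.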

Regional Highlights

- North America: North America holds the largest market share, driven by high technological maturity, the early and widespread adoption of cloud computing, and the presence of highly regulated industries (BFSI and Healthcare) that mandate strict data governance. The region benefits from substantial investment in R&D by major DQM vendors (e.g., Informatica, IBM) and a strong emphasis on leveraging data for competitive advantage. Compliance requirements such as CCPA and sector-specific financial regulations further fuel the demand for sophisticated, enterprise-grade DQM solutions capable of handling massive, complex datasets.

- Europe: Europe represents the second-largest market, primarily propelled by the strict enforcement of the General Data Protection Regulation (GDPR). GDPR mandates accountability and accuracy concerning personal data, forcing organizations across all industries to invest heavily in DQM tools for data discovery, lineage tracking, and compliance monitoring. Western European countries, particularly the UK, Germany, and France, exhibit high adoption rates. The trend in Europe focuses heavily on data residency and ensuring quality standards align with national regulatory specifics.

- Asia Pacific (APAC): APAC is projected to be the fastest-growing region during the forecast period. This rapid growth is attributed to aggressive digital transformation initiatives, massive data generation from large populations and high mobile penetration, and government-backed smart city projects in countries like China, India, and Japan. While data quality maturity varies, the substantial growth in cloud adoption and the increasing need for reliable business data to support emerging AI applications are key accelerators. Infrastructure investment remains a critical factor for DQM penetration in this diverse region.

- Latin America (LATAM): The LATAM market is characterized by emerging adoption and high growth potential. Economic instability and lower IT budgets often restrict large-scale DQM deployment, but the increasing presence of multinational corporations and growing awareness regarding data sovereignty and consumer protection laws (similar to Brazil’s LGPD) are creating pockets of high demand, particularly in the BFSI sector in Brazil and Mexico.

- Middle East and Africa (MEA): MEA shows nascent growth, focused primarily within Gulf Cooperation Council (GCC) countries (UAE, Saudi Arabia) driven by government efforts to diversify economies away from oil and invest in smart initiatives. DQM adoption is concentrated in the telecommunications and financial services sectors. Challenges include diverse regulatory environments and a relatively smaller pool of specialized IT talent, making managed DQM services highly attractive.

Top Key Players

The market research report includes a detailed profile of leading stakeholders in the Data Quality Management Market.

- IBM Corporation

- Informatica LLC

- Oracle Corporation

- SAP SE

- Microsoft Corporation

- Talend

- Syncsort (Precisely)

- Experian

- SAS Institute

- TIBCO Software

- Ataccama

- QlikTech International AB

- Collibra

- Trillium Software (Harte Hanks)

- Mulesoft (Salesforce)

- Pitney Bowes

- Reltio

- Alteryx

- Tamr

- Zetaly

Frequently Asked Questions

What is the primary driver accelerating the adoption of Data Quality Management solutions?

The primary driver is the accelerating demand for trustworthy data to support AI, Machine Learning, and sophisticated business analytics, combined with stringent global regulatory requirements (e.g., GDPR, CCPA) that mandate accurate and compliant handling of personal data. Poor data quality directly impedes digital transformation and exposes organizations to significant compliance risk.

How is the adoption of cloud computing affecting the Data Quality Management market?

Cloud computing is profoundly impacting the DQM market by shifting deployment from on-premise to cloud-based Software as a Service (SaaS) models. This facilitates enhanced scalability, easier integration with cloud data warehouses and lakes, and supports real-time, continuous data quality monitoring across distributed, hybrid environments, making DQM more accessible and agile.

Which industry vertical is the largest consumer of Data Quality Management tools?

The Banking, Financial Services, and Insurance (BFSI) vertical is the largest consumer. Financial institutions require DQM to meet rigorous compliance mandates (e.g., AML, Basel III), conduct accurate risk assessments, prevent fraudulent transactions, and consolidate massive customer data sets for accurate reporting and personalization.

What role does Artificial Intelligence play in modern Data Quality Management?

AI transforms DQM by enabling automation and prediction. Machine Learning algorithms automate complex tasks like data profiling, anomaly detection, and entity resolution, moving DQM systems beyond rigid rule-based checks. AI also facilitates predictive data quality, forecasting potential degradation and proactively suggesting preventative governance actions.

What are the most common challenges faced during the implementation of DQM solutions?

Key implementation challenges include high initial investment costs for enterprise-grade solutions, the difficulty of integrating DQM tools into complex, siloed legacy IT infrastructures, and organizational resistance, often stemming from the lack of skilled data governance professionals and difficulties in achieving consensus on uniform data quality standards across departments.


Research Methodology

Market Research Update integrates technology-driven solutions throughout its research process. We use diverse assets to produce the best results for our clients. The success of a research project depends heavily on the research process the company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update maintains consistency across its research reports and provides forecast analysis across a range of geographies and coverage areas. The research teams carry out primary and secondary research to design and implement the data collection procedure. The research team then analyzes data on the latest trends and major issues for each industry and country, which helps anticipate future market developments.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data via different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured from the earlier step. It also includes the analysis of data differences observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client's requirements.

- Market Formulation

This step entails placing data points in suitable market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-specialist review of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. Validation via an intricately designed procedure helps us determine the data points to be used for final calculations.

