Distributed Data Grid Market Size By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 432890 | Date : Dec, 2025 | Pages : 258 | Region : Global | Publisher : MRU

Distributed Data Grid Market Size

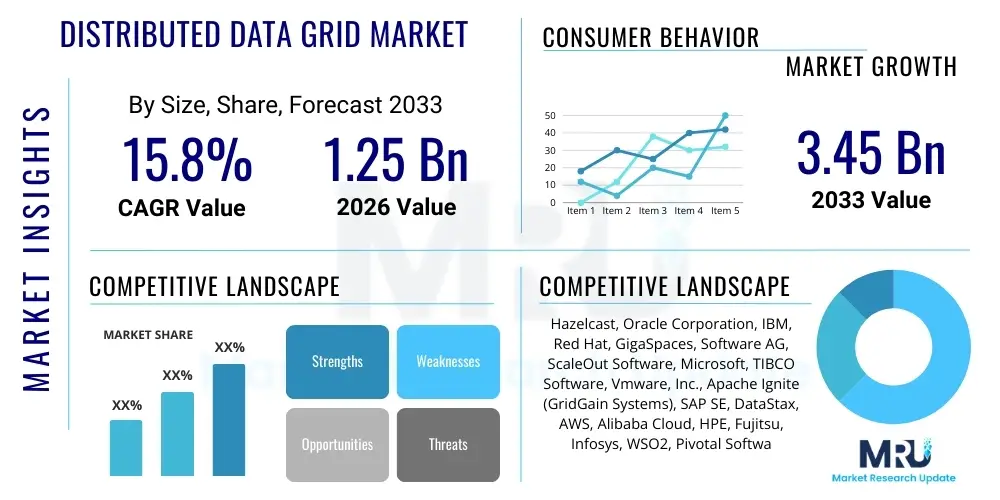

The Distributed Data Grid Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 15.8% between 2026 and 2033. The market is estimated at USD 1.25 Billion in 2026 and is projected to reach USD 3.45 Billion by the end of the forecast period in 2033.
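The headline figures follow the standard compound-growth relation: value in 2033 = value in 2026 * (1 + CAGR)^n. The sketch below is a quick arithmetic check. It assumes n = 7 annual compounding periods between 2026 and 2033, because the report does not state its convention. It projects the 2026 estimate forward at the stated rate and also solves for the rate implied by the two published values.

```python
# Consistency check on the published figures, assuming 7 annual
# compounding periods from 2026 to 2033 (an assumption, not stated in the report).
START_VALUE = 1.25     # USD billion, 2026 (from the report)
END_VALUE = 3.45       # USD billion, 2033 (from the report)
STATED_CAGR = 0.158    # 15.8% (from the report)
PERIODS = 2033 - 2026  # 7 compounding periods


def project(start: float, rate: float, periods: int) -> float:
    """Compound `start` at `rate` per period for `periods` periods."""
    return start * (1 + rate) ** periods


def implied_cagr(start: float, end: float, periods: int) -> float:
    """Solve end = start * (1 + r) ** periods for r."""
    return (end / start) ** (1 / periods) - 1


projected = project(START_VALUE, STATED_CAGR, PERIODS)
implied = implied_cagr(START_VALUE, END_VALUE, PERIODS)
print(f"2033 value at 15.8% CAGR: USD {projected:.2f} B")         # USD 3.49 B
print(f"CAGR implied by USD 1.25 B -> 3.45 B: {implied:.1%}")     # 15.6%
```

Under that assumption, compounding USD 1.25 Billion at 15.8% gives roughly USD 3.49 Billion, while the two endpoints imply a CAGR of about 15.6%. The small gap may reflect rounding in the published figures or a different period convention.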

Distributed Data Grid Market Introduction

The Distributed Data Grid (DDG) Market encompasses software solutions and frameworks designed to manage and process massive volumes of data across multiple servers or nodes in a clustered environment. DDGs act primarily as an ultra-fast in-memory data layer that sits between the application and persistent data stores, improving transactional performance and significantly reducing latency. This architecture enables enterprise applications to meet the high concurrency and throughput requirements of real-time operations. Sharding spreads data and load across nodes, and replication, combined with configurable consistency guarantees, lets the grid tolerate node failures. At their core, these products are in-memory computing platforms that offer distributed caching, compute services, and real-time streaming data processing, all crucial capabilities for modern, data-intensive workloads.
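The cache-layer role described above is commonly implemented as a cache-aside (lazy-loading) pattern. The sketch below is a single-process illustration and does not reproduce any vendor's API. `CacheAside`, `slow_lookup`, and the dictionary standing in for the persistent store are all hypothetical. In a real grid, the cached entries would be spread across cluster nodes rather than held in one local dictionary.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class CacheAside:
    """Serve hits from memory; on a miss, load from the backing store
    and populate the cache so later reads skip the slow path."""

    def __init__(self, load_from_store: Callable[[Hashable], object], ttl_seconds: float = 60.0):
        self._load = load_from_store  # e.g. a database query (hypothetical)
        self._ttl = ttl_seconds
        self._entries: Dict[Hashable, Tuple[object, float]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> object:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]
        self.misses += 1
        value = self._load(key)  # slow path: go to the persistent store
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop a key after the underlying record changes."""
        self._entries.pop(key, None)


# Usage: a dict stands in for the slow persistent store.
database = {"acct:42": {"balance": 100}}
calls = []


def slow_lookup(key):
    calls.append(key)
    return database[key]


cache = CacheAside(slow_lookup, ttl_seconds=30)
cache.get("acct:42")  # miss: loaded from the store
cache.get("acct:42")  # hit: served from memory
print(cache.hits, cache.misses, len(calls))  # 1 1 1
```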

Major applications of DDGs span various industries, including high-frequency trading in financial services, personalization engines in retail and e-commerce, real-time fraud detection, and telecommunications network optimization. These systems are invaluable where instant access to volatile, frequently accessed data is critical for business continuity and competitive advantage. Because DDGs scale horizontally, rapidly growing data sets and user loads can be absorbed by adding nodes without compromising performance, which makes DDGs foundational to microservices architectures and cloud-native deployments. Furthermore, the inherent resilience of a DDG, achieved through automatic data synchronization and failover, supports the high availability that mission-critical systems demand.

The driving factors for market expansion are multifaceted, primarily fueled by the exponential proliferation of Big Data, the increasing adoption of cloud computing models, and the pervasive need for low-latency data processing in customer-facing applications. Benefits realized by adopting DDGs include enhanced application scalability, reduced dependence on slow disk I/O operations, improved operational efficiency, and the facilitation of complex real-time analytics. As businesses continue their digital transformation journeys, demanding instant response times for critical operations like inventory management, price optimization, and customer relationship management, the strategic importance of robust distributed data infrastructures becomes undeniable, propelling sustained market demand.

Distributed Data Grid Market Executive Summary

The Distributed Data Grid (DDG) market is witnessing robust growth, driven primarily by the transition of enterprise workloads to hybrid and multi-cloud environments and the imperative for sub-millisecond latency in critical business processes. Key business trends indicate a shift towards integrating DDG capabilities with broader in-memory computing platforms and specialized offerings tailored for real-time analytics and event streaming. Companies are increasingly prioritizing open-source DDG solutions, such as Apache Ignite and Hazelcast, which offer flexibility and cost-efficiency, compelling established proprietary vendors to innovate their licensing and service models. Furthermore, strategic partnerships between DDG providers and major cloud service providers (CSPs) are accelerating deployment and managed service adoption, simplifying operational complexity for end-users seeking highly available and scalable data management layers.

From a regional perspective, North America maintains the largest market share due to the early and high concentration of technologically advanced enterprises, particularly in the financial services and technology sectors, which require ultra-low latency for competitive trading and complex data processing. The Asia Pacific (APAC) region is projected to exhibit the highest Compound Annual Growth Rate (CAGR), fueled by aggressive digital transformation initiatives in emerging economies like China and India, coupled with rapid urbanization and expansion of e-commerce platforms. Europe follows as a mature market, emphasizing regulatory compliance (like GDPR) alongside performance gains, driving demand for DDG solutions that securely manage high volumes of personal and transactional data within strict regulatory frameworks. Adoption in Latin America, Middle East, and Africa (MEA) is progressively accelerating, particularly within the telecommunications and government sectors seeking infrastructure modernization.

Analyzing segment trends, the Services component segment is expected to grow faster than the Software segment, reflecting the increasing need for professional services related to complex DDG deployment, integration with legacy systems, performance tuning, and continuous managed support. In terms of application, the Financial Services segment remains the dominant consumer due to its critical dependence on real-time fraud detection, risk analysis, and high-speed transaction processing. The cloud deployment model is rapidly gaining traction over on-premise solutions, as organizations leverage the scalability and elasticity offered by public and private clouds. This trend is reinforcing the need for DDGs that seamlessly integrate with containerization technologies like Kubernetes, facilitating agile development and deployment of microservices supported by high-speed data access.

AI Impact Analysis on Distributed Data Grid Market

Common user questions about AI's impact on the Distributed Data Grid market center on two issues: how DDGs can efficiently handle the massive, dynamic data streams generated by AI/ML training pipelines, and whether DDGs can serve as a high-speed feature store for real-time inference. Users want to know how to optimize data pipelines so that AI models are fed instantly. They also ask whether specialized vector databases need to be integrated into the DDG ecosystem, and how AI can enhance the operational efficiency, self-healing, and auto-scaling capabilities of the grid itself. Key themes include performance bottlenecks during simultaneous training and serving, the data consistency required for model accuracy, and the architectural shifts needed to support both operational and analytical real-time workloads within a unified data fabric. The central expectation is that DDGs will evolve into fundamental components of MLOps infrastructure, providing sub-millisecond access to features for critical decision-making.

- AI applications increasingly rely on DDGs to access real-time features and operational data for instant model inference and decision-making, minimizing prediction latency.

- DDGs serve as high-throughput, low-latency feature stores, caching pre-processed data required for AI/ML training and serving pipelines.

- The rise of specialized AI workloads drives demand for DDGs capable of handling multimodal data types, including vectors for efficient similarity searches.

- AI and Machine Learning are increasingly being used within DDG platforms for self-optimization, predictive maintenance, and intelligent load balancing across nodes.

- Integration of DDGs with event streaming platforms (like Kafka) accelerates the flow of real-time data needed for continuous AI model retraining and monitoring.
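The minimal in-memory sketch below makes the feature-store role concrete. It assumes a hypothetical fraud-scoring model with three made-up features and a fixed linear score. `OnlineFeatureStore`, `ingest`, and `get_vector` are illustrative names and do not belong to the API of any product listed in this report. The `ingest` path stands in for updates arriving from an event stream such as Kafka, and `get_vector` stands in for the low-latency lookup performed at inference time.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class OnlineFeatureStore:
    """Toy feature store: the streaming path writes the latest feature
    values per entity; the serving path reads them for inference."""
    features: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def ingest(self, entity_id: str, updates: Dict[str, float]) -> None:
        # Streaming path: upsert the latest values for this entity.
        self.features.setdefault(entity_id, {}).update(updates)

    def get_vector(self, entity_id: str, names: List[str], default: float = 0.0) -> List[float]:
        # Serving path: assemble a fixed-order feature vector for the model.
        row = self.features.get(entity_id, {})
        return [row.get(name, default) for name in names]


# Hypothetical fraud-scoring model: a fixed linear score over three features.
FEATURE_NAMES = ["txn_count_1h", "avg_amount_24h", "new_device"]
WEIGHTS = [0.2, 0.01, 1.5]


def score(vector: List[float]) -> float:
    return sum(w * x for w, x in zip(WEIGHTS, vector))


store = OnlineFeatureStore()
store.ingest("card:7", {"txn_count_1h": 3.0, "avg_amount_24h": 120.0})
store.ingest("card:7", {"new_device": 1.0})
vec = store.get_vector("card:7", FEATURE_NAMES)
print(vec, round(score(vec), 2))  # [3.0, 120.0, 1.0] 3.3
```

Unknown entities fall back to default values, so the serving path never blocks on a missing row. Whether that default is acceptable is a modeling decision.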

DRO & Impact Forces Of Distributed Data Grid Market

The Distributed Data Grid Market is shaped by significant dynamic forces: robust drivers, inherent restraints, and compelling opportunities that collectively dictate its trajectory. The primary driver is pervasive demand for real-time data processing and analytics across virtually all sectors, underpinned by the surge in data generated by IoT devices, mobile applications, and high-frequency business transactions. Many organizations can no longer tolerate the latency of traditional disk-based databases, which makes DDGs an essential layer for performance acceleration. Furthermore, microservices architectures and cloud-native application development have been widely adopted. In these architectures, stateless services need fast access to shared, session-based data, which ties their performance directly to the underlying DDG infrastructure and further expands demand in the market.
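The dependence of stateless microservices on a shared data layer can be sketched as follows. This is an illustrative, single-process model under stated assumptions: `SessionStore` plays the role of a grid map that every service instance can reach, the 30-minute sliding expiration is an arbitrary choice, and `add_to_cart` is a hypothetical handler. Because the handler keeps no local state, any instance can serve any request for the same session.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class SessionStore:
    """Shared session map with sliding expiration, standing in for a grid
    map that every stateless service instance can reach."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl
        self._clock = clock
        self._data: Dict[str, Tuple[dict, float]] = {}

    def put(self, session_id: str, state: dict) -> None:
        self._data[session_id] = (state, self._clock() + self._ttl)

    def get(self, session_id: str) -> Optional[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return None
        state, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[session_id]  # expired: evict lazily on access
            return None
        self.put(session_id, state)  # sliding window: each access renews the TTL
        return state


def add_to_cart(store: SessionStore, session_id: str, item: str) -> dict:
    """Stateless handler: all state lives in the shared store."""
    state = store.get(session_id) or {"cart": []}
    state["cart"].append(item)
    store.put(session_id, state)
    return state


# Usage with a fake clock so expiry is deterministic.
now = [0.0]
sessions = SessionStore(ttl=1800, clock=lambda: now[0])
add_to_cart(sessions, "s1", "book")  # handled by "instance A"
add_to_cart(sessions, "s1", "lamp")  # handled by "instance B"
print(sessions.get("s1"))  # {'cart': ['book', 'lamp']}
now[0] += 3600  # an hour of inactivity
print(sessions.get("s1"))  # None
```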

Conversely, several restraints impede broader market penetration. The complexity associated with implementing, configuring, and maintaining a high-availability, distributed environment remains a significant hurdle, requiring specialized technical expertise that may be scarce, particularly in mid-sized enterprises. Initial high deployment costs and the necessary migration effort to integrate DDGs with existing legacy data infrastructure also pose financial barriers. Moreover, concerns related to data consistency and ensuring atomicity across a widely distributed cache, especially in highly regulated environments, necessitate advanced management tools and rigorous testing, contributing to implementation reluctance among some organizations sensitive to data integrity risks.
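Distributed systems commonly address the atomicity concern above with a commit protocol. Below is a minimal two-phase commit sketch across hypothetical grid nodes. It deliberately omits coordinator failure, timeouts, durable logging, and locking, all of which production implementations must handle. `Participant` and `two_phase_commit` are illustrative names only.

```python
from typing import Dict, List, Optional


class Participant:
    """A grid node owning some partitions; it can stage, commit, or abort writes."""

    def __init__(self, name: str, fail_prepare: bool = False):
        self.name = name
        self.data: Dict[str, int] = {}
        self.staged: Optional[Dict[str, int]] = None
        self.fail_prepare = fail_prepare  # simulate a node that votes "no"

    def prepare(self, writes: Dict[str, int]) -> bool:
        if self.fail_prepare:
            return False
        self.staged = dict(writes)  # a real node would also lock keys and log durably
        return True

    def commit(self) -> None:
        if self.staged is not None:
            self.data.update(self.staged)
        self.staged = None

    def abort(self) -> None:
        self.staged = None


def two_phase_commit(plan: Dict[Participant, Dict[str, int]]) -> bool:
    """Phase 1: every participant prepares. Phase 2: commit only if all voted
    yes; otherwise abort the prepared ones so no partial update becomes visible."""
    prepared: List[Participant] = []
    for node, writes in plan.items():
        if not node.prepare(writes):
            for p in prepared:
                p.abort()
            return False
        prepared.append(node)
    for node in prepared:
        node.commit()
    return True


# Usage: move 30 units between accounts held on two different nodes.
a, b = Participant("node-a"), Participant("node-b")
a.data["acct:1"], b.data["acct:2"] = 100, 0
ok1 = two_phase_commit({a: {"acct:1": 70}, b: {"acct:2": 30}})
print(ok1, a.data, b.data)  # True {'acct:1': 70} {'acct:2': 30}

# A participant that votes "no" causes the whole transaction to abort.
c = Participant("node-c", fail_prepare=True)
ok2 = two_phase_commit({a: {"acct:1": 0}, c: {"acct:3": 70}})
print(ok2, a.data)  # False {'acct:1': 70}
```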

The market is poised for considerable opportunity, particularly through the proliferation of hybrid cloud and edge computing paradigms. DDGs are ideally suited to address the performance requirements of edge devices and geographically distributed applications by enabling localized data caching and processing, reducing reliance on centralized cloud resources. There is a burgeoning opportunity in integrating DDG capabilities with next-generation technologies like serverless computing and WebAssembly (Wasm), allowing for highly scalable, transient compute processes that require instant data access. The impact forces indicate a high influence from technology adoption and low-latency demands (high drivers), mitigated somewhat by operational complexity and vendor lock-in concerns (moderate restraints), ultimately leading to significant expansion as organizations prioritize digital resilience and real-time operations, leveraging opportunities in cloud and edge environments.
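Localized edge caching can be sketched as a small, bounded cache at each edge site that falls back to the central grid on a miss. The sketch assumes least-recently-used (LRU) eviction and uses a plain dictionary as a stand-in for the central grid. `EdgeCache` and `remote_calls` are hypothetical names; `remote_calls` counts the round trips to the central grid that the edge tier could not avoid.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class EdgeCache:
    """Bounded LRU cache at an edge site; misses go to the central grid."""

    def __init__(self, capacity: int, fetch_remote: Callable[[Hashable], object]):
        self._capacity = capacity
        self._fetch_remote = fetch_remote
        self._items: "OrderedDict[Hashable, object]" = OrderedDict()
        self.remote_calls = 0

    def get(self, key: Hashable) -> object:
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            return self._items[key]
        self.remote_calls += 1
        value = self._fetch_remote(key)  # round trip to the central region
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
        return value


central = {f"sensor:{i}": i * 10 for i in range(5)}
edge = EdgeCache(capacity=2, fetch_remote=central.__getitem__)
for key in ["sensor:1", "sensor:1", "sensor:2", "sensor:3", "sensor:1"]:
    edge.get(key)
print(edge.remote_calls)  # 4
```

Of the five reads, four require a round trip, because a capacity of two forces evictions. A larger capacity, or a workload with more repeated keys, would avoid more remote calls.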

Segmentation Analysis

The Distributed Data Grid (DDG) market is meticulously segmented based on components, deployment models, and application verticals, allowing for targeted solutions that address specific enterprise needs. Segmentation by component separates the market into Software (the core platform and licenses) and Services (consulting, implementation, and managed services), reflecting the necessary balance between technology acquisition and operational support. Deployment segmentation distinguishes between Cloud-based (including public, private, and hybrid cloud models) and On-premise installations, charting the industry migration toward flexible, consumption-based architectures. Application segmentation reveals the diverse industry utility of DDGs, with financial services being the most mature adopter, followed closely by sectors like retail, telecommunications, and healthcare, all of which benefit immensely from improved operational latency and scalability. Understanding these segments is crucial for vendors to tailor product features, pricing strategies, and go-to-market approaches to capture specific pockets of high growth.

- Component
  - Software (Platform and Licenses)
  - Services (Professional Services, Managed Services, Support)
- Deployment Model
  - On-Premise
  - Cloud (Public, Private, Hybrid)
- Application
  - Financial Services (BFSI)
  - Retail and E-commerce
  - Telecommunications
  - Healthcare and Life Sciences
  - Manufacturing
  - Government and Defense
  - IT and IT Enabled Services (ITES)

Value Chain Analysis For Distributed Data Grid Market

The value chain for the Distributed Data Grid market begins with upstream activities focused on core technology development, intellectual property creation, and software design. This phase involves extensive research and development (R&D) into optimization algorithms, clustering protocols, and data consistency mechanisms to ensure high performance and resilience. Key participants in the upstream segment are specialized software developers, open-source communities (such as the Apache Software Foundation), and internal R&D units of major technology companies, who establish the fundamental architecture of the grid. Their ability to innovate in areas like memory utilization, sharding techniques, and seamless integration with emerging data sources directly influences the final product’s capabilities and competitive standing in the market.

Midstream activities involve the aggregation, packaging, and distribution of the DDG solutions. This includes bundling the core software with advanced features such as security modules, monitoring tools, and management dashboards. Vendors focus on ensuring platform compatibility with diverse operating systems, virtualization technologies, and container orchestration tools like Kubernetes. The distribution channel primarily consists of direct sales teams for large enterprise contracts, supplemented by indirect channels involving Value-Added Resellers (VARs), system integrators (SIs), and strategic partnerships with cloud service providers (CSPs). These channels are essential for reaching disparate customer bases and providing localized support during implementation and integration phases.

Downstream activities center on deployment, professional services, and ongoing support, directly engaging the end-users. This stage involves complex integration with existing enterprise applications, data migration from traditional databases, and performance tuning tailored to specific use cases, such as transaction caching or session management. Direct channels are often utilized for premium support and consultancy, especially for mission-critical deployments in BFSI and Telecom. Indirect channels, primarily through certified SIs, play a crucial role in providing implementation expertise and training, ensuring that the deployed DDG achieves its maximum performance potential and meets the high-availability requirements stipulated by the enterprise.

Distributed Data Grid Market Potential Customers

The primary potential customers for Distributed Data Grid solutions are large enterprises and technologically advanced organizations characterized by high transaction volumes, extremely stringent latency requirements, and a mandate for continuous operational availability. End-users typically include Financial Services firms—especially investment banks and trading platforms—which utilize DDGs for high-frequency trading, real-time risk calculations, and instantaneous fraud detection. Another significant customer base lies within the Retail and E-commerce sector, where DDGs manage vast inventories, process rapid order updates, and provide personalized customer experiences by caching session data and user preferences across geographically dispersed servers, ensuring system responsiveness during peak traffic events.

Telecommunications companies represent a critical buyer segment, using DDGs for real-time customer session management, network traffic analysis, and billing systems, requiring performance levels impossible to achieve with disk-based systems alone. Healthcare providers and insurance companies are also becoming increasingly important customers, deploying DDGs to handle real-time medical imaging data, electronic health record (EHR) lookups, and fast claims processing, balancing the need for speed with strict data privacy and regulatory compliance (HIPAA). Essentially, any organization undergoing digital transformation and migrating towards a microservices architecture that requires immediate access to operational data is a prime candidate for DDG adoption, highlighting a broad, cross-industry appeal driven by performance necessity.

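| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | USD 1.25 Billion |
| Market Forecast in 2033 | USD 3.45 Billion |
| Growth Rate | 15.8% CAGR |
| Historical Period | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Period | 2026 to 2033 |
| DRO & Impact Forces | Drivers: real-time processing demand, microservices and cloud-native adoption; Restraints: implementation complexity, deployment costs, data consistency concerns; Opportunities: hybrid cloud, edge computing, serverless and Wasm integration |
| Segments Covered | By Component (Software, Services); By Deployment Model (On-Premise, Cloud); By Application (Financial Services, Retail and E-commerce, Telecommunications, Healthcare and Life Sciences, Manufacturing, Government and Defense, IT and ITES) |
| Key Companies Covered | Hazelcast, Oracle Corporation, IBM, Red Hat, GigaSpaces, Software AG, ScaleOut Software, Microsoft, TIBCO Software, VMware, Inc., Apache Ignite (GridGain Systems), SAP SE, DataStax, AWS, Alibaba Cloud, HPE, Fujitsu, Infosys, WSO2, Pivotal Software (VMware). |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East, and Africa (MEA) |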

Distributed Data Grid Market Key Technology Landscape

The technological core of the Distributed Data Grid market centers on In-Memory Computing (IMC) and sophisticated clustering algorithms. DDGs keep data in Random Access Memory (RAM), which offers access times orders of magnitude faster than traditional disk-based systems. Essential technologies include high-performance networking protocols for rapid communication between distributed nodes and data partitioning (sharding) techniques that spread data and workload evenly across the cluster. Combined with replication of each partition to backup nodes, these mechanisms provide scalability and fault tolerance, allowing the grid to survive node failures transparently while preserving data availability, which is essential for mission-critical applications that run 24/7. Where strict transactional integrity across nodes is required, grids typically rely on two-phase commit protocols. Where speed and availability matter more, they may use eventual consistency models instead.
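The toy model below illustrates how partitioning, replication, and failover fit together. It assumes a fixed partition count of 16, MD5-based key hashing, round-robin ownership, and one synchronous backup per partition. These choices are illustrative and do not describe any specific product. On a node failure, the sketch recovers each partition from a surviving replica and then re-replicates all data. This is a simplification: real grids migrate only the affected partitions.

```python
import hashlib
from typing import Dict, List

PARTITION_COUNT = 16  # illustrative value; real products choose their own counts


def partition_of(key: str) -> int:
    """Stable hash so every node maps a key to the same partition."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % PARTITION_COUNT


class Grid:
    """Toy partitioned map: each partition has a primary and one backup node."""

    def __init__(self, nodes: List[str]):
        self.nodes = list(nodes)
        self.stores: Dict[str, Dict[str, object]] = {n: {} for n in self.nodes}
        self.table: Dict[int, List[str]] = {}
        self._assign()

    def _assign(self) -> None:
        # Round-robin ownership: primary on node p % n, backup on the next node.
        n = len(self.nodes)
        for p in range(PARTITION_COUNT):
            owners = [self.nodes[p % n]]
            if n > 1:
                owners.append(self.nodes[(p + 1) % n])
            self.table[p] = owners

    def put(self, key: str, value: object) -> None:
        # Synchronous backup: write to every owner of the key's partition.
        for node in self.table[partition_of(key)]:
            self.stores[node][key] = value

    def get(self, key: str) -> object:
        primary = self.table[partition_of(key)][0]
        return self.stores[primary][key]

    def fail(self, node: str) -> None:
        """Drop a node, recover each partition from a surviving replica,
        then rebuild ownership and re-replicate everything."""
        self.nodes.remove(node)
        del self.stores[node]
        recovered: Dict[str, object] = {}
        for p, owners in self.table.items():
            survivors = [o for o in owners if o in self.stores]
            if not survivors:
                raise RuntimeError(f"partition {p} lost all replicas")
            source = self.stores[survivors[0]]
            recovered.update({k: v for k, v in source.items() if partition_of(k) == p})
        self.stores = {n: {} for n in self.nodes}
        self._assign()
        for key, value in recovered.items():
            self.put(key, value)


grid = Grid(["node-a", "node-b", "node-c"])
for i in range(100):
    grid.put(f"order:{i}", i)
grid.fail("node-b")
print(all(grid.get(f"order:{i}") == i for i in range(100)))  # True
```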

Modern DDGs are increasingly adopting integrated technologies to enhance their functionality beyond simple caching. This includes support for co-located compute, which lets applications execute processing logic directly on the node where the data resides (Compute-on-Data) and dramatically reduces the network traffic and latency of moving large datasets. DDGs are also tightly integrated with event streaming platforms (e.g., Apache Kafka) and stream processing engines (e.g., Apache Flink or Spark Streaming). This integration transforms the DDG from a static cache into a dynamic, real-time data ingestion and processing layer. That layer can support complex event processing and the continuous data transformations needed for real-time analytics, machine learning feature serving, and IoT data processing. The convergence of caching, computing, and streaming within a single, highly distributed architecture is defining the cutting edge of the DDG landscape.
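The network saving behind Compute-on-Data can be illustrated by counting the values that cross the network. In the sketch below, the three shards are hypothetical, and each `task(shard)` call stands in for logic that would execute on the node owning that shard. The transfer counts are a rough proxy for network traffic, not a performance measurement.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical cluster: each node holds a shard of order amounts.
Shard = Dict[str, float]


def pull_then_compute(shards: List[Shard]) -> Tuple[float, int]:
    """Client-side: ship every entry over the network, then aggregate."""
    moved = [amount for shard in shards for amount in shard.values()]
    return sum(moved), len(moved)  # (result, values transferred)


def compute_on_data(shards: List[Shard], task: Callable[[Shard], float],
                    combine: Callable[[List[float]], float]) -> Tuple[float, int]:
    """Co-located: run `task` where each shard lives; ship back one partial per node."""
    partials = [task(shard) for shard in shards]  # each call would run on its own node
    return combine(partials), len(partials)


shards = [
    {f"order:{i}": float(i) for i in range(0, 1000)},
    {f"order:{i}": float(i) for i in range(1000, 2000)},
    {f"order:{i}": float(i) for i in range(2000, 3000)},
]
total_a, moved_a = pull_then_compute(shards)
total_b, moved_b = compute_on_data(shards, lambda s: sum(s.values()), sum)
print(total_a == total_b, moved_a, moved_b)  # True 3000 3
```

Both approaches produce the same total, but the co-located version transfers one partial result per shard instead of every entry.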

The operational technology landscape is heavily influenced by cloud-native standards and containerization. DDG products must demonstrate native compatibility with Kubernetes for orchestration and scaling, enabling seamless deployment in hybrid and multi-cloud environments. Security technologies are also paramount, involving advanced encryption mechanisms for data both in-flight and at rest, alongside fine-grained access control lists (ACLs) to satisfy stringent compliance requirements like GDPR and CCPA. The adoption of open-source frameworks, notably Apache Ignite and Hazelcast, continues to drive innovation, fostering community contributions and rapid feature development, especially around connecting to disparate data sources like relational databases, NoSQL stores, and object storage systems, ensuring the DDG can serve as a unified, high-speed data access layer for diverse enterprise systems.

Regional Highlights

- North America: This region holds the largest share of the Distributed Data Grid market, driven by the early adoption of advanced technologies, the presence of major technological innovation hubs, and the high concentration of financial institutions and e-commerce giants demanding ultra-low latency solutions. The stringent performance requirements of high-frequency trading and sophisticated risk management models necessitate robust DDG implementations. Regulatory environments, while demanding strict compliance, also push for technological sophistication in data management. The widespread use of hybrid cloud strategies further fuels the DDG market here, as organizations require consistent, high-speed data access across both on-premise data centers and public cloud infrastructure.

- Europe: The European market is characterized by a high emphasis on data protection and governance, particularly under regulations like GDPR. Demand for DDGs is strong in the BFSI and Telecommunications sectors, where scalability and compliance must be achieved simultaneously. European organizations are focused on utilizing DDGs to enhance operational efficiency, manage large customer databases securely, and support cross-border real-time services. Germany, the UK, and France are the major contributors, with adoption increasingly driven by the need to modernize legacy systems and integrate high-performance data layers into complex industrial IoT and manufacturing processes.

- Asia Pacific (APAC): APAC is anticipated to exhibit the fastest growth rate (CAGR) globally. This rapid expansion is primarily attributed to massive digital transformation investments in countries such as China, India, Japan, and South Korea. The booming e-commerce market, rapid proliferation of mobile applications, and extensive government initiatives promoting smart cities are generating unprecedented volumes of real-time data, creating a critical need for DDGs. Furthermore, the increasing establishment of regional data centers and the growing deployment of cloud services by local and international vendors significantly accelerate the adoption of cloud-native DDG solutions.

- Latin America (LATAM): The DDG market in LATAM is maturing, with increasing investments observed in Brazil and Mexico. The primary drivers include the modernization of the banking infrastructure and the expansion of digital public services. Organizations are leveraging DDGs to improve online transaction speeds, enhance fraud detection mechanisms, and support scalable mobile financial services. While infrastructure constraints sometimes limit large-scale adoption, the growing availability of localized cloud regions is progressively lowering the barrier to entry for cloud-based DDG deployments.

- Middle East and Africa (MEA): Growth in the MEA region is spurred mainly by substantial governmental spending on smart initiatives and infrastructure development, particularly in the UAE, Saudi Arabia, and South Africa. The telecommunications and oil and gas sectors are key adopters, using DDGs for real-time sensor data processing, asset monitoring, and network performance optimization. The focus is on leveraging DDG technology to leapfrog older infrastructure stages and to accelerate the integration of advanced technologies like IoT and high-performance computing, which are needed to diversify regional economies away from their traditional reliance on natural resources.

Top Key Players

The market research report includes detailed profiles of leading stakeholders in the Distributed Data Grid Market.

- Hazelcast

- Oracle Corporation

- IBM

- Red Hat (An IBM Company)

- GigaSpaces

- Software AG

- ScaleOut Software

- Microsoft Corporation

- TIBCO Software

- VMware, Inc.

- Apache Ignite (Supported by GridGain Systems)

- SAP SE

- DataStax

- Amazon Web Services (AWS)

- Alibaba Cloud

- Hewlett Packard Enterprise (HPE)

- Fujitsu

- Infosys

- WSO2

- Pivotal Software (VMware)

Frequently Asked Questions

What is the primary technical benefit of implementing a Distributed Data Grid (DDG)?

The primary technical benefit of implementing a DDG is achieving ultra-low latency data access and massive horizontal scalability. By caching data in-memory across a cluster of servers, DDGs bypass the performance bottlenecks of disk I/O and enable applications to handle high throughput and concurrent transactions vital for real-time operations.

How do Distributed Data Grids address data consistency issues?

DDGs address data consistency through several mechanisms. These include data replication, sharding that assigns each partition a single owning node, and consistency models ranging from strict transactional consistency (typically applied to smaller, critical updates) to eventual consistency, which favors high-speed reads and application availability across the distributed nodes.
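The trade-off between strict and eventual consistency can be illustrated with a primary and a single replica. In this hypothetical sketch, synchronous replication updates the replica before `put` returns. Asynchronous replication only queues the update, so a read from the replica can briefly return stale (here, missing) data until `drain` delivers the queue. `ReplicatedMap` is an illustrative name, not a product API.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class ReplicatedMap:
    """Primary plus one replica. sync=True applies each write to the replica
    before returning (strict); sync=False queues it (eventual)."""

    def __init__(self, sync: bool):
        self.sync = sync
        self.primary: Dict[str, int] = {}
        self.replica: Dict[str, int] = {}
        self.pending: Deque[Tuple[str, int]] = deque()

    def put(self, key: str, value: int) -> None:
        self.primary[key] = value
        if self.sync:
            self.replica[key] = value  # caller waits for the replica update
        else:
            self.pending.append((key, value))  # replicated later, off the write path

    def read_replica(self, key: str) -> Optional[int]:
        return self.replica.get(key)

    def drain(self) -> None:
        """Deliver queued updates in order; afterwards the replica converges."""
        while self.pending:
            key, value = self.pending.popleft()
            self.replica[key] = value


strict, eventual = ReplicatedMap(sync=True), ReplicatedMap(sync=False)
for m in (strict, eventual):
    m.put("stock:sku1", 5)
print(strict.read_replica("stock:sku1"), eventual.read_replica("stock:sku1"))  # 5 None
eventual.drain()
print(eventual.read_replica("stock:sku1"))  # 5
```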

Which industry vertical is currently the largest consumer of Distributed Data Grid solutions?

The Financial Services (BFSI) vertical is currently the largest consumer of DDG solutions. This dominance is due to the critical need for real-time processing in applications like high-frequency trading, instant payment processing, algorithmic risk management, and immediate fraud detection, where latency must be minimized to maintain competitive edge and regulatory compliance.

Is the Cloud deployment model dominating the Distributed Data Grid Market?

Yes, the Cloud deployment model, particularly Hybrid Cloud, is rapidly gaining dominance in the Distributed Data Grid Market. Organizations favor cloud elasticity and cost-efficiency, driving demand for DDGs that seamlessly integrate with containerization and orchestration technologies like Kubernetes across public and private infrastructure.

How do Distributed Data Grids support Machine Learning and AI applications?

DDGs support ML/AI applications by serving as an extremely fast, high-throughput feature store. They cache pre-processed features and model parameters in memory, allowing AI models to retrieve the data they need with minimal latency for real-time inference and predictions, thereby reducing delays in critical decision loops.

To check our Table of Contents, please mail us at: sales@marketresearchupdate.com

Research Methodology

Market Research Update integrates technology-driven solutions throughout its research process. We use diverse resources to produce the best results for our clients. The success of a research project depends heavily on the research process adopted. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update provides consistency across its research reports, including forecast analysis for a broad range of geographies. The research teams carry out primary and secondary research to design and implement the data collection procedure. The research team then analyzes data on the latest trends and major issues for each industry and country, which helps anticipate future market developments.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data through different methodologies and sources.

- Information Investigation

This step comprises mapping and investigating all the information procured in the previous step. It also includes analyzing discrepancies observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client's requirements.

- Market Formulation

This step entails placing data points in the appropriate market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-expert review of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. An intricately designed validation procedure helps us finalize the data points used in the final calculations.
