ETL Tools Market Size By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 432568 | Date : Dec, 2025 | Pages : 253 | Region : Global | Publisher : MRU

ETL Tools Market Size

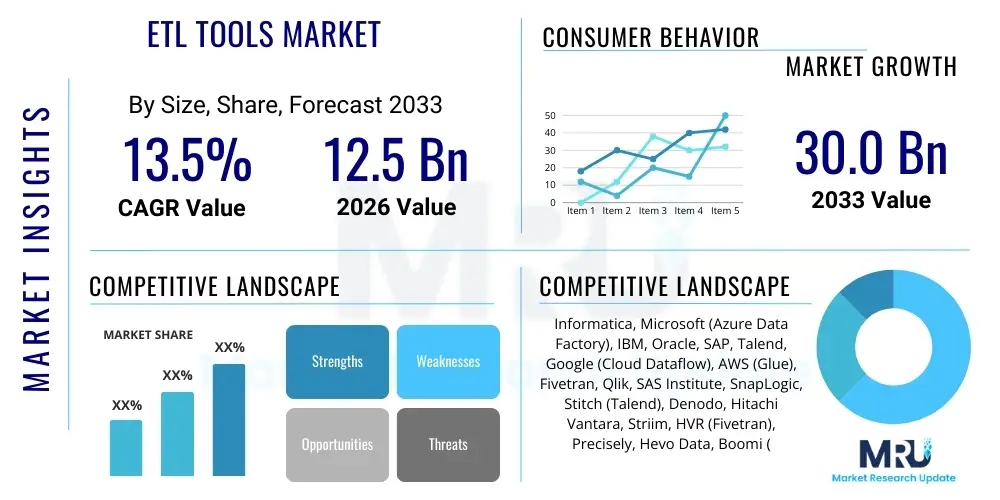

The ETL Tools Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 13.5% between 2026 and 2033. The market is estimated at $12.5 Billion in 2026 and is projected to reach $30.0 Billion by the end of the forecast period in 2033.
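The headline figures can be sanity-checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes seven annual compounding periods between the 2026 and 2033 values. The function names are illustrative only.

```python
# Minimal sketch: check the report's headline figures with the standard
# CAGR formula. Assumes 7 compounding periods between 2026 and 2033.

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value forward at a constant annual rate."""
    return start_value * (1 + rate) ** years

start_2026 = 12.5        # USD billion, from the report
end_2033 = 30.0          # USD billion, from the report
periods = 2033 - 2026    # 7 compounding periods

cagr = implied_cagr(start_2026, end_2033, periods)
projected = project(start_2026, 0.135, periods)

print(f"Implied CAGR: {cagr:.2%}")                        # about 13.3%
print(f"$12.5B at 13.5% for 7 years: ${projected:.1f}B")   # about $30.3B
```

Under these assumptions the stated figures agree to within rounding: $12.5 Billion compounded at 13.5% for seven years gives roughly $30.3 Billion, and the rate that links $12.5 Billion to $30.0 Billion is about 13.3%.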

ETL Tools Market Introduction

The Extract, Transform, Load (ETL) tools market encompasses software solutions designed to facilitate the complex process of moving raw data from disparate sources, cleansing and restructuring it, and finally loading it into a destination system, typically a data warehouse or data lake, for analytical processing. These tools are foundational to modern business intelligence, ensuring data quality, consistency, and accessibility across the enterprise. The core function is to consolidate data silos and create a unified, reliable source of truth, thereby enabling organizations to make informed, data-driven decisions swiftly.
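The three stages described above can be illustrated with a small, self-contained pipeline. This is a minimal sketch, not how any particular ETL product works: an in-memory CSV stands in for the source system, an in-memory SQLite database stands in for the warehouse, and the `orders` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system: inconsistent casing,
# stray whitespace, a missing amount, and a duplicate row.
RAW_CSV = """order_id,customer,amount
1, Alice ,10.50
2,BOB,
3,carol,7.25
3,carol,7.25
"""

def extract(text: str) -> list:
    """Extract: read rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list) -> list:
    """Transform: trim and normalize names, drop rows without an amount,
    and de-duplicate on order_id before anything reaches the target."""
    seen = set()
    clean = []
    for row in rows:
        order_id = int(row["order_id"])
        amount = row["amount"].strip()
        if not amount or order_id in seen:
            continue
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), float(amount)))
    return clean

def load(rows: list, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), warehouse)
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (3, 'Carol', 7.25)]
```

The key property of classic ETL shows up in the ordering: cleansing happens in the pipeline's own code, so only consolidated, de-duplicated rows ever reach the destination.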

Modern ETL solutions have evolved significantly from traditional batch processing systems. The emergence of cloud-native architectures has popularized ELT (Extract, Load, Transform), where data is first loaded into the cloud data warehouse and transformation occurs natively, leveraging the immense computational power of platforms like Snowflake, Amazon Redshift, and Google BigQuery. This shift has driven increased demand for flexible, scalable, and pay-as-you-go ETL/ELT solutions. The primary applications span data migration during system upgrades, operational reporting, regulatory compliance, and constructing complex analytical models essential for competitive advantage.
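The ELT ordering can be sketched the same way. In this minimal example an in-memory SQLite database stands in for a cloud data warehouse such as Snowflake or BigQuery (an assumption for illustration only). Raw records land untouched in a hypothetical `raw_events` staging table, and the cleanup and aggregation run afterwards as SQL inside the warehouse engine.

```python
import sqlite3

# Hypothetical raw records, landed exactly as received (strings, mixed case, a null).
RAW_EVENTS = [
    ("2025-01-01", "eu", "19.99"),
    ("2025-01-01", "EU", "5.01"),
    ("2025-01-02", "us", "12.00"),
    ("2025-01-02", "us", None),
]

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: no cleaning before the data reaches the warehouse.
conn.execute("CREATE TABLE raw_events (event_date TEXT, region TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", RAW_EVENTS)

# Transform: executed by the warehouse's own SQL engine, after loading.
conn.execute("""
    CREATE TABLE daily_revenue AS
    SELECT event_date,
           UPPER(region) AS region,
           ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue
    FROM raw_events
    WHERE amount IS NOT NULL
    GROUP BY event_date, UPPER(region)
""")

print(conn.execute("SELECT * FROM daily_revenue ORDER BY event_date").fetchall())
# [('2025-01-01', 'EU', 25.0), ('2025-01-02', 'US', 12.0)]
```

Because the raw table is kept, the transformation can be rewritten and re-run later without re-extracting from the source, which is one reason ELT fits elastic cloud warehouses well.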

Key benefits driving the adoption of robust ETL tools include enhanced data governance, reduction in manual coding effort through visual interfaces, improved speed of data integration, and compliance with stringent industry regulations like GDPR and HIPAA. Furthermore, the exponential growth in data volume (Big Data) and variety necessitates automated, efficient integration workflows, positioning ETL tools as indispensable infrastructure components for any organization aiming to maximize the value derived from its digital assets. The continuous need for real-time data streaming and operational analytics further cements the market's robust growth trajectory, pushing vendors to incorporate capabilities such as Change Data Capture (CDC) and streaming ingestion.

ETL Tools Market Executive Summary

The ETL Tools Market is experiencing robust acceleration, primarily propelled by the enterprise-wide shift toward cloud data warehousing and the relentless demand for real-time data processing capabilities across various organizational functions. Major business trends highlight a decisive move away from legacy, on-premise ETL systems towards flexible, scalable cloud-native and serverless ELT architectures, minimizing infrastructure overhead and accelerating time-to-insight. This modernization wave is also characterized by the growing importance of data governance and data observability features embedded within integration platforms, addressing concerns related to data security and regulatory compliance, particularly in highly regulated sectors like BFSI and Healthcare.

Regionally, North America maintains its dominance, driven by early adoption of advanced analytics, the presence of major technological innovation hubs, and significant investment in hybrid cloud environments by large enterprises. However, the Asia Pacific (APAC) region is projected to register the fastest growth rate, fueled by rapid digital transformation initiatives, increasing penetration of public cloud services, and the burgeoning volume of digital consumers across developing economies like India and China. Europe remains a key market, focusing intensely on data sovereignty and ensuring integration tools comply strictly with regional data protection frameworks, thereby driving demand for sophisticated data lineage and masking features.

Segment trends reveal that the Cloud Deployment segment is overwhelmingly leading the market expansion, outstripping traditional on-premise solutions due to superior scalability, reduced total cost of ownership (TCO), and ease of integration with other cloud services. Among end-users, the Banking, Financial Services, and Insurance (BFSI) sector is the largest consumer, requiring high-throughput, secure, and compliant data pipelines for fraud detection, personalized banking, and risk management. The trend also points toward specialized, vertical-specific ETL tools that offer pre-built connectors and industry-standard transformation logic, streamlining deployment and maximizing value for niche applications.

AI Impact Analysis on ETL Tools Market

User queries regarding the impact of Artificial Intelligence (AI) and Machine Learning (ML) on ETL tools frequently center on themes of full automation, predictive data quality, skill obsolescence, and the complexity of managing ML data pipelines (MLOps). Users are concerned about whether AI will completely replace traditional data engineers by autonomously designing and maintaining data flows, and how AI-enabled integration platforms will manage the exponential complexity of unstructured data sources. Key expectations revolve around leveraging AI for intelligent data cataloging, automatic schema inference, and proactive identification and resolution of data integration errors before they impact downstream analytics. The demand is high for tools that can self-optimize transformation logic based on performance metrics and anticipate changes in source systems, minimizing maintenance overhead.

AI is fundamentally transforming ETL processes by embedding intelligence throughout the data integration lifecycle, shifting the paradigm from reactive error handling to proactive optimization and self-service capabilities. Traditional ETL required extensive manual effort for mapping, coding transformations, and establishing data lineage; AI now automates large portions of these tasks through capabilities such as natural language processing (NLP) for metadata enrichment and ML algorithms for predicting optimal resource allocation for complex jobs. This automation drastically reduces the time required to onboard new data sources and improves the overall efficiency of the data team, allowing data engineers to focus on higher-value architectural tasks rather than routine maintenance.
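Onboarding a new source usually starts with working out its schema. Commercial tools may use ML models for this. The sketch below substitutes a simple rule-based heuristic to show the idea: it tries progressively wider types against sample values and keeps the narrowest one that fits every value. The type names and sample columns are hypothetical.

```python
from datetime import date

def infer_type(values: list) -> str:
    """Return the narrowest type that every non-empty sample value parses as."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "TEXT"
    candidates = [("INTEGER", int), ("REAL", float), ("DATE", date.fromisoformat)]
    for type_name, parse in candidates:
        try:
            for v in non_empty:
                parse(v)
        except ValueError:
            continue  # at least one value failed; try the next candidate type
        return type_name
    return "TEXT"

def infer_schema(rows: list) -> dict:
    """Infer a column -> type mapping from sample rows of string values."""
    return {col: infer_type([r.get(col, "") for r in rows]) for col in rows[0]}

sample = [
    {"id": "1", "price": "9.5", "signup": "2024-03-01", "name": "Ann"},
    {"id": "2", "price": "", "signup": "2024-04-15", "name": "Ben"},
]
print(infer_schema(sample))
# {'id': 'INTEGER', 'price': 'REAL', 'signup': 'DATE', 'name': 'TEXT'}
```

A learned approach would replace the fixed candidate list with a model that also weighs column names and historical mappings, but the output is the same kind of schema proposal for an engineer to review.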

Furthermore, AI-driven data quality and governance are becoming standard features in modern ETL platforms. ML models can analyze historical data quality issues to establish baselines, detect anomalies in incoming data streams in real time, and suggest corrective transformation rules or execute them without human intervention. This shift helps ensure that the data flowing into analytical systems is trustworthy. The integration of AI also supports the emerging field of feature engineering for ML models, where dedicated ETL/ELT pipelines are built specifically to prepare and manage the large volumes of structured and unstructured data required to train enterprise-grade machine learning applications.
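The baseline-then-detect idea can be reduced to its simplest statistical form. The sketch below uses a z-score over a hypothetical history of daily row counts. This is a deliberately simple stand-in for the ML models described above, and the 3-sigma threshold is an assumption.

```python
from statistics import mean, stdev

def build_baseline(history: list) -> tuple:
    """Summarize past observations of a metric (e.g. rows per daily load)."""
    return mean(history), stdev(history)

def is_anomalous(value: float, baseline: tuple, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical history of daily row counts for one source feed.
daily_rows = [1000, 1020, 980, 1010, 990, 1005, 995]
baseline = build_baseline(daily_rows)

for incoming in (1012, 400):
    status = "anomaly" if is_anomalous(incoming, baseline) else "ok"
    print(incoming, status)
# 1012 ok
# 400 anomaly
```

In a pipeline, a check like this would run before the load step, so a truncated feed (here, 400 rows instead of about 1,000) is held back instead of silently skewing downstream reports.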

- AI enables intelligent data mapping and schema harmonization by automatically learning relationships between data sources.

- Predictive data quality management uses machine learning to detect and fix data errors in real time during the ingestion phase.

- AI-driven automation reduces manual coding, accelerates the development of data pipelines, and minimizes human error.

- Self-optimizing ETL jobs can leverage techniques such as reinforcement learning to dynamically adjust resource utilization and execution paths based on workload patterns.

- The rise of specialized MLOps pipelines within ETL tools facilitates the management and tracking of features used in production machine learning models.

- Enhanced metadata management utilizes NLP and computer vision (for unstructured data) to automatically catalog and understand data assets.

- AI assists in proactive governance and compliance by automatically flagging sensitive data fields requiring masking or specific handling.
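The last point, automatically flagging sensitive fields, can be sketched with pattern rules applied to column samples. Production tools may combine such rules with ML classifiers. Here the two regular expressions, the 80% match threshold, and the sample columns are all hypothetical choices for illustration.

```python
import re

# Hypothetical detection rules; dict order matters, first match wins.
PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
}

def flag_sensitive_columns(rows: list, min_share: float = 0.8) -> dict:
    """Label a column when at least `min_share` of its non-empty values
    fully match one of the sensitive-data patterns."""
    flags = {}
    for col in rows[0]:
        values = [str(r[col]) for r in rows if r.get(col) not in (None, "")]
        if not values:
            continue
        for label, pattern in PATTERNS.items():
            hits = sum(1 for v in values if pattern.fullmatch(v))
            if hits / len(values) >= min_share:
                flags[col] = label
                break
    return flags

rows = [
    {"id": 1, "contact": "ann@example.com", "ssn": "123-45-6789", "note": "called back"},
    {"id": 2, "contact": "ben@example.org", "ssn": "987-65-4321", "note": "ok"},
]
print(flag_sensitive_columns(rows))
# {'contact': 'email', 'ssn': 'us_ssn'}
```

The flagged columns would then be routed to masking or tokenization rules before the data is loaded.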

DRO & Impact Forces Of ETL Tools Market

The ETL Tools Market is heavily influenced by dynamic forces centered around rapid technological adoption and the growing complexity of enterprise data environments. Drivers, such as the explosive proliferation of Big Data and the pervasive migration of analytical workloads to cloud platforms, necessitate robust and scalable integration solutions. Restraints primarily involve challenges related to data security and privacy concerns, particularly when handling multi-cloud deployments and complying with divergent global regulations, alongside the high initial investment and complexity associated with integrating legacy systems with modern cloud-native tools. Opportunities are abundant in the areas of real-time data streaming integration, the adoption of Data Fabric and Data Mesh architectures, and the increasing demand for self-service ETL capabilities that empower citizen data integrators.

Key drivers include the imperative for businesses across all sectors to gain immediate, actionable insights, pushing the requirement for low-latency data ingestion techniques like Change Data Capture (CDC). The widespread adoption of SaaS applications (e.g., Salesforce, ServiceNow) generates siloed operational data, making ETL tools essential for consolidation and holistic analysis. Furthermore, the sheer versatility and cost-efficiency offered by hyperscale cloud vendors (AWS, Azure, GCP) encourage companies to move their entire data stack to the cloud, significantly boosting demand for cloud-native ELT solutions that can leverage cloud infrastructure elasticity for massive data transformations.

However, the market faces significant hurdles. Data governance and regulatory compliance remain persistent restraints; as data moves between different geopolitical zones, ensuring that ETL processes adhere to localized data sovereignty and privacy laws requires sophisticated auditing and masking features, adding complexity and cost. Additionally, the shortage of skilled data engineers capable of mastering and deploying complex, modern integration platforms poses an ongoing challenge to rapid market expansion. Despite these restraints, the market is poised for growth driven by disruptive opportunities, particularly in integrating operational technology (OT) data from IoT and edge devices, and utilizing serverless computing to make ETL processes highly elastic and consumption-based, fundamentally lowering the barrier to entry for small and medium enterprises (SMEs).

Segmentation Analysis

The ETL Tools market segmentation provides critical insights into the varying needs and adoption patterns across deployment models, components, end-user industries, and organization sizes. The market is increasingly segmented by technological capability, differentiating between traditional batch-based ETL and modern stream-processing ELT tools, reflecting the industry shift toward real-time analytics. Cloud deployment dominates the forecast, driven by its inherent scalability and lower infrastructure management overhead, positioning it as the preferred choice for digitally native companies and organizations undergoing major digital transformation initiatives.

Analysis by component shows high demand for ETL services, including consulting, managed services, and ongoing maintenance, which complement the software tools and are often required in complex, hybrid data environments. Industrially, the BFSI sector represents the largest revenue share due to its intensive data requirements for compliance, risk assessment, and customer personalization. Meanwhile, the IT & Telecom segment is a leading adopter of the latest technologies, particularly those focused on the high-speed, high-volume data integration essential for network monitoring and service delivery analytics. Geographically, the market is fragmented, with differing levels of technological maturity and differing regulatory landscapes shaping purchasing decisions and tool complexity requirements across regions.

- By Component:
  - Tools/Software (On-Premise and Cloud-based)
  - Services (Consulting, Integration, Maintenance, Managed Services)

- By Deployment Model:
  - On-Premise
  - Cloud (Public, Private, Hybrid)

- By Organization Size:
  - Small and Medium Enterprises (SMEs)
  - Large Enterprises

- By End-User Industry:
  - Banking, Financial Services, and Insurance (BFSI)
  - IT and Telecom
  - Retail and E-commerce
  - Healthcare and Life Sciences
  - Government and Public Sector
  - Manufacturing
  - Others (Energy, Media)

Value Chain Analysis For ETL Tools Market

The value chain for the ETL Tools Market begins with the upstream processes focused on sourcing and preparing the core technologies necessary for integration. This stage involves the development of proprietary database connectors, the adoption of open-source frameworks (such as Apache Spark or Apache Kafka), and securing partnerships with major cloud infrastructure providers (the hyperscalers). Upstream suppliers are focused on delivering foundational software components that optimize data retrieval and storage, emphasizing efficiency and compatibility with diverse data structures, including relational, NoSQL, and graph databases. Innovation at this stage, particularly in integrating AI/ML capabilities for automated metadata generation, is crucial for competitive differentiation.

The core midstream activities revolve around the ETL tool vendors themselves, encompassing product development, platform refinement, and the creation of highly scalable integration engines. Vendors invest heavily in developing user-friendly, low-code/no-code interfaces to democratize data integration, alongside robust security and governance frameworks. Distribution channels are varied: direct sales teams handle large enterprise deals requiring extensive customization and consulting, while indirect channels, including system integrators (SIs), value-added resellers (VARs), and cloud marketplaces, are crucial for reaching SMEs and facilitating rapid adoption of standardized, cloud-based offerings. The shift to a subscription-based, consumption-driven pricing model (SaaS/PaaS) has fundamentally reshaped this midstream distribution strategy.

Downstream activities center on deployment, maintenance, and maximizing end-user value. This includes professional services offered by vendors or third-party consultants to tailor the ETL solution to specific organizational workflows, data modeling requirements, and compliance mandates. End-users primarily leverage the integrated data for business intelligence, advanced analytics, and operational applications. The feedback loop from downstream users concerning performance bottlenecks, new data source requirements, and evolving governance needs drives continuous product refinement upstream, ensuring the value chain remains agile and responsive to the evolving demands for data reliability and velocity.

ETL Tools Market Potential Customers

Potential customers for ETL tools span the entirety of the digital economy, encompassing any organization that relies on data generated across multiple systems for strategic decision-making and operational excellence. The primary target demographic includes Chief Data Officers (CDOs), Data Engineering teams, and IT leaders responsible for maintaining the organization’s data architecture and ensuring data quality. These buyers are typically grappling with challenges related to data silo proliferation, migration to cloud data warehouses, or the need to consolidate disparate data sources following mergers and acquisitions.

Large enterprises, particularly those in the Banking, Financial Services, and Insurance (BFSI) sector, represent the most critical customer segment. BFSI institutions require extremely high-throughput ETL solutions to handle transaction volumes, perform real-time fraud detection, comply with stringent regulatory reporting requirements (e.g., Basel III, Solvency II), and develop personalized customer experiences. Their procurement criteria emphasize security, governance, and the ability to integrate securely across on-premise mainframes and public cloud environments, favoring established vendors with robust compliance track records.

A rapidly expanding customer base is found within Small and Medium Enterprises (SMEs) and high-growth technology startups. Driven by accessible, cloud-native ELT solutions that operate on a consumption model, these organizations seek rapid deployment and minimal administrative overhead. E-commerce and Retail sectors also constitute significant buyers, relying on ETL tools to integrate point-of-sale data, supply chain logistics, and digital marketing insights to optimize inventory and personalize online shopping experiences. Furthermore, government agencies are increasingly investing in modern ETL capabilities to improve public service delivery, manage large databases (like census data), and enhance transparency and accountability through consolidated data reporting.

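| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | $12.5 Billion |
| Market Forecast in 2033 | $30.0 Billion |
| Growth Rate | 13.5% CAGR |
| Historical Years | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Period | 2026 to 2033 |
| DRO & Impact Forces | Drivers: Big Data growth, migration of analytical workloads to the cloud, SaaS proliferation, demand for real-time insights; Restraints: data security, privacy, and regulatory compliance, cost and complexity of integrating legacy systems, shortage of skilled data engineers; Opportunities: real-time streaming integration, Data Fabric and Data Mesh architectures, self-service ETL, IoT/edge data integration, serverless ETL |
| Segments Covered | By Component, By Deployment Model, By Organization Size, By End-User Industry |
| Key Companies Covered | Informatica, Microsoft (Azure Data Factory), IBM, Oracle, SAP, Talend, Google (Cloud Dataflow), AWS (Glue), Fivetran, Qlik, SAS Institute, SnapLogic, Stitch (Talend), Denodo, Hitachi Vantara, Striim, HVR (Fivetran), Precisely, Hevo Data, Boomi |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |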

ETL Tools Market Key Technology Landscape

The technology landscape of the ETL market is undergoing rapid modernization, moving distinctly toward solutions that prioritize agility, scalability, and real-time data ingestion. A major technological shift is the widespread adoption of cloud-native and serverless computing models. Tools leveraging platforms like AWS Lambda, Azure Functions, or Google Cloud Functions allow data pipelines to execute transformation logic without dedicated infrastructure management, enabling a consumption-based cost structure and on-demand scaling during peak loads. This architecture facilitates the implementation of modern ELT paradigms where the transformation steps are executed directly within the cloud data warehouse (e.g., Snowflake's virtual warehouses), which can substantially accelerate processing compared with transforming data on separate middleware servers.
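A serverless transformation step is, at its core, a stateless function invoked once per event. The sketch below follows the AWS Lambda Python handler convention, `lambda_handler(event, context)`. The `records` payload field and the `sku`/`qty` schema are hypothetical, because a real trigger (queue, object store, stream) defines its own event structure.

```python
import json

def lambda_handler(event, context):
    """Hypothetical transformation step in the AWS Lambda Python handler shape.

    The "records" field and the sku/qty schema are illustrative assumptions.
    """
    records = event.get("records", [])
    cleaned = [
        {"sku": r["sku"].strip().upper(), "qty": int(r["qty"])}
        for r in records
        if int(r.get("qty", 0)) > 0  # drop zero or missing quantities
    ]
    # Stateless: nothing is kept between invocations, so the platform can run
    # as many copies in parallel as the incoming load requires.
    return {"count": len(cleaned), "records": cleaned}

# Local invocation with a sample event; context is unused here.
result = lambda_handler(
    {"records": [{"sku": " ab1 ", "qty": "3"}, {"sku": "cd2", "qty": "0"}]},
    None,
)
print(json.dumps(result))
# {"count": 1, "records": [{"sku": "AB1", "qty": 3}]}
```

Because billing follows invocations rather than provisioned servers, cost tracks actual data volume, which is the consumption-based structure the paragraph describes.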

Another crucial technological advancement is the focus on Change Data Capture (CDC) and stream processing capabilities. Organizations are moving beyond daily or hourly batch refreshes towards continuous, event-driven data flows. CDC technology captures and propagates only the data changes from source systems in near real time, drastically reducing network load and latency, which is vital for operational intelligence and timely customer interactions. Platforms integrating open-source stream processing frameworks like Apache Kafka and Apache Flink are highly sought after, enabling complex transformations and aggregations on data streams before they reach the final destination, supporting applications like real-time bidding, supply chain tracking, and immediate anomaly detection.
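The "only the changes" idea behind CDC can be shown with a simplified snapshot-comparison variant. Log-based CDC tools read the database transaction log instead of diffing snapshots, so treat this as a conceptual sketch under that simplification. The table contents and the event tuple format are hypothetical.

```python
def capture_changes(previous: dict, current: dict) -> list:
    """Diff two keyed snapshots of a source table and emit only the changes
    as (operation, key, row) events."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key in sorted(previous.keys() - current.keys()):
        changes.append(("delete", key, None))
    return changes

def apply_changes(target: dict, changes: list) -> None:
    """Replay change events against a downstream copy of the table."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:  # insert or update
            target[key] = row

before = {1: ("Ann", "gold"), 2: ("Ben", "silver"), 3: ("Cy", "bronze")}
after = {1: ("Ann", "gold"), 2: ("Ben", "gold"), 4: ("Dee", "bronze")}

events = capture_changes(before, after)
replica = dict(before)  # downstream copy, in sync with `before`
apply_changes(replica, events)

print(events)
# [('update', 2, ('Ben', 'gold')), ('insert', 4, ('Dee', 'bronze')), ('delete', 3, None)]
```

Only three events travel downstream, and row 1 is never re-sent. At scale, this is where the reduction in network load and latency comes from.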

Furthermore, the development of sophisticated metadata management and data governance tools is defining the advanced capabilities of modern ETL platforms. Technologies such as automated data lineage tracking, semantic data cataloging, and AI-driven data observability are essential for compliance and ensuring data trustworthiness in complex hybrid environments. The push towards Data Mesh architectures—a decentralized approach where data is treated as a product managed by domain teams—requires ETL tools capable of supporting distributed governance models, exposing data assets via APIs, and ensuring interoperability across diverse infrastructure stacks, necessitating flexible, API-driven integration platforms.

Regional Highlights

- North America: This region holds the largest market share, characterized by high technological maturity, the early and pervasive adoption of cloud infrastructure, and the presence of major cloud service providers and leading ETL solution vendors. The robust regulatory environment, particularly in the BFSI and Healthcare sectors, drives consistent demand for advanced data governance and compliance-focused ETL solutions. Investment in sophisticated technologies like AI-driven ETL and Data Mesh architectures is highest here, fueled by significant venture capital funding and a strong corporate culture emphasizing data monetization.

- Europe: Europe represents a mature market with steady growth, heavily influenced by strict data protection regulations, primarily the General Data Protection Regulation (GDPR). This regulatory environment necessitates ETL tools with advanced features for data masking, pseudonymization, and comprehensive data lineage tracking to ensure cross-border data transfer compliance. The region demonstrates strong demand for hybrid and multi-cloud integration solutions as organizations seek to balance cloud benefits with requirements for data sovereignty and localized storage. The UK, Germany, and France are the major revenue contributors, focusing on integrating legacy industrial data with modern analytical platforms.

- Asia Pacific (APAC): APAC is projected to be the fastest-growing market during the forecast period. This rapid expansion is driven by massive digital transformation initiatives across developing economies (India, China, Southeast Asia), significant foreign direct investment, and booming adoption of public cloud services by SMEs. While price sensitivity remains a factor, the enormous volume of new digital consumers and the subsequent need for scalable data infrastructure (particularly in retail, e-commerce, and telecommunications) are boosting the demand for flexible, cloud-native ELT tools. Government initiatives to promote smart cities and digital governance also contribute significantly to market expansion.

- Latin America (LATAM): The LATAM market is characterized by emerging maturity, with significant growth potential driven by the modernization of financial services and increasing efforts to address infrastructure deficiencies through cloud computing adoption. Brazil and Mexico are key markets, demonstrating rising investment in data infrastructure to support growing digital consumption and address internal compliance needs. Economic volatility and the need for cost-effective solutions often favor SaaS-based, easily deployable ETL platforms over extensive on-premise implementations.

- Middle East and Africa (MEA): Growth in MEA is primarily concentrated in the Gulf Cooperation Council (GCC) countries, driven by massive government-led initiatives (e.g., Saudi Vision 2030, UAE National Agenda) aimed at economic diversification and technological advancement. Investment in large-scale smart city projects and digitalization of the oil & gas and financial sectors creates strong demand for high-performance ETL tools capable of handling complex industrial data and securing sensitive governmental information. Adoption is accelerating as regional enterprises increasingly partner with global cloud providers to build local data centers.
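The masking and pseudonymization features that European buyers require can be illustrated with two common building blocks: a keyed HMAC-SHA256 token for identifiers and partial masking for display. This is a minimal sketch. The hard-coded key and the field names are hypothetical, and in practice the key would be held in a secrets manager, separately from the data.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; keep real keys in a secrets manager,
# separate from the pseudonymized data.
SECRET_KEY = b"example-key-do-not-use"

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token.

    Deterministic: the same input always yields the same token, so joins
    across tables still work, but the token cannot be linked back to the
    person without access to the separately held key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters (e.g. for display)."""
    if visible <= 0:
        return "*" * len(value)
    return "*" * max(len(value) - visible, 0) + value[-visible:]

row = {"email": "alice@example.com", "card": "4111111111111111", "country": "DE"}
safe_row = {
    "email_token": pseudonymize(row["email"]),
    "card_masked": mask(row["card"]),
    "country": row["country"],  # non-identifying field passes through
}
print(safe_row["card_masked"])  # ************1111
```

Deterministic tokens preserve analytical joins, while masking suits fields that only need to be recognizable to a human.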

Top Key Players

The market research report includes detailed profiles of the following leading stakeholders in the ETL Tools Market:

- Informatica

- Microsoft (Azure Data Factory)

- IBM

- Oracle

- SAP

- Talend

- Google (Cloud Dataflow)

- AWS (Glue)

- Fivetran

- Qlik

- SAS Institute

- SnapLogic

- Denodo

- Hitachi Vantara

- Precisely

- Stitch (Talend)

- Hevo Data

- Boomi

- Striim

- TIBCO Software

Frequently Asked Questions

What is the primary difference between ETL and ELT, and which is dominating the market?

ETL (Extract, Transform, Load) performs data transformation on a staging server before loading it into the data warehouse. ELT (Extract, Load, Transform) loads raw data directly into the cloud data warehouse and leverages the cloud platform's computing power for transformation. ELT is currently dominating the market due to its scalability, efficiency with Big Data volumes, and alignment with modern cloud data architectures like Snowflake and Amazon Redshift, offering faster time-to-insight.

How is the adoption of AI fundamentally changing the role of ETL tools?

AI is transforming ETL by enabling intelligent automation across the pipeline. This includes automated data mapping, proactive identification of data quality issues using machine learning, and self-optimization of integration jobs. AI enhances governance by automatically tagging sensitive data, and it significantly reduces the manual effort traditionally required of data engineers for routine pipeline design and maintenance, freeing them to focus on data architecture and strategy.

Which deployment model (cloud or on-premise) is driving the most growth in the ETL market?

The Cloud deployment model is the primary growth driver, significantly outpacing on-premise solutions. Cloud-based ETL/ELT tools offer superior elasticity, minimal infrastructure management overhead, and a highly competitive total cost of ownership (TCO). This model allows businesses, particularly SMEs and large enterprises undertaking digital transformation, to scale their data integration capabilities rapidly based on demand without massive upfront capital expenditure.

What are the biggest challenges restricting ETL tool adoption in the enterprise sector?

The most significant restraints include ensuring stringent data security and regulatory compliance, particularly across hybrid and multi-cloud environments (e.g., GDPR, HIPAA requirements). Additionally, the complexity and high initial investment required to integrate modern ETL solutions with existing legacy systems, often involving custom coding and significant technical debt, pose substantial barriers for large, established enterprises.

Which industry sector is the largest consumer of ETL tools and why?

The Banking, Financial Services, and Insurance (BFSI) sector is the largest consumer. This dominance stems from the sector's immense volume of transaction data, coupled with critical needs for high-speed fraud detection, sophisticated risk modeling, personalized customer experience management, and mandatory regulatory compliance reporting that requires robust, highly secure, and compliant data integration pipelines.

This comprehensive market insights report analyzes the trajectory of the ETL Tools Market, detailing its growth drivers, technological evolution, and strategic segmentation across global regions and key industry verticals, providing essential intelligence for stakeholders navigating the complexities of modern data integration.

The imperative for seamless data flow, data quality assurance, and real-time processing underpins the foundational strength of the ETL tools segment. As data volumes continue their exponential surge, driven by IoT, generative AI, and multi-channel customer interactions, the sophistication of ETL platforms—incorporating features like Data Mesh compatibility, autonomous pipelines, and advanced data cataloging—will define the competitive landscape. Enterprises seeking long-term operational resilience and superior analytical performance must prioritize investment in cloud-native, scalable ELT solutions that can seamlessly adapt to evolving data sources and regulatory mandates, ensuring data readiness for future business intelligence and machine learning applications. The sustained innovation in stream processing and serverless technology confirms the market's shift towards high-velocity data management, solidifying its role as a critical pillar of enterprise digital architecture.

Market projections indicate sustained double-digit growth, influenced heavily by the ongoing consolidation among major technology vendors, who are rapidly acquiring specialized ELT startups to integrate sophisticated, niche capabilities into their core cloud offerings. This consolidation trend benefits end-users by simplifying vendor ecosystems but also places a premium on vendor lock-in mitigation strategies. Future market dynamics will be increasingly shaped by the adoption of low-code/no-code interfaces, democratizing data integration beyond specialized engineering teams and accelerating the creation of decentralized data products in line with Data Mesh principles. Successful market navigation requires a strategic focus on platforms that offer robust data governance alongside unparalleled performance and flexibility in managing hybrid and multi-cloud environments.

The North American region's continued technological leadership, particularly in adopting advanced ETL methodologies, sets the global standard for innovation, pushing Asian and European markets to quickly adopt similar cloud-centric strategies. Policy changes, such as new data privacy regulations in emerging economies, introduce localized complexities, necessitating ETL tools with highly configurable features for data residency and localized compliance. Strategic business investments are shifting towards integrated data management suites that combine ETL functionality with data quality, master data management (MDM), and comprehensive data governance under a single umbrella, offering a holistic approach to data enablement and strategic intelligence across the enterprise value chain.

Technological advancement is not limited to performance gains; significant effort is being directed toward making ETL solutions more environmentally sustainable. By optimizing computational efficiency and leveraging serverless, consumption-based architectures, vendors are indirectly contributing to reduced energy footprints associated with massive data processing operations. This "Green ETL" focus is becoming increasingly relevant in procurement decisions for environmentally conscious large enterprises, especially those operating under strict ESG (Environmental, Social, and Governance) mandates. This convergence of technical performance, governance, and sustainability criteria ensures the long-term viability and essential nature of the ETL tools market in the global digital ecosystem.

The competitive differentiation among major players is increasingly found in the breadth and depth of pre-built connectors (supporting both mainstream SaaS applications and specialized industry sources) and the effectiveness of their AI/ML augmentation for operational tasks. Companies that successfully bridge the gap between traditional data warehousing needs and modern streaming analytics requirements are best positioned for future market leadership. The shift from simply moving data to intelligently curating and preparing data for highly specific analytical models—including those underpinning generative AI applications—marks the next frontier for market development and technological innovation within the ETL landscape.

Furthermore, the segmentation analysis underscores the varying needs of different organizational sizes. While large enterprises demand robust, complex solutions capable of handling petabyte-scale data and intricate regulatory requirements, SMEs prioritize ease of use, rapid deployment, and affordability offered by SaaS-based, low-maintenance ELT platforms. This bifurcation in demand ensures that the market supports a diverse ecosystem of providers, ranging from established enterprise data giants offering comprehensive suites to niche startups focusing on hyper-specific cloud or vertical integration challenges. Understanding these distinct customer profiles is paramount for vendors in tailoring product offerings and go-to-market strategies effectively for sustainable growth across the global market.

The detailed value chain analysis highlights the pivotal role of system integrators (SIs) and consulting partners in facilitating complex deployments, especially in hybrid environments where legacy systems must coexist with new cloud data stacks. These partners supply the expertise needed to bridge technological gaps and implement transformations successfully, and they often drive the final adoption decision. Upstream technology providers, particularly those offering advanced processing frameworks and robust API connectivity, remain essential as the foundational layer on which vendors build their differentiating features. Together, these relationships underline the collaborative and interdependent nature of the ETL market ecosystem.

Investment in data lineage capabilities is escalating, driven by the need for clear audit trails in complex, multi-stage data pipelines; such trails are crucial for debugging, impact analysis, and regulatory compliance checks. Modern ETL tools are expected to provide end-to-end visibility, tracing data flow from the point of ingestion through every transformation step to the final analytical report. This transparency is no longer a luxury but a fundamental necessity for organizations seeking to maintain trust in their data and comply with global data governance standards, which further raises the requirements placed on ETL platforms in both regulated and non-regulated industries worldwide.
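To illustrate what end-to-end lineage means in practice, the following is a minimal, hypothetical Python sketch, not taken from any vendor's product or from this report's research. It assumes each pipeline step registers the datasets it reads and writes; the class `LineageGraph`, its methods `record_step`, `trace` and `impacted`, and all step and dataset names are illustrative assumptions. `trace` walks upstream from a report to its ingestion sources (useful for debugging and audits), while `impacted` walks downstream from a source (impact analysis).

```python
from collections import defaultdict


class LineageGraph:
    """Hypothetical lineage store for a multi-stage ETL pipeline (illustrative only)."""

    def __init__(self):
        # dataset -> list of (step name, input datasets) that produced it
        self._producers = defaultdict(list)
        # dataset -> set of datasets derived directly from it
        self._consumers = defaultdict(set)

    def record_step(self, step, inputs, outputs):
        """Register that `step` read `inputs` and wrote `outputs`."""
        for out in outputs:
            self._producers[out].append((step, tuple(inputs)))
        for inp in inputs:
            for out in outputs:
                self._consumers[inp].add(out)

    def trace(self, dataset):
        """Upstream trace: return (ingestion sources, transformation steps) behind `dataset`."""
        sources, steps = set(), set()
        stack, seen = [dataset], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            producers = self._producers.get(current)
            if not producers:
                # No recorded producer, so treat it as a point of ingestion.
                sources.add(current)
                continue
            for step, inputs in producers:
                steps.add(step)
                stack.extend(inputs)
        return sources, steps

    def impacted(self, dataset):
        """Impact analysis: every dataset downstream of `dataset`."""
        result, stack = set(), [dataset]
        while stack:
            current = stack.pop()
            for out in self._consumers.get(current, ()):
                if out not in result:
                    result.add(out)
                    stack.append(out)
        return result


# Hypothetical four-step pipeline: two extracts, a join, and a report aggregation.
graph = LineageGraph()
graph.record_step("extract_crm", inputs=["crm_api"], outputs=["raw_customers"])
graph.record_step("extract_erp", inputs=["erp_db"], outputs=["raw_orders"])
graph.record_step("join_clean", inputs=["raw_customers", "raw_orders"], outputs=["customer_orders"])
graph.record_step("aggregate", inputs=["customer_orders"], outputs=["revenue_report"])

sources, steps = graph.trace("revenue_report")
print(sorted(sources))  # ['crm_api', 'erp_db']
print(sorted(steps))    # ['aggregate', 'extract_crm', 'extract_erp', 'join_clean']
print(sorted(graph.impacted("erp_db")))  # ['customer_orders', 'raw_orders', 'revenue_report']
```

The graph is kept in both directions so that upstream audits and downstream impact checks are each a simple traversal; commercial platforms typically persist this metadata centrally rather than in memory.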

The market trajectory is closely aligned with broader cloud infrastructure spending and the global adoption rate of analytical platforms. As more enterprises move their core business operations to the cloud, the need for ETL tools that manage inter-cloud and cross-platform data flows increases. This demand for interoperability and vendor neutrality drives innovation in open-source standards and API design, helping keep data pipelines portable and reducing reliance on a single vendor, a key strategic consideration for CIOs and CTOs planning their long-term data strategy.

Finally, the growing maturity of the ETL tools market allows for specialization: many vendors now develop purpose-built connectors and transformation templates for specialized applications, such as integrating electronic health records (EHR) data in healthcare or managing operational technology (OT) data from industrial sensors in manufacturing. This verticalization deepens industry relevance and accelerates time-to-value for end users, turning ETL solutions from generic tools into strategic enablers of industry-specific data intelligence and operational efficiency. It also reflects the growing complexity and diversity of global data requirements.

To check our Table of Contents, please mail us at: sales@marketresearchupdate.com

Research Methodology

Market Research Update integrates technology-driven solutions into every step of its research process. We use diverse resources to produce the best results for our clients. The success of a research project depends entirely on the research process a company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We take pride in our extensive coverage of numerous major industry domains.

Market Research Update provides consistency across its research reports, along with forecast analysis spanning a wide range of geographies. Our research teams conduct primary and secondary research to design and implement the data collection procedure. The team then analyzes data on the latest trends and major issues with reference to each industry and country, which helps determine anticipated future market developments.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data through different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured in the previous step. It also includes the analysis of discrepancies observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information drawn from numerous sources to fulfill each client's requirements.

- Market Formulation

This step entails placing data points in suitable market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-specialist review of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. An intricately designed validation process helps us finalize the data points used in the final calculations.

