AI Inference Server Market Size, By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 441818 | Date : Feb, 2026 | Pages : 251 | Region : Global | Publisher : MRU

AI Inference Server Market Size

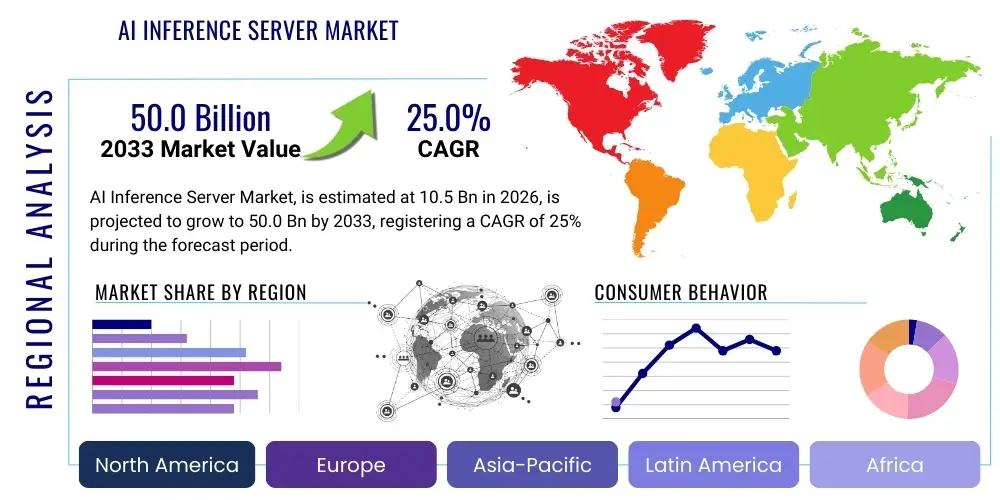

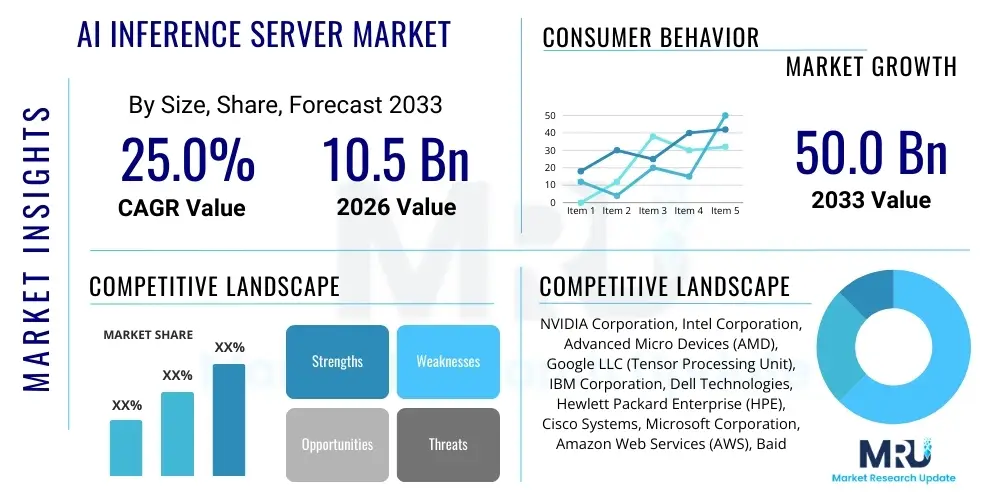

The AI Inference Server Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 25.0% between 2026 and 2033. The market is estimated at USD 10.5 Billion in 2026 and is projected to reach USD 50.0 Billion by the end of the forecast period in 2033.
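The headline figures can be cross-checked with the standard compound-growth formula. The sketch below assumes seven compounding periods (2026 to 2033) and treats the 2026 estimate as the starting value; the function names `cagr` and `project` are illustrative and do not come from the report.

```python
def cagr(start_value, end_value, years):
    """Return the compound annual growth rate as a fraction."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Grow start_value at a constant annual rate for the given number of years."""
    return start_value * (1.0 + rate) ** years


# Report figures: USD 10.5 Billion (2026) and USD 50.0 Billion (2033).
# Assumption: 2033 - 2026 = 7 compounding periods.
periods = 2033 - 2026
implied_rate = cagr(10.5, 50.0, periods)
print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2033 value at a 25% CAGR: USD {project(10.5, 0.25, periods):.1f} Billion")
```

Under that assumption the implied rate rounds to the stated 25.0%, so the three headline numbers are mutually consistent.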

AI Inference Server Market Introduction

The AI Inference Server Market encompasses specialized hardware systems engineered and optimized for the high-speed execution of trained Artificial Intelligence models in production environments. These servers are fundamentally distinct from AI training infrastructure, focusing intensely on ultra-low latency, energy efficiency, and high-throughput processing to deliver real-time AI services. The core components often include dedicated accelerators such as Graphics Processing Units (GPUs), Application-Specific Integrated Circuits (ASICs), and Field-Programmable Gate Arrays (FPGAs), which efficiently handle the massive tensor calculations required for deep learning inference across diverse applications.

The primary product function is to operationalize AI models across cloud and edge computing paradigms, supporting major applications like natural language processing (NLP), sophisticated computer vision systems, recommendation engines, and predictive maintenance. Key benefits derived from utilizing these dedicated servers include significant reductions in response latency, essential for critical applications such as autonomous driving and financial trading, along with improved computational efficiency compared to general-purpose CPUs. This operational efficiency translates directly into lower Total Cost of Ownership (TCO) for enterprises running high-volume AI workloads at scale.

Market expansion is primarily driven by the mass commercialization of Artificial Intelligence, particularly the explosive growth of Large Language Models (LLMs) and generative AI, which require unprecedented inference capabilities. The widespread adoption of IoT devices and 5G networks further amplifies demand, necessitating high-performance inference processing closer to the data source (the edge). Furthermore, technological advancements in hardware virtualization and in inference optimization techniques (such as model quantization and pruning) continually enhance server performance, broadening the market appeal to industries seeking actionable, instantaneous insights from their deployed AI solutions.

AI Inference Server Market Executive Summary

The global AI Inference Server Market is experiencing dynamic evolution, marked by intense strategic competition focused on architectural innovation and power efficiency. Business trends reveal a pronounced shift toward customized silicon (ASICs) developed internally by hyperscale cloud providers, aiming to reduce reliance on merchant silicon and achieve superior performance per watt for specific high-volume workloads. Furthermore, market players are increasingly investing in sophisticated software stacks that enhance hardware utilization, enabling optimized deployment of complex models like LLMs using techniques such as quantization and sparsity. Strategic alliances between hardware vendors and system integrators are vital for penetrating vertical markets that require complex, tailored inference solutions.

Regionally, North America maintains its leadership position, underpinned by the substantial capital investments made by technology titans in AI infrastructure and the rapid development cycle of cutting-edge AI software. Asia Pacific is projected to be the fastest-growing region, fueled by governmental support for digital infrastructure, rapid deployment of 5G networks, and soaring demand for localized AI services in manufacturing and consumer electronics, particularly across China and India. Europe exhibits stable growth, prioritizing deployment models that comply with strict data privacy regulations, thereby driving demand for secure, high-performance on-premise and regional cloud inference capabilities.

Segment trends underscore the enduring importance of specialized hardware, with the accelerator sub-segment (GPUs and ASICs) remaining the largest revenue contributor. While the Cloud deployment model currently holds the majority market share, the Edge deployment segment is set for exponential growth as organizations seek to minimize latency and leverage distributed processing for tasks such as autonomous navigation and industrial quality control. The proliferation of generative AI specifically drives demand across the Application segment, mandating servers equipped with high-bandwidth memory to manage the vast parameter sets of foundation models efficiently, compelling vendors to focus on memory architecture improvements and advanced interconnect technologies like CXL.

AI Impact Analysis on AI Inference Server Market

Common user questions regarding AI's influence on the Inference Server Market often center on hardware scalability concerning increasingly large and complex models. Users frequently ask how existing infrastructure can economically handle the memory demands of Large Language Models (LLMs), which can require hundreds of gigabytes of high-bandwidth memory (HBM) for effective inference. Key concerns include the feasibility of deploying these giant models at the edge and the competitive viability of specialized ASICs versus general-purpose GPUs in terms of flexibility and Total Cost of Ownership (TCO). The market expectation is that AI innovations will continually force specialized server hardware evolution, demanding continuous optimization in memory, interconnects, and cooling to prevent performance bottlenecks and unsustainable energy costs.
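The memory figures mentioned above can be approximated with simple arithmetic: weight storage is roughly the parameter count multiplied by the bytes stored per parameter. The following is a minimal sketch under stated assumptions. The 70-billion-parameter model size is a hypothetical example, not a figure from the report, and the estimate covers weights only, ignoring the KV cache, activations, and runtime overhead.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"FP16": 2.0, "INT8": 1.0, "INT4": 0.5}


def weight_memory_gb(num_params, precision):
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical model size chosen for illustration only.
hypothetical_params = 70e9
for precision in ("FP16", "INT8", "INT4"):
    size_gb = weight_memory_gb(hypothetical_params, precision)
    print(f"{precision}: about {size_gb:.0f} GB of weights")
```

Even this weights-only estimate shows why a large model at 16-bit precision can exceed the memory of a single accelerator, and why lower-precision formats matter for deployment cost.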

The most profound impact of AI advancement, particularly the widespread adoption of transformer-based models and generative AI, is the architectural pivot required in inference server design. These models introduce sequential processing demands and memory access patterns fundamentally different from traditional computer vision tasks, prioritizing memory bandwidth and latency consistency over raw computational FLOPS. This necessitates the integration of high-density HBM and sophisticated caching mechanisms directly onto the server accelerators. Consequently, server manufacturers are focusing on developing highly customized boards and chiplet technologies designed to pool memory resources efficiently, ensuring that even the most complex LLMs can deliver fast and consistent responses to users and applications globally.
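One common back-of-the-envelope way to see why memory bandwidth matters more than FLOPS here: when generating one token at a time for a single request, the accelerator has to read roughly all model weights from memory per token, so bandwidth sets a ceiling on tokens per second. The sketch below illustrates that approximation. The bandwidth and model-size values are hypothetical, and the estimate ignores KV-cache traffic, batching, and sharding across multiple accelerators.

```python
def max_decode_tokens_per_s(weight_bytes, mem_bandwidth_bytes_per_s):
    """Upper bound on single-request decode speed when every token
    requires streaming all weights from memory once."""
    return mem_bandwidth_bytes_per_s / weight_bytes


# Hypothetical values for illustration only.
bandwidth = 3.0e12  # 3 TB/s of memory bandwidth
models = {
    "16-bit weights (140 GB)": 140e9,
    "4-bit weights (35 GB)": 35e9,
}

for label, weight_bytes in models.items():
    ceiling = max_decode_tokens_per_s(weight_bytes, bandwidth)
    print(f"{label}: at most about {ceiling:.1f} tokens/s per request")
```

In this simplified model, adding compute does not raise the ceiling at all; only more bandwidth or fewer bytes per weight does, which is consistent with the design priorities described above.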

This dynamic technological shift directly strengthens the need for specialized inference infrastructure, rather than rendering it obsolete. As AI models become ubiquitous, the requirement for high-efficiency, purpose-built hardware for deployment grows exponentially. The market is witnessing accelerated innovation in software optimization (e.g., advanced model quantization down to INT4) that works in conjunction with specialized hardware to maximize operational performance. This synergy ensures that AI services remain scalable, economical, and responsive, thereby cementing the AI Inference Server as critical infrastructure for the modern digital economy and compelling continuous investment in dedicated acceleration technology.

- Architectural Shift Mandate: LLMs necessitate a shift from FLOPS-centric design to memory bandwidth and low-latency optimization for sequential processing.

- Accelerated ASIC Development: Greater investment in custom silicon (ASICs/NPUs) by hyperscalers to achieve optimal TCO and efficiency for specific generative AI workloads.

- Increased Edge Complexity: AI innovation pushes sophisticated models to the edge, requiring ruggedized, low-power inference servers with enhanced local processing capabilities.

- Software-Hardware Co-optimization: Critical dependence on advanced inference engines and frameworks (quantization, sparsity) to maximize the utility of specialized hardware.

- Focus on Interconnect Technology: Growing adoption of CXL (Compute Express Link) to enable memory pooling and resource disaggregation, crucial for handling fluctuating inference demands efficiently.

DRO & Impact Forces Of AI Inference Server Market

The AI Inference Server Market trajectory is defined by powerful Drivers, structural Restraints, and transformative Opportunities, collectively shaping the competitive and technological landscape. Primary drivers include the massive deployment of generative AI across enterprise services, the critical need for real-time decision-making systems in autonomous vehicles and robotics, and the continuous enhancement of semiconductor performance. These factors collectively push enterprises toward dedicated inference solutions. Conversely, major restraints involve the prohibitive initial capital expenditure associated with purchasing high-end accelerators, significant challenges related to thermal management and energy consumption in large-scale deployments, and the fragmentation of the software ecosystem required to efficiently run models across diverse hardware platforms.

Opportunities for market growth are abundant, particularly driven by the deployment of edge computing solutions enabled by 5G networks, allowing AI to move closer to data sources in smart cities, factories, and healthcare facilities. Further opportunities lie in the commercialization of novel, energy-efficient accelerators (e.g., photonics computing) and the establishment of standardized, open-source inference frameworks that reduce implementation complexity for small-to-medium enterprises. The demand for robust security features integrated into the inference hardware, protecting proprietary AI models from theft or tampering, also presents a substantial differentiation opportunity for specialized vendors.

The Impact Forces exert strong external pressure on market participants. Technological obsolescence is a major factor, driven by yearly advances in chip architecture (Moore's Law and beyond), forcing organizations to continually upgrade infrastructure to remain competitive in performance metrics. Economic forces, particularly global semiconductor shortages and geopolitical instability affecting supply chains, introduce cost volatility and deployment delays. Lastly, regulatory forces, specifically increasing mandates for energy efficiency (Green IT initiatives) and data sovereignty requirements, influence regional deployment strategies, compelling vendors to develop servers optimized for specific geopolitical compliance standards and lower Power Usage Effectiveness (PUE) metrics.

- Drivers:

- Widespread deployment and commercialization of Large Language Models (LLMs) and generative AI.

- Increasing demand for low-latency, real-time AI processing in mission-critical applications (e.g., autonomous driving, fraud detection).

- Proliferation of IoT and Edge devices demanding localized inference processing capabilities.

- Continuous performance improvements and cost reductions in specialized accelerator hardware (GPUs, ASICs).

- Restraints:

- High initial procurement costs and complexity associated with specialized server infrastructure.

- Significant operational expenditure related to power consumption and demanding thermal management requirements.

- Lack of standardized software interoperability across various specialized accelerator platforms.

- Supply chain constraints and long lead times for high-end semiconductor components.

- Opportunities:

- Development of highly efficient inference methods (e.g., 4-bit quantization, hardware sparsity acceleration).

- Expansion into new vertical markets (e.g., industrial automation, smart agriculture) leveraging Edge AI.

- Adoption of composable infrastructure (CXL) to improve resource utilization and flexibility in data centers.

- Focus on energy-efficient server designs to meet sustainability goals and reduce operational costs.

- Impact Forces:

- Technological Obsolescence: Rapid generational evolution of semiconductor technology requiring frequent infrastructure refreshes.

- Geopolitical Risks: Impact of trade tensions and regional policies on global semiconductor supply and pricing.

- Environmental Regulations: Increasing pressure from governments and stakeholders to reduce the carbon footprint of data centers.

Segmentation Analysis

The segmentation analysis dissects the AI Inference Server market based on core technology components, deployment methodologies, processor types, and specific end-use applications, offering a nuanced view of market dynamics and targeted growth areas. Segmentation by Component distinguishes between the essential hardware infrastructure, comprising the accelerators, CPUs, and high-speed memory systems, and the enabling software layer, which includes inference optimization engines and management orchestration tools. The prominence of hardware is undeniable, given the proprietary nature and high value of specialized silicon necessary to handle modern AI computational loads efficiently.

Further categorization by Deployment Model highlights the dual strategy of the industry: catering to the vast scalability and elastic compute demands of Cloud Service Providers, versus addressing the latency-sensitive and privacy-focused requirements of Edge/On-Premise deployments. While cloud remains dominant in sheer computational volume, the Edge segment is positioned for rapid acceleration driven by 5G rollout and the imperative for real-time local processing. The segmentation by Processor Type reveals the fierce competition between flexible GPUs, highly efficient ASICs (like TPUs), and customized FPGAs, each optimized for different stages of the AI lifecycle and specific latency/throughput objectives.

Analyzing the segmentation by End-Use Industry underscores the tailored nature of AI infrastructure requirements. For instance, the Healthcare sector demands high-reliability servers for diagnostic imaging and regulatory compliance, whereas the Telecommunications industry focuses on density and low power consumption for network function virtualization (NFV) at base stations. This detailed breakdown allows vendors to align their product development—from system integration to software optimization—with the precise operational priorities and computational bottlenecks experienced by major vertical customers, ensuring maximum market relevance and penetration across diverse global economies.

- By Component:

- Hardware (AI Accelerators, CPUs, Memory, Storage)

- Software (Inference Engines, Optimization Frameworks, Management Platforms and APIs)

- By Processor Type:

- Graphics Processing Units (GPUs)

- Application-Specific Integrated Circuits (ASICs)

- Field-Programmable Gate Arrays (FPGAs)

- Central Processing Units (CPUs)

- By Deployment Model:

- Cloud (Public and Private Cloud)

- Edge/On-Premise (Data Center, Local Server, Device-Specific)

- By Application:

- Computer Vision (Image/Video Analysis, Surveillance)

- Natural Language Processing (NLP)

- Speech Recognition and Synthesis

- Recommendation Engines and Personalization

- Predictive Maintenance and Industrial IoT

- Generative AI (Content Creation, LLMs)

- By End-Use Industry:

- BFSI (Banking, Financial Services, and Insurance)

- Healthcare and Life Sciences

- Retail and E-commerce

- Automotive and Transportation (Autonomous Vehicles)

- Telecommunications

- Manufacturing and Industrial Automation

- Government and Defense

Value Chain Analysis For AI Inference Server Market

The AI Inference Server value chain commences with the upstream segment, dominated by the highly concentrated semiconductor design and manufacturing industry. Key activities here include the intricate design of specialized silicon (AI accelerators, high-performance CPUs, and high-bandwidth memory chips) by entities such as NVIDIA, Intel, and AMD, alongside foundry operations (TSMC, Samsung). The specialized nature of these components means that technological leadership and control over manufacturing capacity at this stage dictate performance potential and cost structures across the entire downstream market, establishing significant competitive barriers to entry.

The midstream phase involves Original Equipment Manufacturers (OEMs) and Original Design Manufacturers (ODMs) like Dell, HPE, and Inspur. These companies integrate the specialized silicon, memory, networking, and cooling systems into optimized, ready-to-deploy server racks. System integration is crucial, focusing on thermal engineering and ensuring high-speed interconnectivity (e.g., NVLink, PCIe Gen5) to maximize inference throughput and power efficiency. Distribution channels then move the completed servers to the end-users, primarily utilizing direct sales channels for major hyperscalers, ensuring highly customized and volume-optimized deliveries, while relying on indirect channels (VARs, system integrators) to provide tailored deployment and maintenance support to diverse enterprise clients.

The downstream segment centers on the consumers of AI compute power, including large Cloud Service Providers (CSPs) managing public AIaaS infrastructure, and enterprise IT departments deploying private, on-premise solutions. This final stage involves deployment, software optimization (using specialized inference engines), resource orchestration, and ongoing infrastructure maintenance. The direct distribution model facilitates quicker iteration based on CSP needs, crucial for the highly competitive cloud market. Conversely, the reliance on indirect channels for enterprise clients ensures that customers receive necessary professional services for integrating complex AI infrastructure into existing, often heterogeneous, IT environments, thereby enabling wider market adoption.

AI Inference Server Market Potential Customers

The primary cohort of potential customers for high-performance AI Inference Servers is constituted by Hyperscale Cloud Service Providers (CSPs). These technological giants invest billions annually to procure vast fleets of highly specialized servers to power their global AI services, including hosting Large Language Models, offering machine learning platforms, and supporting generalized AI workloads for their global enterprise clientele. Their purchasing decisions are primarily driven by metrics related to performance per dollar, power efficiency (performance per watt), and density, often leading them to co-develop or exclusively utilize custom silicon (ASICs) to gain a competitive edge in the highly elastic AI computing market.

The second major customer segment includes telecommunications operators and network providers. As 5G infrastructure expands, telcos require robust, low-latency edge inference servers deployed at central offices and cell sites. These servers are essential for network traffic optimization, advanced subscriber analytics, and enabling ultra-low-latency applications like real-time augmented reality and industrial internet applications, demanding servers that are both ruggedized and highly power efficient for non-traditional data center environments.

Furthermore, organizations across critical vertical industries represent significant purchasing power. In the Automotive and Transportation sector, manufacturers and autonomous vehicle developers require dedicated inference servers for simulation and real-time decision-making in fleet vehicles and testing labs. The Healthcare sector mandates high-throughput inference for rapid diagnostic imaging analysis and genomic sequencing, often requiring on-premise solutions due to stringent data privacy regulations. Financial institutions (BFSI) utilize these servers for real-time risk assessment, instantaneous fraud detection, and high-frequency trading algorithms, where the tolerance for latency is exceptionally low, emphasizing the need for computational reliability and speed.

| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | USD 10.5 Billion |
| Market Forecast in 2033 | USD 50.0 Billion |
| Growth Rate | 25.0% CAGR |
| Historical Year | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Year | 2026 - 2033 |
| DRO & Impact Forces | |
| Segments Covered | |
| Key Companies Covered | NVIDIA Corporation, Intel Corporation, Advanced Micro Devices (AMD), Google LLC (Tensor Processing Unit), IBM Corporation, Dell Technologies, Hewlett Packard Enterprise (HPE), Cisco Systems, Microsoft Corporation, Amazon Web Services (AWS), Baidu, Inspur Group, T-Systems International GmbH, SambaNova Systems, Cerebras Systems, Groq, Graphcore, Fujitsu, Lenovo, Oracle Corporation |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |
| Enquiry Before Buy | Have specific requirements? Send us your enquiry before purchase to get customized research options. |

AI Inference Server Market Key Technology Landscape

The technological landscape of the AI Inference Server market is fundamentally defined by specialized computational acceleration designed to maximize efficiency and minimize latency for deep learning workloads. The core technology remains high-performance Graphics Processing Units (GPUs), notably architectures from NVIDIA and AMD, which utilize massively parallel processing capabilities combined with extremely high-bandwidth memory (HBM) to address the immense memory footprints and tensor operations required by contemporary AI models. The continuous development of these GPUs, integrating features specifically for inference (like sparsity support and specialized tensor cores), drives performance scaling across cloud environments.

Parallel to GPUs, Application-Specific Integrated Circuits (ASICs) represent another crucial technological pillar. Custom-designed ASICs, such as Google’s Tensor Processing Units (TPUs) and specialized chips from startups, offer superior energy efficiency and cost effectiveness when deployed at scale for specific, repetitive inference tasks. While lacking the flexibility of GPUs, ASICs excel in delivering predictable, high-throughput performance for major proprietary workloads, compelling large organizations to invest heavily in in-house silicon development. Field-Programmable Gate Arrays (FPGAs) also retain relevance, providing a unique blend of hardware customization and flexibility, allowing for rapid adaptation to evolving AI model structures in scenarios where power constraints or highly custom data paths are prioritized.

Crucial enabling technologies beyond the silicon include advanced server architectures and high-speed interconnections. Technologies like Compute Express Link (CXL) are revolutionizing server design by allowing dynamic memory sharing and resource pooling across multiple CPUs and accelerators, optimizing resource allocation for fluctuating inference workloads and combating memory bottlenecks associated with LLMs. On the software side, the proliferation of optimized inference runtimes (e.g., OpenVINO, TensorRT) and precision reduction techniques (quantization down to INT4) are essential technological tools, enabling servers to perform more inference operations per watt and effectively extending the performance envelope of existing hardware infrastructure.
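To make the precision-reduction idea concrete, the sketch below shows symmetric per-tensor quantization, one of the simplest schemes, in plain Python. It is an illustration of the general technique only and is not how OpenVINO or TensorRT implement it; the function names and sample weights are hypothetical.

```python
def quantize(values, bits=4):
    """Map floats to signed integers in [-qmax, qmax] using a single scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for INT4, 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer levels."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration only.
weights = [0.7, -0.35, 0.1, 0.0]
q4, scale4 = quantize(weights, bits=4)
restored = dequantize(q4, scale4)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print("INT4 levels:", q4)
print(f"Largest rounding error: {worst_error:.3f}")
```

Each weight is stored in 4 bits instead of 16 or 32, at the cost of a rounding error of at most half the scale per value. Production runtimes typically refine this with per-channel or per-group scales and calibration data.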

Regional Highlights

- North America:

- Acts as the global epicenter for AI innovation and market demand, attributed to the presence of all major hyperscale cloud service providers (CSPs) and leading AI research institutions.

- Characterized by exceptionally high investment in next-generation data center infrastructure and advanced accelerator procurement, particularly driven by the demand for large-scale generative AI deployment.

- The region leads in the adoption of complex liquid cooling technologies and advanced interconnection standards (CXL) necessary for ultra-dense, high-wattage inference server installations.

- Asia Pacific (APAC):

- Forecasted to demonstrate the fastest CAGR, propelled by robust governmental support for digitalization and AI development, notably in emerging tech hubs like China, South Korea, and India.

- The region’s growth is fueled by massive domestic demand for consumer AI applications, rapid urbanization necessitating smart city infrastructure, and the widespread rollout of 5G, driving edge inference requirements.

- Local manufacturers and cloud providers are increasingly investing in proprietary AI silicon to address regional needs and achieve technological self-sufficiency in the face of geopolitical pressures.

- Europe:

- Exhibits strong, steady market growth driven primarily by industrial automation (Industry 4.0) and healthcare advancements, relying heavily on computer vision and predictive maintenance applications.

- The regulatory landscape, specifically the enforcement of GDPR and anticipated AI Act stipulations, favors on-premise, localized inference solutions, emphasizing security and data sovereignty in procurement decisions.

- High focus on energy efficiency and sustainable computing ensures that European deployments prioritize servers with superior performance per watt and efficient cooling mechanisms.

- Latin America (LATAM):

- Represents an expanding market with increasing investment in cloud infrastructure and enterprise digitalization, particularly in the finance and retail sectors for tasks like personalized customer service and fraud detection.

- Market adoption remains centralized in major economic hubs like Brazil, Mexico, and Argentina, driven by the need to modernize existing IT infrastructure with scalable and accessible cloud-based AI services.

- Middle East and Africa (MEA):

- Growth is spearheaded by ambitious national visions and strategic government initiatives (e.g., UAE and Saudi Arabia) aimed at becoming global data and AI centers.

- Significant capital is being allocated toward building state-of-the-art hyperscale data centers, establishing localized AI infrastructure for smart government services, national security, and optimizing the critical energy sector.

Top Key Players

The market research report includes a detailed profile of leading stakeholders in the AI Inference Server Market.

- NVIDIA Corporation

- Intel Corporation

- Advanced Micro Devices (AMD)

- Google LLC (Tensor Processing Unit)

- IBM Corporation

- Dell Technologies

- Hewlett Packard Enterprise (HPE)

- Cisco Systems

- Microsoft Corporation

- Amazon Web Services (AWS)

- Baidu

- Inspur Group

- T-Systems International GmbH

- SambaNova Systems

- Cerebras Systems

- Groq

- Graphcore

- Fujitsu

- Lenovo

- Oracle Corporation

Frequently Asked Questions

What is the primary difference between AI training servers and AI inference servers?

AI training servers are optimized for massive parallel computation (often FP32 or mixed FP16/BF16 precision) over extended durations to build and refine models, focusing on maximizing total throughput. AI inference servers are optimized for deploying trained models using high-speed, low-latency processing (often INT8/INT4 precision) in production environments, prioritizing fast response times and superior energy efficiency.

Which component is driving the most revenue within the AI Inference Server market?

The Hardware component, specifically high-performance AI accelerators such as GPUs and dedicated ASICs, constitutes the dominant revenue source. The continuous increase in complexity and size of AI models, particularly LLMs, necessitates substantial investment in specialized silicon featuring high-bandwidth memory (HBM).

How is the rise of Large Language Models (LLMs) affecting inference server design?

LLMs are fundamentally shifting design focus toward maximizing memory bandwidth and capacity, rather than just raw computational power (TFLOPS). Servers must integrate extensive HBM and utilize advanced interconnects like CXL to pool resources and effectively manage the vast memory required for low-latency, real-time token generation and sequential processing.

What is the key advantage of using Edge AI Inference servers?

The primary advantage of Edge AI Inference servers is the realization of ultra-low latency, as processing occurs immediately at the data source (e.g., factory floor, autonomous vehicle). This reduces reliance on network bandwidth, improves real-time decision-making capabilities, and ensures compliance with data sovereignty regulations.

What are the major restraints on market growth?

Major restraints include the significant initial capital expenditure (CapEx) associated with specialized AI accelerators, high operational costs due to power consumption and cooling requirements in data centers, and the technical complexity involved in optimizing diverse AI models across a fragmented landscape of specialized hardware and software frameworks.


Research Methodology

Market Research Update offers technology-driven solutions and integrates them fully into every step of the research process. We use diverse assets to produce the best results for our clients. The success of a research project depends entirely on the research process the company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update maintains consistency across its research reports and provides forecast analysis across a range of geographies and coverage areas. The research teams carry out primary and secondary research to design and implement the data collection procedure. The research team then analyzes data on the latest trends and major issues for each industry and country. This helps to determine how the market is likely to develop in the future.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data via different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured from the earlier step. It also includes the analysis of data differences observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client's requirements.

- Market Formulation

This step places data points in the appropriate market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-expert review of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. Validation via an intricately designed procedure helps us finalize the data points to be used for final calculations.
