Automated Content Moderation Market Size, By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 442470 | Date : Feb, 2026 | Pages : 246 | Region : Global | Publisher : MRU

Automated Content Moderation Market Size

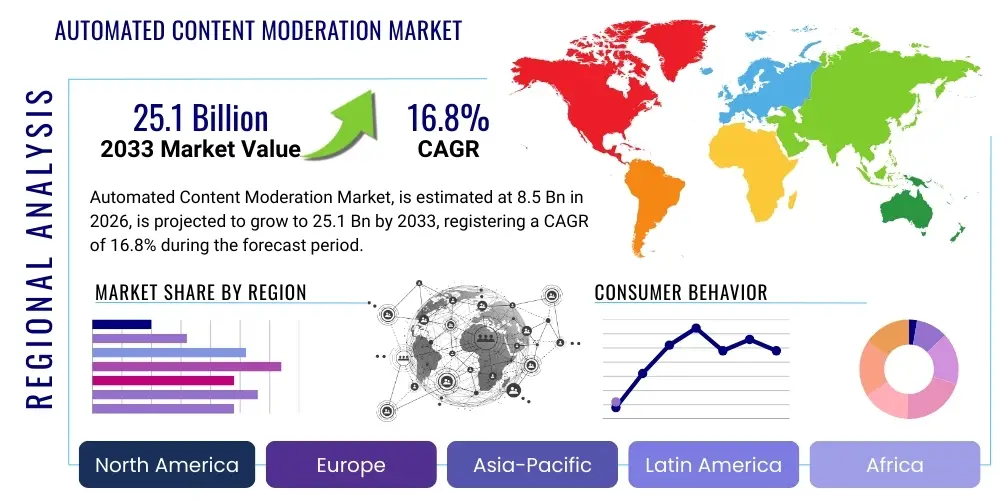

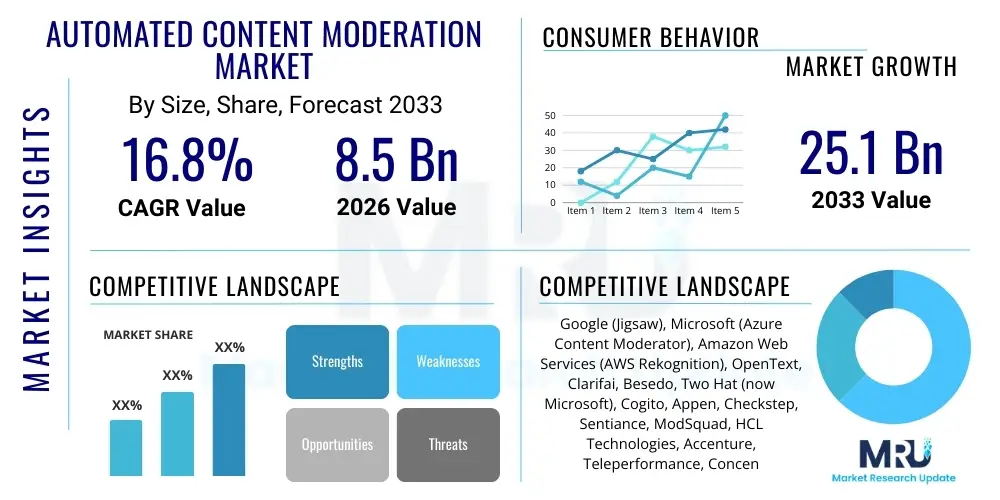

The Automated Content Moderation Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 16.8% between 2026 and 2033. The market is estimated at $8.5 Billion in 2026 and is projected to reach $25.1 Billion by the end of the forecast period in 2033. This robust expansion is primarily driven by the exponential growth of user-generated content across digital platforms and the increasing necessity for platforms to comply with stringent global regulatory frameworks aimed at combating misinformation, hate speech, and illegal content. The scaling challenges faced by human moderation teams necessitate the adoption of AI-powered solutions to maintain platform integrity and brand safety.
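The headline figures above can be cross-checked with the standard compound growth formula. The sketch below is illustrative only: it assumes seven compounding periods (2026 to 2033), and the function names are hypothetical rather than taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.168 means 16.8%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in billions of US dollars.
START_2026 = 8.5
END_2033 = 25.1
QUOTED_CAGR = 0.168
YEARS = 2033 - 2026  # assumption: seven compounding periods

implied_rate = cagr(START_2026, END_2033, YEARS)
projected_2033 = project(START_2026, QUOTED_CAGR, YEARS)

print(f"Implied CAGR from the two endpoints: {implied_rate:.1%}")
print(f"2033 value implied by a 16.8% CAGR: ${projected_2033:.1f}B")
```

Under that assumption the implied rate and the projected 2033 value both land close to the quoted 16.8% and $25.1 Billion, with the small differences attributable to rounding in the published figures.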

Automated Content Moderation Market Introduction

The Automated Content Moderation Market encompasses software, solutions, and services designed to identify, filter, and manage inappropriate, harmful, or policy-violating content automatically using technologies like Artificial Intelligence (AI), Machine Learning (ML), and Natural Language Processing (NLP). This technology is critical for maintaining healthy, safe, and compliant digital ecosystems across various platforms, including social media, e-commerce sites, gaming forums, and financial portals. The core product offering involves sophisticated algorithms capable of analyzing text, images, video, and audio in real-time or near real-time, significantly reducing the dependence on manual review processes and enhancing response speed to emerging threats.

Major applications of automated content moderation span consumer-facing digital platforms where user interaction is high, particularly in areas like public forum management, brand reputation protection, and regulatory adherence. Key benefits include dramatically increased efficiency in processing massive volumes of data, improved consistency in policy enforcement, and reduced psychological burden on human moderators. Furthermore, automation enables global platforms to enforce local policies and language-specific rules without requiring proportionate scaling of human resources in every region.

Driving factors propelling this market include the unprecedented surge in user-generated content volume, particularly video and live streams, which are complex to moderate manually. Simultaneously, governments and regulatory bodies worldwide, such as the European Union with its Digital Services Act (DSA) and national legislatures enacting online safety laws, are requiring platforms to take proactive measures against harmful content, creating non-negotiable, compliance-driven demand for advanced automated tools. The continuing advance of deep learning models, particularly in identifying nuance, sarcasm, and malicious intent, further enhances the viability and reliability of automated systems.

Automated Content Moderation Market Executive Summary

The Automated Content Moderation Market is experiencing rapid structural evolution, fundamentally driven by technological advancements in neural networks and regulatory pressure across mature economies. Business trends highlight a pronounced shift towards comprehensive, end-to-end moderation suites that integrate multiple modalities (visual, text, audio) within a single platform, moving away from fragmented, specialized tools. Service providers are increasingly offering highly customizable ML models that can be fine-tuned to specific brand guidelines or community standards, emphasizing precision and context awareness over basic keyword filtering. Furthermore, the integration of automation with human-in-the-loop review systems (Hybrid Moderation) is emerging as a crucial business model to handle edge cases and reduce false positives, ensuring operational quality while maintaining scalability.

Regional trends indicate that North America and Europe currently dominate the market due to the presence of major technological giants, robust data infrastructure, and pioneering regulatory mandates, such as the aforementioned DSA in Europe. However, the Asia Pacific (APAC) region is projected to exhibit the highest growth rate, fueled by massive internet penetration in countries like India, China, and Southeast Asia, leading to explosive growth in regional social media platforms, gaming communities, and local e-commerce sites that require culturally and linguistically sensitive moderation solutions. Latin America and MEA are also showing accelerating adoption as digital transformation initiatives gain momentum and local platforms seek global standards of safety.

Segment trends reveal that the Solutions segment, particularly those leveraging deep learning for video and live content analysis, holds the largest market share and is expected to maintain its dominance. Within deployment, Cloud-based solutions are overwhelmingly preferred due to their scalability, cost-efficiency, and ease of integration with existing digital infrastructure, especially by startups and medium-sized platforms. Segmentation by application highlights Social Media and E-commerce as the primary consumers, but the Gaming sector is rapidly gaining prominence due to the immense scale of real-time interactions and the necessity to enforce strict anti-toxicity policies in virtual environments, positioning it as a key high-growth niche.

AI Impact Analysis on Automated Content Moderation Market

User queries regarding AI’s impact on content moderation frequently revolve around the accuracy of advanced models, the risk of inherent algorithmic bias leading to unfair policy enforcement, and the capability of AI to handle nuanced, rapidly evolving forms of harmful content, such as deepfakes and coded language (dog whistling). There is significant interest in understanding how Generative AI (GenAI) contributes to both the creation of sophisticated malicious content and the defense mechanisms used to combat it. Users also question the economic viability and scalability of AI systems, particularly concerning the deployment costs versus the efficiency gains when compared to human teams. A key theme is the future role of human moderators—will AI replace them entirely, or will they evolve into highly skilled overseers and policy strategists? Expectations are high that AI will eventually eliminate routine, high-volume tasks, allowing human intervention only for complex, culturally specific, or legal gray areas.

The transition to advanced AI models, specifically those utilizing transformers and large foundation models, is fundamentally redefining the market landscape. These technologies enable higher-fidelity detection across all content formats. Computer vision models are now highly effective at identifying specific visual markers of abuse, including child exploitation material and graphic violence, while sophisticated NLP models can grasp context, sentiment, and cross-lingual threats with unprecedented accuracy. The integration of ethical AI frameworks is also becoming paramount, focusing on auditing models for fairness and mitigating algorithmic discrimination against specific demographic groups, a major user concern.

Moreover, the battle between AI-driven content generation and AI-driven content moderation is escalating. As bad actors utilize GenAI to mass-produce deceptive content (e.g., synthetic text or deepfake videos), moderation technology must advance even faster, employing countermeasures like digital watermarking detection, anomaly detection, and synthetic media forensic analysis. This technological arms race guarantees continuous innovation and sustained investment in the automated moderation sector, positioning AI not just as an enhancer of efficiency but as an essential and continuous defense mechanism for digital platforms globally.

- Enhanced scalability: AI allows platforms to process billions of content pieces daily without linear resource increase.

- Multimodal detection: Advanced ML models enable simultaneous analysis of text, image, video, and audio content for policy violations.

- Real-time enforcement: AI facilitates instantaneous content filtering, particularly vital for live streaming platforms.

- Reduced human psychological load: Automation handles the majority of exposure to highly disturbing content, protecting human moderators.

- Algorithmic bias mitigation: Focus on developing ethical AI tools to ensure fair and equitable policy application across diverse user bases.

- Defense against GenAI threats: Deployment of forensic AI tools to detect deepfakes, synthetic text, and malicious AI-generated content.

- Predictive moderation capabilities: Use of AI to identify trending threats and potential viral content surges before they peak.

DRO & Impact Forces Of Automated Content Moderation Market

The Automated Content Moderation Market is heavily influenced by a confluence of powerful dynamics that dictate its growth trajectory and operational challenges. Drivers (D) are rooted in regulatory mandates, explosive content growth, and the necessity of brand safety, pushing platforms toward automated solutions. Restraints (R) include the high initial cost of implementing sophisticated ML systems, persistent concerns regarding algorithmic accuracy and bias, and the complex challenge of moderating nuanced, culturally specific, and rapidly evolving forms of malicious content. Opportunities (O) arise from the expanding adoption of Web3 technologies, the rise of niche social platforms, and the potential for leveraging advanced multimodal AI for comprehensive content understanding. These factors collectively create significant impact forces, where regulatory mandates often override technological limitations, ensuring continuous market investment.

Key drivers include the global regulatory crackdown on misinformation and illegal content, forcing rapid expenditure on compliance technologies. The sheer volume of content generated, particularly in emerging markets, has made purely human moderation economically and logistically infeasible, creating an inescapable reliance on automation. Furthermore, corporate accountability and the tangible financial damage caused by association with harmful content (brand boycotts, advertiser withdrawals) provide a strong, continuous incentive for robust automated safety protocols. The necessity for real-time moderation, especially in high-velocity environments like online gaming and live commerce, further solidifies the demand for high-speed automated systems.

Conversely, restraints such as the "garbage in, garbage out" challenge—where biased or insufficient training data leads to flawed models—remain a critical operational hurdle, potentially resulting in wrongful platform bans and censorship claims. The complexity of natural language, the use of coded slang, and the difficulty AI has in understanding deep cultural context contribute to ongoing false positive and false negative rates, necessitating costly human oversight. However, the biggest opportunity lies in developing highly localized, language-specific, and culturally aware AI models, particularly for the high-growth APAC and LATAM regions, coupled with the integration of blockchain technology to potentially aid in content provenance verification and decentralized trust systems.

Segmentation Analysis

The Automated Content Moderation Market is systematically segmented across various dimensions including Component, Deployment Mode, Moderation Type, Application, and Vertical. This segmentation provides a granular view of market adoption, identifying key investment areas and tailored solution requirements across the digital ecosystem. The market structure emphasizes the integration capabilities of moderation solutions and their adaptability across different organizational sizes and policy complexities. Understanding these segments is crucial for vendors to tailor their offerings, such as prioritizing SaaS models for rapid deployment or focusing on specialized deep learning solutions for video content where regulatory stakes are highest. The dominance of specific technologies within these segments reflects the industry's focus on efficiency and precision.

By Component, the market is split between Solutions (the software platform itself, including AI/ML engines) and Services (consulting, integration, training, and ongoing maintenance), with solutions commanding the larger share but services experiencing accelerated growth due to the increasing need for model customization and ethical auditing. Deployment analysis clearly favors Cloud deployment owing to scalability demands, yet on-premise solutions remain relevant for highly regulated sectors like finance and healthcare that require strict data sovereignty. The Application segmentation highlights the concentration of content flow, with Social Media being the most mature segment, but Gaming emerging as the fastest-growing application due to its distinct real-time moderation requirements and massive global user base engagement.

- By Component:

  - Solution (Platform, AI/ML Engines, API integration)

  - Services (Professional Services, Managed Services, Policy Consulting)

- By Deployment Mode:

  - Cloud

  - On-Premise (Private Cloud, Hosted Infrastructure)

- By Moderation Type:

  - Pre-Moderation (Filtering content before publication)

  - Post-Moderation (Reviewing content after publication, flagging for removal)

  - Real-time Moderation (Live streaming, chat interactions)

- By Application:

  - Social Media Platforms

  - E-commerce & Marketplaces

  - Online Gaming Platforms

  - Crowdfunding & Peer-to-Peer Networks

  - Search Engines

- By Vertical:

  - Media & Entertainment

  - IT & Telecom

  - BFSI (Banking, Financial Services, and Insurance)

  - Healthcare & Life Sciences

  - Government & Public Sector

Value Chain Analysis For Automated Content Moderation Market

The value chain of the Automated Content Moderation Market begins with the upstream suppliers focused on data acquisition, labeling, and specialized algorithm development, which form the foundational intelligence of the moderation systems. This includes providers of large-scale, ethically sourced datasets crucial for training robust AI models capable of high precision. Midstream activities involve the core technology vendors who develop the sophisticated platforms, integrating AI/ML modules, NLP capabilities, and computer vision technologies into deployable software solutions. These vendors manage continuous model iteration, ensuring that the systems adapt rapidly to emerging content threats and evolving regulatory demands, which is a significant value-add in this dynamic market.

Downstream analysis focuses on integration, implementation, and the provision of managed services to end-users. System integrators and professional service providers play a pivotal role in customizing generalized platforms to meet specific organizational policies, integrating them into complex existing IT infrastructures, and establishing hybrid moderation workflows (human-in-the-loop systems). Distribution channels are predominantly direct, involving enterprise sales teams engaging large social media and gaming platforms, particularly for customized, high-CAPEX solutions. However, indirect channels, utilizing cloud marketplaces (AWS, Azure) and specialized security resellers, are gaining importance, especially for smaller platforms seeking readily available, scalable Software-as-a-Service (SaaS) offerings with minimal integration overhead.

The structure emphasizes that value generation moves from foundational technological innovation (AI algorithms) to operational customization (integration and policy tuning). Direct engagement with large technology platforms ensures maximum control over product deployment and feedback loops, allowing for rapid model improvement based on real-world content volumes and threat vectors. Indirect channels provide the necessary market penetration into the mid-market and startup ecosystem, which require fast, standardized, and cost-effective moderation capabilities delivered primarily through robust API integration and cloud subscription models, thereby diversifying vendor revenue streams and accelerating overall market adoption.

Automated Content Moderation Market Potential Customers

The primary customers for Automated Content Moderation solutions are digital platforms and enterprises that host, facilitate, or are responsible for substantial volumes of user-generated content (UGC) and must adhere to both internal safety policies and external governmental regulations. The largest segment of end-users includes Tier 1 and Tier 2 social media networks, Massively Multiplayer Online (MMO) gaming platforms, and global e-commerce retailers managing millions of product listings and user reviews. These entities face immense reputational risk and legal liability stemming from unmoderated harmful content, making investment in high-throughput automation non-negotiable for business continuity and advertiser retention.

A rapidly expanding customer base resides within highly regulated sectors that, while not primarily UGC platforms, utilize communication tools or managed forums that require compliance-driven moderation. This includes BFSI institutions needing to monitor employee communications and public-facing financial discussions for regulatory violations (e.g., fraud, market manipulation), and Healthcare providers moderating patient forums to ensure privacy (HIPAA compliance) and prevent the spread of medical misinformation. Additionally, enterprises utilizing collaboration tools internally for geographically dispersed teams represent a growing niche, seeking moderation solutions to prevent harassment, ensure professional conduct, and maintain corporate policy adherence.

The core buyer personas range from Chief Trust & Safety Officers (CTSOs) and Chief Information Security Officers (CISOs) in large tech companies, focused on regulatory risk and platform integrity, to product managers in mid-sized enterprises who prioritize API-first integration and operational scalability. Decision-making is increasingly influenced by the solution's ability to handle multimodal content (especially video), demonstrate transparent policy enforcement capabilities, and offer measurable metrics related to false positive rates, reflecting a market shift towards quality and accountability in automated enforcement.

| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | $8.5 Billion |
| Market Forecast in 2033 | $25.1 Billion |
| Growth Rate | CAGR 16.8% |
| Historical Year | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Year | 2026 - 2033 |
| DRO & Impact Forces | |
| Segments Covered | |
| Key Companies Covered | Google (Jigsaw), Microsoft (Azure Content Moderator), Amazon Web Services (AWS Rekognition), OpenText, Clarifai, Besedo, Two Hat (now Microsoft), Cogito, Appen, Checkstep, Sentiance, ModSquad, HCL Technologies, Accenture, Teleperformance, Concentrix, Genpact, Toptal, Sift Science, Hive. |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |


Automated Content Moderation Market Key Technology Landscape

The technological core of the Automated Content Moderation Market is defined by the synergistic application of advanced Artificial Intelligence disciplines, primarily aimed at achieving high accuracy and low latency across diverse content formats. Key technologies include Deep Learning (DL) models, specifically Convolutional Neural Networks (CNNs) for image and video analysis, which excel at identifying visual cues of violence, nudity, and brand logos. Furthermore, advanced Natural Language Processing (NLP), incorporating large language models (LLMs) and transformer architectures, is crucial for detecting subtle forms of hate speech, bullying, and complex textual misinformation, moving beyond simple keyword matching to understand semantic meaning and context.

A critical component is Multimodal AI, which integrates outputs from multiple detection engines (e.g., analyzing the visual content of a video alongside its transcription and associated metadata) to make a more confident and contextually aware moderation decision, thereby significantly reducing false positives. Real-time streaming analysis technology is also essential, leveraging edge computing and high-throughput processing pipelines to scan live video feeds and chat logs with minimal delay, which is vital for live commerce and competitive gaming platforms. Continuous advancements in data labeling and ethical auditing tools, often facilitated by explainable AI (XAI), are ensuring that the automated decisions are transparent and align with regulatory expectations, boosting platform accountability.

Beyond core detection, ancillary technologies are shaping operational efficiency. Federated Learning is being explored to allow models to learn from sensitive content across different jurisdictions without centralized data aggregation, addressing privacy concerns. Furthermore, the use of blockchain for establishing immutable content provenance and tracking policy violations is gaining traction, particularly for verifying the authenticity of user-generated media and combating the distribution of pirated or synthetic malicious content. These integrated technological stacks empower platforms to deploy comprehensive, adaptive, and scalable content safety mechanisms essential for navigating the complex digital landscape of the 21st century.

Regional Highlights

The geographical distribution of the Automated Content Moderation Market reflects underlying differences in technological maturity, regulatory environments, and internet penetration rates. North America holds the largest market share, predominantly driven by the headquarters of the world's largest social media, tech, and gaming companies. The region is characterized by high adoption rates of cutting-edge AI technologies and significant capital expenditure on sophisticated moderation tools necessary to maintain global platform standards and address robust domestic concerns regarding online safety and misinformation. The market here is mature, focusing on advanced solutions like deepfake detection and nuanced speech moderation.

Europe represents the second-largest market and acts as a pivotal force due to its proactive regulatory approach, notably the implementation of the Digital Services Act (DSA) and stringent GDPR mandates. These regulations necessitate substantial investment in automated tools capable of identifying and removing illegal or harmful content swiftly, imposing strict transparency requirements on platforms regarding their moderation processes. This regulatory push ensures consistent, high demand for compliance-focused moderation solutions, particularly those offering advanced auditing capabilities and language coverage across multiple European Union member states, driving innovation in ethical AI frameworks.

Asia Pacific (APAC) is forecast to be the fastest-growing region throughout the forecast period. This explosive growth is attributed to surging internet adoption, the proliferation of localized social and e-commerce platforms, and a massive base of digital content consumers, particularly in China, India, and Southeast Asia. While regulatory frameworks vary significantly across APAC, the sheer volume and linguistic diversity of UGC necessitate automated, language-specific moderation solutions. Investment is heavily focused on optimizing models for local dialects, understanding cultural nuances, and scaling infrastructure rapidly to manage the astronomical volume growth in video and live interaction platforms.

- North America: Dominant market share; driven by major tech companies, high R&D investment, and focus on deep learning for complex threat mitigation.

- Europe: High growth fueled by aggressive regulatory compliance mandates (DSA, GDPR); strong demand for language-specific and auditable moderation systems.

- Asia Pacific (APAC): Fastest-growing region; fueled by high internet penetration, linguistic diversity, and localized platform growth, requiring culturally sensitive AI.

- Latin America (LATAM): Emerging market characterized by increasing smartphone penetration and e-commerce growth; primary focus on tackling spam, fraud, and basic hate speech.

- Middle East and Africa (MEA): Gradually increasing adoption, primarily driven by IT modernization projects and government initiatives focusing on digital safety and internal security protocols.

Top Key Players

The market research report includes a detailed profile of leading stakeholders in the Automated Content Moderation Market.

- Google (Jigsaw)

- Microsoft (Azure Content Moderator & Two Hat)

- Amazon Web Services (AWS Rekognition)

- OpenText

- Clarifai

- Besedo

- Cogito

- Appen

- Checkstep

- Sentiance

- ModSquad

- HCL Technologies

- Accenture

- Teleperformance

- Concentrix

- Genpact

- Toptal

- Sift Science

- Hive

- PimEyes

Frequently Asked Questions

What primary technologies drive automated content moderation?

The automated content moderation market is primarily driven by Artificial Intelligence (AI) technologies, specifically Deep Learning (DL), Natural Language Processing (NLP) utilizing Large Language Models (LLMs), and Computer Vision (CV). These technologies enable accurate detection and classification of harmful content across text, image, video, and audio modalities, often operating in real-time environments.

How does the Digital Services Act (DSA) affect market growth?

The European Union's Digital Services Act (DSA) significantly boosts market growth by requiring very large online platforms (VLOPs) to implement robust, transparent, and swift content moderation mechanisms, including advanced automated tools. This compliance obligation pushes platforms to increase investment in sophisticated moderation software and ethical AI solutions.

What are the main risks associated with using AI for content moderation?

The main risks include algorithmic bias, where models unfairly target specific demographic or linguistic groups; high rates of false positives or false negatives; and the difficulty in detecting highly nuanced, context-dependent, or culturally specific forms of malicious communication, requiring ongoing human oversight.
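To make these risks measurable, false positive and false negative rates can be computed per user group from an audit sample and then compared. The following is a minimal sketch: the class name, the group labels, and all counts are hypothetical and chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationCounts:
    """Outcome counts from comparing automated decisions with human-verified labels."""
    true_positives: int   # violating content that was flagged
    false_positives: int  # acceptable content that was flagged anyway
    true_negatives: int   # acceptable content that was left up
    false_negatives: int  # violating content that was missed


def false_positive_rate(c: ModerationCounts) -> float:
    """Share of acceptable content that was wrongly flagged."""
    return c.false_positives / (c.false_positives + c.true_negatives)


def false_negative_rate(c: ModerationCounts) -> float:
    """Share of violating content that slipped through."""
    return c.false_negatives / (c.false_negatives + c.true_positives)


# Hypothetical audit samples for two language groups (illustrative numbers only).
audit = {
    "language_a": ModerationCounts(90, 20, 880, 10),
    "language_b": ModerationCounts(80, 60, 840, 20),
}

for group, counts in audit.items():
    print(group,
          f"FPR={false_positive_rate(counts):.3f}",
          f"FNR={false_negative_rate(counts):.3f}")

# A large gap in false positive rates between groups is one possible signal of bias.
fpr_gap = abs(false_positive_rate(audit["language_a"])
              - false_positive_rate(audit["language_b"]))
print(f"FPR gap between groups: {fpr_gap:.3f}")
```

A gap of this kind does not prove unfair treatment on its own, but it is the sort of metric a bias audit would typically flag for human follow-up.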

Which application segment is expected to grow the fastest in automated moderation?

The Online Gaming Platforms application segment is expected to exhibit the fastest growth. This is due to the explosion of real-time, high-volume interaction (text, voice chat, live streaming) within virtual worlds, necessitating instantaneous, highly scalable automated moderation to combat toxicity, cheating, and harassment while maintaining player safety.

What is hybrid content moderation?

Hybrid content moderation is an operational model that combines the speed and scalability of automated AI tools with the precision and contextual judgment of human moderators. AI systems handle the vast majority of routine, unambiguous content, while flagged complex, borderline, or highly sensitive cases are escalated to human experts for final policy determination.
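One common way to picture this escalation logic is a pair of confidence thresholds applied to a classifier score. The sketch below assumes a single violation score between 0 and 1; the threshold values and the names `route` and `Decision` are hypothetical and would be tuned per policy in practice.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"


def route(violation_score: float,
          approve_below: float = 0.10,
          remove_above: float = 0.95) -> Decision:
    """Route one item based on a model's estimated probability of a violation.

    Confident scores are handled automatically; everything in between
    is escalated to a human moderator.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_above:
        return Decision.REMOVE
    if violation_score < approve_below:
        return Decision.APPROVE
    return Decision.HUMAN_REVIEW


# Hypothetical scores for three pieces of content.
for score in (0.02, 0.55, 0.99):
    print(score, route(score).value)
```

Widening the band between the two thresholds sends more items to human review, trading moderation cost for fewer automated mistakes.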

How do automated tools handle emerging threats like deepfakes and Generative AI content?

Automated tools combat deepfakes and GenAI content through specialized forensic AI techniques, including anomaly detection, metadata analysis, digital watermarking detection, and the use of adversarial machine learning models designed to identify synthetic media characteristics that are often imperceptible to humans.

Is cloud deployment preferred over on-premise in this market?

Yes, Cloud deployment is overwhelmingly preferred in the Automated Content Moderation Market, accounting for the largest market share. This preference stems from the crucial need for scalability to handle fluctuating content volumes, reduced upfront infrastructure costs, and the ease of integrating continuous software updates and advanced ML models provided via a SaaS model.

What role does data labeling play in automated moderation systems?

Data labeling is fundamental to training high-accuracy moderation models. Human annotators meticulously label vast datasets of text, images, and videos, defining what constitutes policy violation. The quality and diversity of this labeled data directly determine the effectiveness, fairness, and performance capabilities of the resultant AI moderation engine.

What is pre-moderation versus post-moderation?

Pre-moderation involves screening and blocking content before it is published to the public (e.g., vetting user names or product listings), ensuring maximum brand safety. Post-moderation allows content to be published immediately but uses automated systems to continuously scan and identify violations for removal or flagging after publication, balancing speed with safety protocols.
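The difference between the two modes comes down to whether the policy check runs before or after content becomes visible. The sketch below illustrates that ordering with a toy in-memory feed; the `Feed` class and the keyword-based `violates_policy` check are hypothetical stand-ins for a real platform and a real classifier.

```python
from typing import Callable, List


def violates_policy(text: str) -> bool:
    """Hypothetical stand-in for a real classifier: flags one placeholder term."""
    return "forbidden" in text.lower()


class Feed:
    """Toy in-memory feed that supports both moderation modes."""

    def __init__(self, check: Callable[[str], bool]) -> None:
        self.check = check
        self.published: List[str] = []

    def submit_pre_moderated(self, text: str) -> bool:
        """Screen first; publish only if the check passes."""
        if self.check(text):
            return False  # blocked, never visible to other users
        self.published.append(text)
        return True

    def submit_post_moderated(self, text: str) -> None:
        """Publish immediately; violations are handled by a later sweep."""
        self.published.append(text)

    def sweep(self) -> List[str]:
        """Scan published items and take down any that violate policy."""
        removed = [t for t in self.published if self.check(t)]
        self.published = [t for t in self.published if not self.check(t)]
        return removed


feed = Feed(violates_policy)
was_published = feed.submit_pre_moderated("A forbidden listing")
feed.submit_post_moderated("Another forbidden post")
visible_before_sweep = list(feed.published)  # the post-moderated item is live here
removed = feed.sweep()
print(was_published, visible_before_sweep, removed, feed.published)
```

The window between publication and the sweep is the exposure that post-moderation accepts in exchange for immediate posting.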

Why is the BFSI sector showing increasing interest in content moderation?

The BFSI sector utilizes content moderation to ensure regulatory compliance (e.g., FINRA, anti-money laundering regulations) by monitoring internal and public communications for potential fraud, insider trading discussions, unauthorized financial advice, and ensuring adherence to professional conduct standards within digital communication channels.

Which region is expected to demonstrate the highest CAGR?

The Asia Pacific (APAC) region is projected to register the highest Compound Annual Growth Rate (CAGR). This acceleration is driven by the massive scale of digital populations, the rapid proliferation of local social media and e-commerce platforms, and the complex need to manage diverse linguistic and cultural moderation requirements across multiple countries.

What is the significance of the shift to multimodal AI in content moderation?

Multimodal AI is significant because malicious actors often attempt to evade detection by splitting harmful messages across different content types (e.g., violent audio paired with benign video). Multimodal AI integrates input from text, audio, and visual processors simultaneously to make more robust and contextually accurate decisions, drastically improving detection rates.
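As a rough illustration of why combining modalities helps, the sketch below fuses per-modality violation scores with a noisy-OR rule. This rule is only one simple choice, picked here for illustration and not described in the report; it assumes the modality scores are independent, and the scores and threshold are hypothetical.

```python
from math import prod
from typing import Dict

THRESHOLD = 0.80  # hypothetical action threshold


def fuse_noisy_or(scores: Dict[str, float]) -> float:
    """Combine per-modality violation probabilities with a noisy-OR rule.

    The fused score is the probability that at least one modality signals
    a violation, assuming the modality scores are independent.
    """
    for name, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} must be between 0 and 1")
    return 1.0 - prod(1.0 - s for s in scores.values())


# Hypothetical item whose harmful message is split across modalities,
# so that no single detector is confident on its own.
scores = {"text": 0.5, "audio": 0.6, "video": 0.1}

strongest_single = max(scores.values())
fused = fuse_noisy_or(scores)

print(f"Strongest single-modality score: {strongest_single:.2f}")
print(f"Fused score: {fused:.2f}")
print("Action taken on fused score:", fused >= THRESHOLD)
```

In this example no individual detector crosses the threshold, while the fused score does. Production systems often learn the fusion jointly rather than applying a fixed rule like this one.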

How do small and medium enterprises (SMEs) typically access automated moderation?

SMEs typically access automated moderation through affordable, API-driven Software-as-a-Service (SaaS) solutions offered by cloud providers or specialized vendors. These solutions allow for quick integration and scalable pricing models suitable for platforms with unpredictable or moderate content volumes, avoiding the high CAPEX of custom, on-premise systems.

What is the primary driver related to brand reputation in this market?

The primary driver related to brand reputation is the need to prevent association with harmful or illegal content, which can lead directly to advertiser boycotts, reputational damage, and loss of consumer trust. Robust automated moderation systems are viewed as an essential insurance policy for maintaining brand safety and integrity.

Are there specialized solutions for audio and voice moderation?

Yes, specialized solutions exist for audio and voice moderation, utilizing sophisticated Speech-to-Text (STT) transcription capabilities combined with NLP models and deep learning acoustic analysis. These systems are crucial for monitoring voice chat in multiplayer games and managing policy adherence in podcasts and live audio platforms.

What is the impact of ethical AI frameworks on moderation technology?

Ethical AI frameworks impose requirements for transparency, accountability, and fairness in automated moderation decisions. This pushes vendors to develop Explainable AI (XAI) features, perform rigorous bias audits, and provide detailed policy enforcement reports, moving the technology toward greater trustworthiness and closer alignment with public expectations.

How critical is low latency for content moderation?

Low latency is critically important for real-time moderation, especially in live streaming, online chat, and gaming environments. If latency is high, malicious content can be displayed and cause harm before the automated system can intervene, compromising the safety and integrity of the live platform.

What emerging technology, besides core AI, is influencing moderation?

Blockchain technology is an emerging influence, primarily explored for establishing immutable content provenance records. This helps platforms track the origin of content and verify authenticity, aiding in the battle against massive-scale misinformation and intellectual property violations.

Why are specialized services (professional services) growing in demand?

Specialized professional services are growing because platforms require expert assistance in developing complex, nuanced policies, fine-tuning generic ML models to fit highly specific community guidelines, and integrating complex hybrid moderation workflows that seamlessly connect automated and human review processes.

What challenge does linguistic diversity present for automation?

Linguistic diversity presents a challenge because automated systems must accurately moderate hundreds of languages, along with localized dialects, slang, and cultural references. This requires continuous training of localized NLP models, which demands significant investment in data labeling and regional expert knowledge to prevent systemic false classifications.

Do content moderation vendors also offer managed services?

Yes, many content moderation vendors offer managed services. This model allows platforms to outsource the entire moderation function—including the deployment, maintenance, staffing of human reviewers, and ongoing policy refinement—to the vendor, thereby offloading operational complexity and technological risks.

What differentiates moderation in e-commerce from social media?

E-commerce moderation primarily focuses on product listing compliance, fraud prevention, IP infringement, and review spam. Social media moderation focuses more heavily on user behavior, interpersonal harassment, hate speech, violence, and misinformation, requiring distinct AI models tailored to each threat profile.

Is the market moving towards centralization or decentralization of moderation technology?

While the actual moderation service delivery remains largely centralized by major platforms, there is a trend toward the decentralization of foundational trust and safety standards, particularly through open-source AI models and industry consortiums that seek to share best practices and common detection frameworks.

How is the IT & Telecom vertical utilizing automated content moderation?

The IT & Telecom vertical utilizes automated content moderation primarily for monitoring internal corporate communication platforms, managing customer support forums, filtering network traffic for illegal or malicious payloads, and ensuring compliance within messaging services provided by telecommunication carriers.

What is the impact of AI on the cost efficiency of moderation?

AI improves cost efficiency by reducing the need to scale human labor in proportion to content volume. While initial AI implementation costs are high, the long-term operational savings from handling routine content automatically yield significant returns, allowing human reviewers to focus on highly complex cases and legal gray areas.
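One common way to realize this division of labor is confidence-based routing, sketched below under stated assumptions: the classifier emits a harm score in [0, 1], and the two thresholds (`approve_below`, `remove_above`) are hypothetical values that a platform would tune to its own policies. Only the uncertain middle band reaches human reviewers.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"


def route(score: float, approve_below: float = 0.2, remove_above: float = 0.95) -> Decision:
    """Route an item by its harm score; thresholds are illustrative assumptions."""
    if score < approve_below:
        return Decision.APPROVE      # clearly benign: handled automatically
    if score > remove_above:
        return Decision.REMOVE       # clearly violating: handled automatically
    return Decision.HUMAN_REVIEW     # ambiguous: escalate to a person


scores = [0.01, 0.03, 0.50, 0.99, 0.10]
decisions = [route(s) for s in scores]
human_share = decisions.count(Decision.HUMAN_REVIEW) / len(decisions)
print(human_share)  # 0.2
```

In this toy batch only one item in five needs a human decision; widening or narrowing the middle band trades reviewer workload against the risk of automated mistakes.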

What is a primary restraint related to algorithmic accuracy?

A primary restraint is the difficulty of reducing the "error rate" (false positives and false negatives) to near zero. Malicious content creators constantly iterate to bypass automated filters, which requires continuous, costly retraining and refinement of the AI models and poses a persistent operational challenge.
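The two error types can be made concrete with a short sketch. The function name `error_rates` and the sample labels are illustrative assumptions; `True` means "violating" in both lists.

```python
def error_rates(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) for a batch of decisions."""
    false_pos = sum(p and not a for p, a in zip(predicted, actual))
    false_neg = sum(a and not p for p, a in zip(predicted, actual))
    negatives = sum(not a for a in actual)   # truly benign items
    positives = sum(actual)                  # truly violating items
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


# Made-up example: one benign post wrongly removed, one violating post missed.
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]
fpr, fnr = error_rates(predicted, actual)
print(fpr, round(fnr, 3))  # 0.5 0.333
```

A false positive removes legitimate content, while a false negative lets harmful content through, so platforms usually track both rates separately rather than a single accuracy figure.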

Are smaller platforms legally obligated to adopt automated moderation?

Legal obligations vary by jurisdiction and platform size. Regulations such as the EU Digital Services Act (DSA) target Very Large Online Platforms (VLOPs) first. Even without a direct legal mandate, smaller platforms adopt automated solutions out of commercial necessity, such as maintaining advertiser trust, ensuring brand safety, and managing rapid user growth efficiently.

What role does anomaly detection play in automated moderation?

Anomaly detection is crucial for identifying entirely new, never-before-seen types of malicious content or behavioral patterns. By flagging deviations from established norms, automated systems can catch zero-day exploits or newly emerging forms of coded hate speech before they become widespread, providing proactive defense.
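A minimal sketch of "flagging deviations from established norms" is a z-score check against a historical baseline. The signal being tracked here (hourly counts of posts containing some term), the function name `is_anomalous`, and the threshold of 3 standard deviations are all assumptions for illustration; real systems use considerably richer models.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], current: float, z_threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `z_threshold` std devs from the baseline mean."""
    if len(history) < 2:
        return False                 # not enough data to define a norm
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu         # flat baseline: any change is a deviation
    return abs(current - mu) / sigma > z_threshold


# Hypothetical hourly counts of posts containing a previously rare term.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]
print(is_anomalous(baseline, 6))   # False
print(is_anomalous(baseline, 40))  # True
```

A sudden spike like the second case could indicate a newly coined code word spreading, which would then be escalated for review even though no existing filter recognizes it.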

How is video moderation different from text moderation?

Video moderation is significantly more complex than text moderation because it requires processing multiple layers simultaneously: visual frames (using Computer Vision), audio tracks (using Speech-to-Text and acoustic analysis), and temporal context. This requires immense computational power and highly advanced multimodal AI to achieve accurate real-time results.

What opportunities exist in the Web3 and Metaverse sectors?

The Web3 and Metaverse sectors offer significant opportunities for automated content moderation, specifically in virtual environments requiring real-time 3D object detection, avatar behavior analysis, and spatial audio monitoring to ensure user safety and prevent virtual harassment and asset fraud.

Research Methodology

Market Research Update offers technology-driven solutions and integrates them fully into the research process at every step. We use diverse assets to produce the best results for our clients. The success of a research project depends entirely on the research process the company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update provides consistency across its research reports, along with forecast analysis across a wide range of geographies and coverage areas. The research teams carry out primary and secondary research to design and implement the data collection procedure. The research team then analyzes data on the latest trends and major issues for each industry and country, which helps to determine anticipated market developments.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data via different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured from the earlier step. It also includes the analysis of data differences observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client's requirements.

- Market Formulation

This step entails placing data points in the appropriate market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-expert examination of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. An intricately designed validation procedure helps us finalize the data points to be used in the final calculations.

