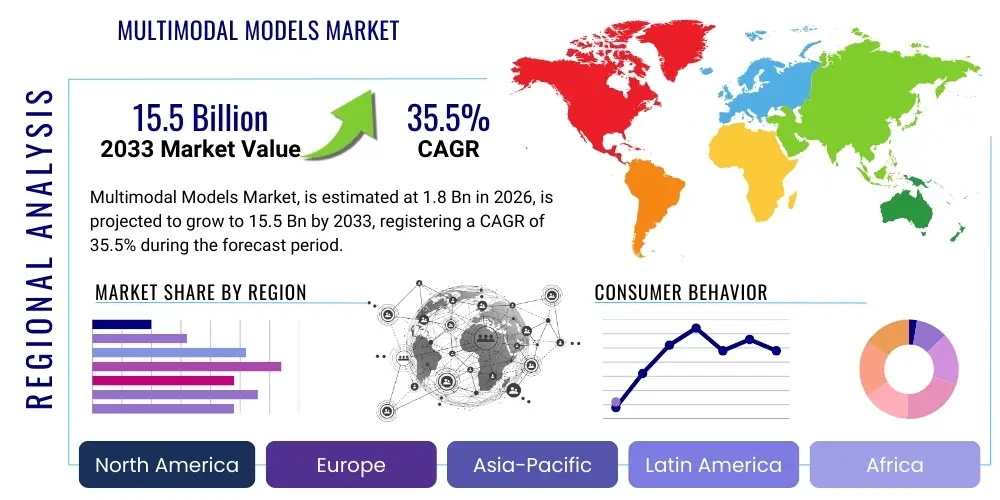

Multimodal Models Market Size, By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2026 to 2033 (Financial Impact Analysis)

ID : MRU_ 440944 | Date : Feb, 2026 | Pages : 241 | Region : Global | Publisher : MRU

Multimodal Models Market Size

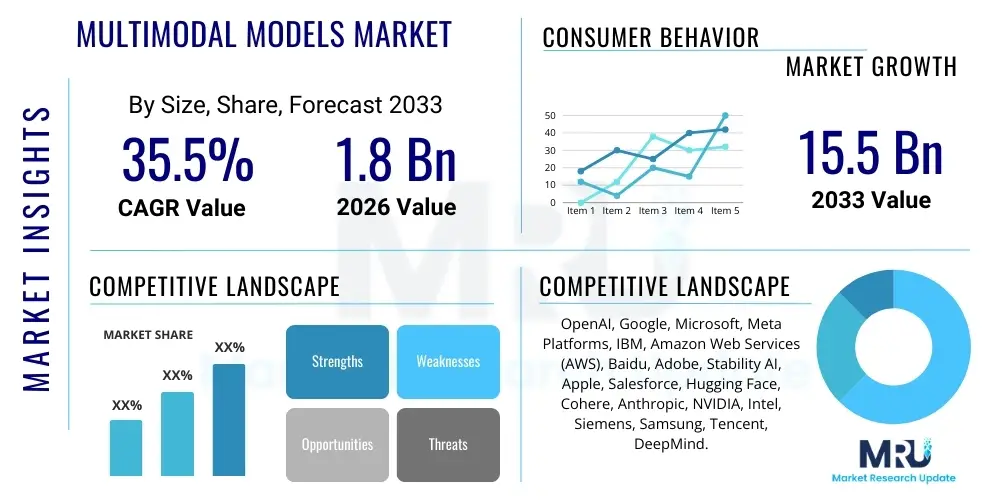

The Multimodal Models Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 35.5% between 2026 and 2033. The market is estimated at $1.8 Billion in 2026 and is projected to reach $15.5 Billion by the end of the forecast period in 2033. This exponential growth trajectory is fundamentally driven by the escalating demand across enterprise sectors for sophisticated AI systems capable of processing and synthesizing diverse data inputs, including text, image, audio, and video, to generate richer and more contextually relevant outputs. The transition from monolithic, single-domain AI architectures to integrated, multimodal frameworks represents a critical technological pivot, underpinning the rapid market expansion observed globally, particularly in areas requiring complex sensory fusion like autonomous driving and advanced diagnostic tools.
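The headline figures can be sanity-checked with the standard compound-growth formula. The sketch below is a minimal illustration, assuming seven annual compounding periods between 2026 and 2033 (the report does not state its compounding convention); the function and variable names are hypothetical.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures quoted in the report (USD billions); the 7-period count is an assumption.
START_2026, END_2033, STATED_CAGR, PERIODS = 1.8, 15.5, 0.355, 7

cagr_from_endpoints = implied_cagr(START_2026, END_2033, PERIODS)
value_from_stated_rate = project(START_2026, STATED_CAGR, PERIODS)

print(f"CAGR implied by endpoints: {cagr_from_endpoints:.1%}")
print(f"2033 value at stated CAGR: ${value_from_stated_rate:.1f}B")
```

Under that assumption, the endpoints imply a rate of roughly 36%, and compounding the stated 35.5% yields roughly $15.1 billion. The small gap from the quoted $15.5 billion may reflect rounding or a different base-period convention.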

Multimodal Models Market Introduction

The Multimodal Models Market encompasses the development, deployment, and utilization of Artificial Intelligence systems designed to process, interpret, and generate outputs across multiple modalities simultaneously. These models move beyond traditional AI limitations by integrating disparate data types—such as text, images, speech, and structured data—into a unified representational space, allowing for deeper contextual understanding and more human-like reasoning capabilities. The core technology leverages advanced neural networks, including transformers and diffusion models, specifically engineered to handle the complexities of cross-modal relationships. This confluence of technological innovation is enabling groundbreaking applications across various industries, fundamentally altering how enterprises interact with and derive insights from vast, complex data environments, leading to significant advancements in automation and decision support systems.

Major applications of multimodal models span several high-value sectors, including the creation of sophisticated digital content, enhancement of accessibility features through comprehensive translation services (e.g., image-to-text-to-speech), and powering highly reliable autonomous systems that require instantaneous fusion of visual and sensory inputs for safe operation. Furthermore, the market benefits greatly from their use in complex tasks such as medical image analysis coupled with electronic health record data, significantly improving diagnostic accuracy and efficiency in the healthcare domain. These models offer substantial benefits, including increased accuracy in classification and prediction tasks, improved robustness in real-world scenarios due to redundancy across modalities, and the ability to unlock previously unattainable levels of creativity in content generation, solidifying their role as pivotal tools in the next generation of enterprise technology stacks.

Driving factors for this market expansion are multifaceted, anchored by the increasing availability of massive, labeled multimodal datasets essential for training these sophisticated models, alongside continuous breakthroughs in computational efficiency through specialized hardware (like GPUs and TPUs). Corporate investment in AI research and development has surged, recognizing multimodal capabilities as key competitive differentiators. Furthermore, the maturation of foundational models (large language models and large vision models) provides a robust platform upon which complex, custom multimodal architectures can be efficiently built, accelerating time-to-market for enterprise solutions. Regulatory pushes in certain sectors, such as enhanced safety standards for autonomous vehicles, also necessitate the reliable, comprehensive understanding provided by multimodal AI, thus sustaining the strong momentum of market adoption.

Multimodal Models Market Executive Summary

The Multimodal Models Market is characterized by intense innovation and rapid commercialization, positioning it as one of the fastest-growing segments within the broader AI landscape. Current business trends indicate a strong focus on platform development, where leading technology firms are investing heavily in creating scalable APIs and specialized cloud services that democratize access to multimodal capabilities for smaller enterprises and developers. A critical trend involves the shift towards smaller, more efficient models that can operate effectively at the edge (on devices like smartphones and robotics), reducing latency and dependency on central cloud infrastructure. Furthermore, strategic partnerships between hardware manufacturers and AI software developers are becoming commonplace, aimed at optimizing model performance for specific compute environments, thus ensuring the viability of real-time multimodal applications across diverse operational settings.

Regionally, North America maintains its dominance due to high levels of venture capital funding, the presence of major technological pioneers, and robust adoption across key industries like media and autonomous technology. However, the Asia Pacific (APAC) region is demonstrating the highest growth velocity, fueled by expansive digital transformation initiatives in countries like China, India, and South Korea, coupled with significant governmental investment in AI infrastructure and applications, particularly within smart city projects and localized content generation. Europe is showing consistent growth, driven by stringent regulatory frameworks focusing on ethical AI development and strong application uptake in specialized sectors such as advanced manufacturing and pharmaceutical research, positioning it as a significant hub for ethical and trustworthy multimodal AI solutions.

Segmentation trends highlight a pronounced shift towards application-specific model development, moving away from purely generalized architectures. The Content Generation segment (especially video and synthetic media creation) is experiencing explosive demand due to its immediate commercial value in marketing and entertainment. Concurrently, the Healthcare sector's adoption, specifically utilizing multimodal fusion for radiology, pathology, and clinical data interpretation, represents a high-growth, high-impact vertical. Deployment preferences are increasingly leaning towards hybrid or cloud-based solutions, allowing enterprises the flexibility to scale computational resources as data complexity and processing demands fluctuate, although on-premise deployments remain critical for security-sensitive industries like defense and finance where data sovereignty is paramount.

AI Impact Analysis on Multimodal Models Market

User queries regarding the impact of AI on the Multimodal Models Market commonly revolve around themes of generative capabilities, ethical concerns regarding synthetic content, and the technological feasibility of achieving true cross-modal understanding. Specifically, users frequently ask about the role of Large Language Models (LLMs) and Large Vision Models (LVMs) as foundational elements, the speed at which multimodal systems can replace human tasks (e.g., complex transcription or asset creation), and the cybersecurity implications associated with deepfakes and manipulated synthetic data. The pervasive expectation is that continuous advancements in neural network architectures, coupled with increasing accessibility to massive, diverse datasets, will accelerate the development of highly integrated systems that demonstrate near-human levels of perception and reasoning, fundamentally redefining computational tasks across all enterprise functions. Conversely, concerns focus on the potential for increased bias propagation when fusing disparate datasets and the necessary governance structures required to manage the deployment of powerful generative models responsibly.

The primary impact of AI itself—specifically, the maturation of deep learning techniques—is inherently intertwined with the existence and growth of the Multimodal Models Market. The advent of transformer architecture has been the single most crucial enabler, allowing models to weigh the relevance of different data types (attention mechanisms) and integrate them into a unified representation space. This technological backbone facilitates the market's current focus on creating truly generalist AI systems capable of handling complex, real-world inputs that are inherently noisy and heterogeneous. Furthermore, optimization algorithms and techniques like prompt engineering, originally refined for LLMs, are now being adapted to multimodal contexts, significantly streamlining the process of fine-tuning these complex systems for specialized commercial applications and improving user interaction efficiency.

The continuous feedback loop within the AI development ecosystem—where deployment data informs model refinement—ensures sustained market momentum. As multimodal models become deployed in high-stakes environments, such as autonomous vehicles or medical diagnostics, the rigorous performance requirements drive further innovation in robustness, interpretability (explainable AI), and efficiency. This iterative improvement cycle, fueled by competitive pressures among leading technology providers, ensures that the market for multimodal models remains dynamic, constantly pushing the boundaries of what is computationally possible and rapidly converting theoretical capabilities into tangible enterprise solutions across diverse global economic sectors.

- Accelerated development of foundation models capable of processing and synthesizing text, image, and audio inputs seamlessly.

- Increased market demand for unified AI platforms that minimize latency and complexity in cross-modal data interpretation.

- Enhancement of Generative AI (GenAI) capabilities, moving from single-output creation to complex, context-aware multimodal content generation (e.g., generating video from text and audio descriptions).

- Greater emphasis on ethical AI frameworks and governance due to the potential for sophisticated misuse, such as creating hyper-realistic deepfakes.

- Driving demand for specialized AI hardware (e.g., NPUs, customized ASICs) optimized for the high computational demands of fusing large-scale modalities.

- Improvement in AI interpretability (XAI) tools designed to explain decisions derived from the fusion of diverse data streams.

DRO & Impact Forces Of Multimodal Models Market

The Multimodal Models Market is simultaneously propelled by powerful drivers, constrained by critical limitations, and shaped by compelling opportunities, all interacting to determine its trajectory. Key drivers include the overwhelming proliferation of complex, unstructured data, which necessitates advanced AI tools capable of holistic interpretation, and the demonstrated superior performance of multimodal systems over unimodal counterparts in accuracy and resilience across tasks like scene understanding and complex data query resolution. Restraints largely center on the intensive computational demands and high costs associated with training and deploying these large-scale models, alongside persistent challenges regarding data harmonization—the difficulty of aligning and cleaning disparate, large-scale datasets from varied sources—and addressing inherent biases that can be magnified when integrating multiple potentially biased data streams. Opportunities are abundant, specifically in emerging high-growth verticals like robotics, personalized education platforms, and specialized diagnostic healthcare, where the fusion of information delivers essential value propositions that cannot be met by current technology. These factors, alongside the pervasive influence of continuous innovation in transformer architectures and efficient model compression techniques, define the market's dynamic landscape and its potential for exponential growth over the forecast period.

Impact forces acting upon the market dictate the speed and direction of technology adoption. Technological advancement serves as a massive positive force, as breakthroughs in efficient model scaling, transfer learning across modalities, and the democratization of open-source models rapidly lower the barrier to entry and deployment. Economic factors, particularly the increasing willingness of large enterprises to allocate substantial R&D budgets toward integrated AI solutions, provide the financial impetus for continued innovation and commercial scale-up. Regulatory and ethical forces act as both restraints and drivers; while stringent data privacy laws (like GDPR) impose compliance burdens and restrict data access, the demand for transparent and ethically governed AI systems drives specialized market opportunities for solutions that embed explainability and fairness by design. Competitive intensity among major tech giants (Google, Microsoft, OpenAI, and Meta) is forcing rapid feature iteration and aggressive pricing strategies, ultimately benefiting end-users by accelerating the availability of high-quality multimodal solutions and fostering a robust ecosystem of complementary services.

The equilibrium between these opposing forces is highly sensitive to external factors, most notably the rate of innovation in specialized AI hardware. If computational costs decrease significantly faster than anticipated due to chip breakthroughs (e.g., neuromorphic computing), the restraint related to high deployment cost will diminish, allowing for much broader enterprise adoption globally. Conversely, if high-profile ethical failures or security breaches related to synthetic media lead to restrictive global regulations, the market might experience a temporary slowdown as companies prioritize compliance and robustness over aggressive feature rollout. Ultimately, the long-term momentum is secured by the fundamental recognition that future complex decision-making and automated intelligence require the integrated, holistic understanding inherent only in multimodal AI systems.

- Drivers: Proliferation of complex, unstructured data; demonstrated superior performance over unimodal systems; surging investment in AI infrastructure; advancements in unified transformer architectures.

- Restraints: High computational costs for training and deployment; challenges in multimodal data harmonization and cleaning; complexity in model fine-tuning; regulatory hurdles concerning data privacy and synthetic content governance.

- Opportunities: Expansion into high-value domains like autonomous robotics and personalized learning; creation of novel human-computer interaction paradigms; development of proprietary, domain-specific foundation models; edge computing integration for real-time applications.

- Impact Forces: Technological breakthroughs in model efficiency; high competitive pressure among leading tech firms; increasing regulatory scrutiny necessitating ethical AI solutions; expanding accessibility of open-source multimodal toolkits.

Segmentation Analysis

The Multimodal Models Market is meticulously segmented across multiple dimensions, including Component, Deployment Mode, Application, and End-Use Industry, reflecting the diverse ways in which this technology is commercialized and utilized across the global economy. This granular segmentation allows vendors to tailor their offerings—whether they are core platform technologies or highly specialized integration services—to meet the specific operational and compliance needs of various end-users. The analysis of these segments reveals that while core Platform components drive the initial investment, the Services segment, covering customization, integration, and maintenance, represents a significant recurring revenue stream due to the complexity inherent in deploying and maintaining sophisticated, cross-modal AI systems within existing enterprise architectures. Understanding these market divisions is crucial for strategic planning, resource allocation, and identifying lucrative, untapped vertical market niches where multimodal AI can deliver transformative value.

- By Component: Platform, Services.

- By Deployment: Cloud, On-Premise, Hybrid.

- By Application: Content Generation (Synthetic Media, Video), Autonomous Systems (Robotics, Vehicles), Healthcare Diagnostics & Imaging, Financial Risk Modeling, Education & Personalized Learning, Retail & E-commerce (Visual Search, Product Recommendations).

- By End-Use Industry: IT & Telecom, Healthcare & Life Sciences, Automotive & Transportation, Retail & Consumer Goods, Media & Entertainment, BFSI (Banking, Financial Services, and Insurance), Government & Defense.

Value Chain Analysis For Multimodal Models Market

The value chain for the Multimodal Models Market is characterized by highly specialized stages, beginning with the foundational upstream activities crucial for model creation and culminating in sophisticated downstream deployment and user interaction. Upstream analysis focuses heavily on data acquisition and preprocessing—securing vast, high-quality, and ethically sourced multimodal datasets (text, image, audio) and the subsequent labor-intensive process of data annotation and alignment, often involving specialized outsourced labeling services. Following data preparation, the next critical upstream stage is foundational model research and development, dominated by large technology firms and academic institutions that possess the necessary compute resources (advanced supercomputers, specialized data centers) and expertise to train massive transformer-based architectures capable of cross-modal understanding. This stage requires continuous investment in proprietary intellectual property and specialized hardware optimization, creating significant barriers to entry for smaller players and establishing a clear hierarchy of foundational model providers.

The midstream of the value chain involves platform creation and customization, where the foundational models are refined, compressed, and made accessible via APIs or integrated development environments (IDEs). Key activities here include creating model serving infrastructure, developing tools for prompt engineering and fine-tuning, and packaging models for specific industry use cases. Distribution channels are varied, involving both direct and indirect routes. Direct distribution is common for hyperscalers (like AWS, Google Cloud, Microsoft Azure) offering proprietary multimodal models directly through their cloud marketplaces, providing integrated billing and infrastructure management. Indirect channels are crucial for market penetration, relying heavily on partnerships with system integrators, specialized AI consulting firms, and independent software vendors (ISVs) who tailor and deploy these complex solutions within client enterprise environments. These indirect partners provide the necessary domain expertise and localized support often required for successful, complex multimodal implementation.

Downstream analysis centers on end-user application and solution deployment, focusing on delivering tangible business outcomes. This stage involves deep integration into existing enterprise workflows, continuous performance monitoring, and iterative user feedback loops to maintain model accuracy and relevance. The commercial realization occurs when the multimodal capabilities are embedded into consumer products (e.g., smart home devices, advanced search engines) or high-value enterprise applications (e.g., autonomous driving stacks, clinical decision support systems). The efficiency and reliability of the distribution channel—whether direct SaaS delivery or complex custom deployment via integrators—significantly impacts the total cost of ownership and the speed of market adoption, cementing the distribution network's importance as a critical determinant of commercial success in this rapidly evolving technology market.

Multimodal Models Market Potential Customers

Potential customers for Multimodal Models span a wide array of sophisticated entities requiring automated interpretation and generation across complex data streams, moving beyond the capabilities of conventional AI or simple automation solutions. The primary end-users are large enterprises across regulated and non-regulated sectors that possess vast quantities of disparate, complex data (e.g., medical images combined with text reports, sensor data combined with geographic information systems inputs, and customer interaction logs combined with social media imagery). High-value buyers include technology-intensive industries like the Automotive sector, which requires real-time fusion of LiDAR, radar, camera, and map data for safe autonomous operation, and the Media & Entertainment industry, which leverages these models for generating complex synthetic assets, automating localization, and enhancing content accessibility. The demand is driven by the necessity for enhanced operational efficiency, superior predictive accuracy, and the capability to create novel, engaging customer experiences that are difficult to replicate using unimodal systems.

Another significant segment of buyers comprises research institutions and governmental organizations, particularly those involved in defense, national security, and academic science. These entities require multimodal analysis for tasks such as complex surveillance data fusion, threat detection across varied inputs, and accelerating scientific discovery by interpreting complex experimental results alongside published literature. Furthermore, the burgeoning field of personalized medicine positions healthcare providers and pharmaceutical companies as crucial buyers; they seek to integrate genomics data, patient records, and diagnostic imagery to develop highly targeted and individualized treatment plans. These customers are typically motivated by the ability to achieve superior classification, reduce error rates in critical operations, and unlock deeper insights that were previously inaccessible due to the difficulty of unifying diverse data modalities.

| Report Attributes | Report Details |
|---|---|
| Market Size in 2026 | $1.8 Billion |
| Market Forecast in 2033 | $15.5 Billion |
| Growth Rate | 35.5% CAGR |
| Historical Year | 2019 to 2024 |
| Base Year | 2025 |
| Forecast Year | 2026 - 2033 |
| DRO & Impact Forces | Drivers, Restraints, Opportunities, Impact Forces |
| Segments Covered | Component, Deployment, Application, End-Use Industry |
| Key Companies Covered | OpenAI, Google, Microsoft, Meta Platforms, IBM, Amazon Web Services (AWS), Baidu, Adobe, Stability AI, Apple, Salesforce, Hugging Face, Cohere, Anthropic, NVIDIA, Intel, Siemens, Samsung, Tencent, DeepMind. |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |


Multimodal Models Market Key Technology Landscape

The technological landscape of the Multimodal Models Market is fundamentally defined by the convergence of several advanced computing and AI disciplines, underpinned by architectural breakthroughs in deep neural networks. The central pillar is the Transformer architecture, which, through self-attention mechanisms, enables the system to weigh the relevance of input tokens regardless of their modality (text, visual patches, or audio spectrograms), thereby creating a unified latent space for cross-modal comprehension. Key technologies also include specialized encoders such as Vision Transformers (ViT) for image processing and various speech recognition models, all integrated under a unified framework. Furthermore, contrastive learning techniques, such as those used in CLIP (Contrastive Language–Image Pre-training), are crucial: they allow models to learn robust associations from image-text pairs without requiring manually annotated class labels, significantly enhancing the efficiency and generalizability of model training across diverse datasets.
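To make the attention idea concrete, the following is a minimal sketch of scaled dot-product self-attention over a mixed-modality token sequence. It assumes the tokens have already been encoded into one shared embedding space and, as a simplification, uses identity query/key/value projections where real models use learned matrices. The embeddings and names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Real models apply learned projection matrices; they are omitted here
    to keep the sketch short.
    """
    dim = len(tokens[0])
    weights, outputs = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted mix of all tokens, whatever their modality.
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, tokens)) for i in range(dim)]
        )
    return weights, outputs


# Hypothetical, hand-written embeddings already projected into one shared space.
sequence = [
    ("text", [1.0, 0.0, 0.5, 0.0]),
    ("text", [0.9, 0.1, 0.4, 0.0]),
    ("image_patch", [0.8, 0.0, 0.6, 0.1]),
    ("audio_frame", [0.0, 1.0, 0.0, 0.7]),
]
attn_weights, mixed = self_attention([vec for _, vec in sequence])

for (modality, _), row in zip(sequence, attn_weights):
    print(modality, [round(w, 2) for w in row])
```

Each printed row sums to 1 and shows how strongly one token attends to every other token. In this toy data, the first text token gives more weight to the image patch than to the audio frame because their embeddings are more similar.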

The efficiency of training and deployment is heavily reliant on advanced hardware and software optimization. The utilization of high-performance computing clusters, predominantly leveraging specialized GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), is essential to handle the massive parameter counts and complex matrix operations inherent in multimodal model training. Software optimization frameworks, including PyTorch and TensorFlow, alongside specialized distribution and parallelization libraries (e.g., DeepSpeed), are critical for managing the memory constraints and computational load associated with models containing billions or trillions of parameters. Moreover, the growing emphasis on model quantization and pruning techniques allows for the deployment of these large, complex models on resource-constrained environments, such as mobile devices and edge computing platforms, effectively expanding the accessibility and real-time application potential of multimodal capabilities across various industrial sectors and consumer electronics.
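The pruning and quantization steps mentioned above can be sketched in a few lines. This is an illustrative example only, assuming magnitude-based pruning and symmetric per-tensor int8 quantization. Production toolchains use more elaborate schemes, and the weights and function names here are hypothetical.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero out the smallest-magnitude weights, keeping roughly `keep_fraction`."""
    keep = max(1, round(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[keep - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Map integer codes back to approximate float weights."""
    return [q * scale for q in quantized]


# Hypothetical weights standing in for one layer of a model.
layer = [0.82, -0.03, 0.41, -1.27, 0.002, 0.66, -0.09, 0.15]

pruned = prune_by_magnitude(layer, keep_fraction=0.5)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))

print("pruned:   ", pruned)
print("int8 codes:", codes)
print("max round-trip error:", max_error)
```

Pruning removes the weights that contribute least, and quantization stores the remainder as small integers plus a single scale factor. The round-trip error is bounded by half the scale, which is the accuracy given up in exchange for a smaller memory footprint.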

Emerging technologies like retrieval-augmented generation (RAG) are gaining prominence, especially in enterprise multimodal search and knowledge retrieval systems. RAG combines the generative power of multimodal models with external, verifiable knowledge bases, allowing the models to generate outputs that are not only creative and coherent but also factually grounded across different modalities. This hybrid approach significantly improves the trustworthiness and applicability of multimodal models in high-stakes environments, such as legal, financial, and medical contexts, by mitigating issues related to hallucination and non-verifiable outputs. The continuous push toward developing more efficient attention mechanisms and techniques for modality alignment will continue to shape the competitive technological environment, focusing on achieving superior performance while aggressively reducing inference time and operational costs for widespread commercial viability.
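The retrieval half of a RAG pipeline can be illustrated with a small sketch. It assumes each knowledge-base item, whatever its modality, has already been embedded into a shared vector space. The vectors, item ids, and helper names below are hypothetical, and the generation step is represented only by the prompt that would be passed to a model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, index, k=2):
    """Return the k items whose embeddings are closest to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item["vec"]), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Assemble a grounded prompt; no model is called in this sketch."""
    context = "\n".join(f"- [{p['modality']}] {p['text']}" for p in passages)
    return f"Answer using only the context below.\n{context}\nQuestion: {question}"


# Hypothetical knowledge base with hand-written 3-dimensional embeddings.
index = [
    {"id": "manual-p12", "modality": "text",
     "text": "Maintenance manual: replace the filter every 500 hours.",
     "vec": [0.9, 0.1, 0.0]},
    {"id": "photo-caption", "modality": "image",
     "text": "Caption of equipment photo: filter housing, side view.",
     "vec": [0.8, 0.3, 0.1]},
    {"id": "invoice", "modality": "text",
     "text": "Invoice for office furniture.",
     "vec": [0.0, 0.2, 0.9]},
]

query_vec = [1.0, 0.2, 0.0]  # assumed embedding of the question below
top = retrieve(query_vec, index, k=2)
prompt = build_prompt("How often should the filter be replaced?", top)
print(prompt)
```

Because the model is asked to answer only from the retrieved passages, its output can be traced back to specific items in the knowledge base, which is the grounding property the paragraph above describes.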

Regional Highlights

- North America: This region dominates the Multimodal Models Market, primarily driven by the concentration of global tech giants (such as Google, Microsoft, and OpenAI) and unparalleled access to venture capital funding, fostering cutting-edge research and rapid commercialization. High adoption rates are seen in autonomous vehicle development, high-end media production, and complex defense applications. The robust ecosystem of specialized AI talent and advanced cloud infrastructure provides a foundational advantage, ensuring continued leadership in foundational model development and early deployment of sophisticated cross-modal solutions.

- Asia Pacific (APAC): APAC is projected to exhibit the highest growth rate, fueled by aggressive government investments in AI (notably in China, South Korea, and Singapore) and expansive smart city initiatives that inherently require multimodal data fusion for effective management and security. The vast and diverse digital populations provide immense, unique training data pools, accelerating localized application development in retail, e-commerce (e.g., visual search), and personalized education systems, positioning the region as a critical consumer and developer of application-specific multimodal AI.

- Europe: The European market is characterized by a strong focus on ethical AI development and regulatory compliance, particularly emphasized by the EU’s AI Act, which drives demand for explainable and secure multimodal systems, especially in highly regulated sectors like manufacturing, finance, and advanced healthcare diagnostics. While venture capital investment is lower than in North America, Europe maintains high academic excellence and robust industrial adoption, concentrating on using multimodal AI to optimize complex industrial processes (Industry 4.0) and drive R&D in life sciences.

- Latin America (LATAM): The LATAM region represents an emerging market with significant potential. Growth is accelerating, driven by increasing digital penetration and modernization efforts in banking and retail. Multimodal models are increasingly being deployed to enhance customer experience through advanced conversational AI that integrates voice, text, and visual context, addressing language diversity and accessibility challenges across the region.

- Middle East and Africa (MEA): Growth in MEA is primarily centralized around major investment hubs like the UAE and Saudi Arabia, heavily investing in large-scale strategic digital transformation projects, including NEOM and other smart city developments. The need for sophisticated surveillance, security applications, and advanced oil and gas exploration tools that fuse satellite imagery with textual geological reports drives initial high-value adoption, supported by state-backed initiatives to build regional technological self-sufficiency in AI capabilities.

Top Key Players

The market research report includes a detailed profile of leading stakeholders in the Multimodal Models Market.

- OpenAI

- Google

- Microsoft

- Meta Platforms

- IBM

- Amazon Web Services (AWS)

- Baidu

- Adobe

- Stability AI

- Apple

- Salesforce

- Hugging Face

- Cohere

- Anthropic

- NVIDIA

- Intel

- Siemens

- Samsung

- Tencent

- DeepMind

Frequently Asked Questions

What defines a Multimodal Model and how does it differ from traditional AI?

A Multimodal Model is an AI system that processes and integrates data from two or more modalities (e.g., text and images) simultaneously. It differs from traditional, unimodal AI by achieving a holistic understanding, resulting in higher accuracy and contextual relevance, especially in complex tasks like autonomous navigation or sophisticated content creation.

Which industries are adopting Multimodal Models most rapidly?

The Automotive (Autonomous Systems), Healthcare (Diagnostics and Imaging), and Media & Entertainment (Generative Content) industries are leading adoption, driven by the critical need to fuse diverse data inputs for safety, clinical accuracy, and high-quality synthetic asset generation.

What are the primary technical challenges facing the deployment of these models?

Key technical challenges include the massive computational resources required for training and inference, difficulties in harmonizing and aligning large, disparate datasets, and ensuring model efficiency for real-time operation on edge devices with limited processing power.

How is Generative AI influencing the Multimodal Models Market?

Generative AI is a major driver, utilizing multimodal capabilities to create sophisticated synthetic content, such as generating detailed videos or interactive 3D environments from simple text prompts, fundamentally transforming digital content workflows and production efficiencies across commercial sectors.

Is the Multimodal Models Market facing significant regulatory hurdles?

Yes, the market is increasingly subject to regulatory scrutiny, particularly concerning data privacy, intellectual property rights, and the ethical management of deepfakes and biased outputs, necessitating robust compliance frameworks and explainable AI (XAI) features within deployed models.


Research Methodology

Market Research Update integrates technology-driven solutions into every step of its research process. We draw on diverse resources to produce the best results for our clients. The success of a research project depends on the research process the company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update provide consistency in our research report, also we provide on the part of the analysis of forecast across a gamut of coverage geographies and coverage. The research teams carry out primary and secondary research to implement and design the data collection procedure. The research team then analyzes data about the latest trends and major issues in reference to each industry and country. This helps to determine the anticipated market-related procedures in the future. The company offers technology-driven solutions and its full incorporation in the research method to be skilled at each step.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data through different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured from the earlier step. It also includes the analysis of data differences observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client’s requirements.

- Market Formulation

This step entails placing data points in suitable market spaces in order to draw possible conclusions. Analyst viewpoints and examination of the market sizing by subject matter specialists also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. Validation through an intricately designed procedure helps us finalize the data points to be used for final calculations.
