Multimodal UI Market Size By Region (North America, Europe, Asia-Pacific, Latin America, Middle East and Africa), By Statistics, Trends, Outlook and Forecast 2025 to 2032 (Financial Impact Analysis)

ID : MRU_ 428078 | Date : Oct, 2025 | Pages : 253 | Region : Global | Publisher : MRU

Multimodal UI Market Size

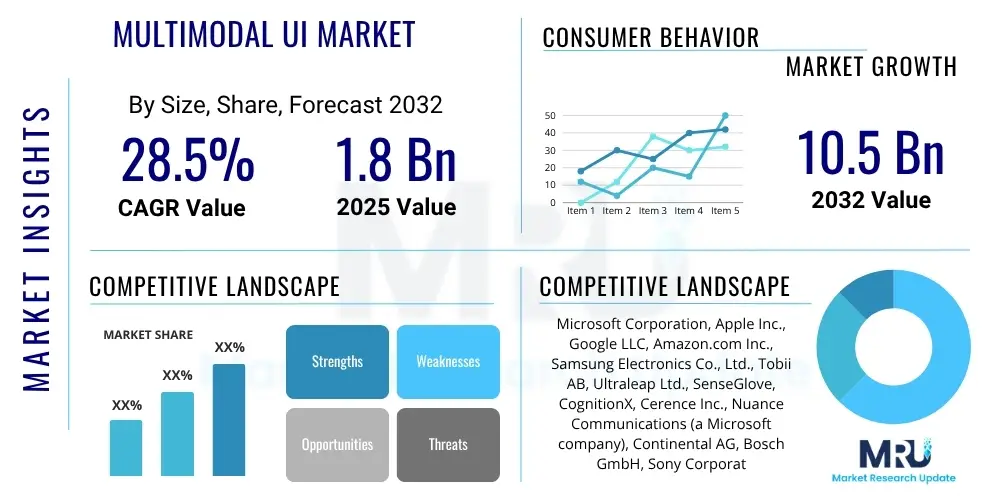

The Multimodal UI Market is projected to grow at a Compound Annual Growth Rate (CAGR) of 28.5% between 2025 and 2032. This growth reflects escalating demand for more intuitive and natural human-computer interaction, driven by continuous technological advancement and widespread adoption across diverse sectors. The market is estimated at USD 1.8 billion in 2025, a valuation underpinned by nascent but rapidly expanding applications in consumer electronics, automotive, and enterprise solutions. It is projected to reach USD 10.5 billion by the end of the forecast period in 2032, indicating a marked shift in user interface design and strong market acceptance of integrated input modalities. This expansion will be fueled by continued innovation in AI, sensor technology, and haptic feedback systems, coupled with increasing investment in smart infrastructure and immersive computing experiences. The anticipated market size in 2032 underscores the potential of multimodal interfaces to redefine how individuals and industries interact with digital environments, paving the way for ubiquitous, context-aware, and highly personalized user experiences across the globe.
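As a quick consistency check on the headline figures, the sketch below recomputes the growth rate implied by the 2025 and 2032 valuations and, separately, the 2032 value implied by the quoted 28.5% rate. The function names are illustrative, and the calculation assumes that 2025 to 2032 spans seven compounding periods; the report does not state its exact compounding convention.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value, and a span in years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report: USD 1.8 billion (2025), USD 10.5 billion (2032), 28.5% CAGR.
# Assumption (not stated in the report): 2025 -> 2032 counts as seven compounding periods.
implied_rate = cagr(1.8, 10.5, 7)
projected_2032 = project(1.8, 0.285, 7)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2032 value at 28.5%: USD {projected_2032:.2f} billion")
```

Under that assumption the implied rate comes out slightly above 28.5% and the compounded value slightly below USD 10.5 billion, so the three headline numbers agree to within rounding.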

Multimodal UI Market Introduction

The Multimodal User Interface (UI) market covers technologies that support human-computer interaction through several input modalities at once. Unlike conventional interfaces that often restrict users to a single mode such as touch or keyboard input, multimodal UIs integrate various methods, including voice commands, gestures, direct touch, gaze tracking, and haptic feedback. This approach allows users to engage with digital systems in a manner that closely mirrors natural human communication, offering greater flexibility and efficiency. Users can choose the most appropriate modality for a given task or context, or combine modalities simultaneously for complex operations, thereby enhancing both usability and user satisfaction. Robust AI algorithms and advanced sensor technologies have been instrumental in making these interactions practical across a multitude of applications. The results are visible in automotive infotainment, where voice and gesture input enhances driver safety, and in smart home environments, where users control devices through combined commands and movements.

Product offerings within this vibrant market span a wide technological spectrum, ranging from integrated hardware solutions featuring advanced sensors and processing units to sophisticated software platforms that interpret and fuse diverse input streams. Key applications are incredibly broad, touching upon critical sectors such as consumer electronics, where smartphones, wearables, and smart speakers leverage multimodal capabilities for enhanced personalization and ease of use. In healthcare, multimodal interfaces are transforming medical imaging, surgical navigation, and patient monitoring, offering sterile and precise interaction options. The automotive industry is experiencing a revolution with multimodal UIs enabling intuitive control of vehicle functions and navigation systems, paving the way for advanced driver-assistance systems (ADAS) and autonomous vehicles. Furthermore, industrial and manufacturing sectors are adopting these interfaces for complex machinery control and augmented reality-assisted maintenance, improving operational efficiency and safety. The aerospace and defense sector also benefits from advanced multimodal cockpits and simulation environments that demand high precision and reduced cognitive load for operators.

The inherent benefits of Multimodal UIs are manifold and significantly contribute to their increasing adoption. These include dramatically enhanced accessibility, catering to diverse user populations, including individuals with disabilities, by offering alternative interaction pathways. Multimodal UIs reduce cognitive load by presenting information and accepting input in the most natural and least taxing manner, thereby improving overall operational efficiency and reducing error rates in demanding environments. They foster more immersive and engaging user experiences, particularly in gaming, virtual reality (VR), and augmented reality (AR) applications, where realistic and intuitive interaction is paramount. The primary driving forces behind this market's accelerated expansion are deeply rooted in the continuous innovation cycles of artificial intelligence and machine learning, which provide the intelligence layer necessary for understanding and responding to complex multimodal inputs. Coupled with this is the ever-increasing consumer and industrial demand for seamless, natural, and context-aware human-computer interaction. Advancements in miniaturized, high-precision sensor technology and improvements in haptic feedback mechanisms further enable the creation of more sophisticated and responsive interfaces. Moreover, the burgeoning complexity of modern digital ecosystems and the proliferation of connected devices (IoT) necessitate more intuitive and versatile control mechanisms, positioning multimodal UIs as an indispensable component for future technological landscapes.

Multimodal UI Market Executive Summary

The Multimodal UI Market is currently undergoing a period of profound transformation and accelerated expansion, marked by dynamic shifts in business models, pervasive technological integration, and evolving user expectations. Business trends indicate a strong impetus towards horizontal and vertical integration, with leading technology companies acquiring specialized AI and sensor startups to consolidate their multimodal capabilities. There is a discernible shift from niche applications to mainstream adoption, driven by the desire for ubiquitous and frictionless interaction across all smart devices and digital platforms. Strategic alliances and robust partnerships between hardware manufacturers, software developers, and AI research institutions are becoming increasingly vital to develop comprehensive, end-to-end multimodal solutions that can address complex industry challenges. Furthermore, the market is witnessing an intensified focus on personalization and adaptive learning, where UIs are designed to evolve with individual user preferences and contextual changes, moving towards truly intelligent and proactive systems.

Regional trends reveal a global landscape with varying adoption rates and innovation hubs. North America and Europe continue to lead in terms of R&D investments, early market penetration, and the development of sophisticated multimodal applications, particularly in high-value sectors such as automotive, healthcare, and defense. These regions benefit from mature technological infrastructures, high disposable incomes, and a strong culture of innovation. However, the Asia Pacific (APAC) region is rapidly emerging as a dominant growth engine, propelled by its enormous consumer base, rapid digital transformation initiatives across industries, and substantial governmental investments in AI and smart city projects. Countries like China, Japan, and South Korea are at the forefront of adopting and developing multimodal solutions for consumer electronics, smart manufacturing, and retail. Latin America and the Middle East & Africa (MEA) are also showing promising signs of growth, albeit from a smaller base, driven by increasing internet penetration, smart device adoption, and infrastructure development, particularly in smart home and public sector applications.

Segment trends underscore the multifaceted nature of the Multimodal UI Market. By component, the software segment, particularly AI/ML frameworks and middleware, is exhibiting the highest growth due to the increasing sophistication required for processing and fusing diverse input data. Hardware advancements, especially in miniaturized and high-performance sensors, remain critical enablers. Technologically, voice and gesture recognition continue to dominate, but eye-tracking, haptics, and even early-stage brain-computer interfaces (BCI) are gaining traction, indicating a move towards more immersive and hands-free interaction paradigms. In terms of applications, consumer electronics and automotive remain primary revenue generators, while healthcare, industrial automation, and retail are demonstrating significant untapped potential for innovative multimodal solutions. The market is also seeing a diversification of end-users, extending beyond individual consumers to encompass large enterprises, small and medium-sized businesses (SMBs), and various governmental and public sector entities, each seeking to leverage multimodal UIs for enhanced operational efficiency, improved customer engagement, and superior user experience.

AI Impact Analysis on Multimodal UI Market

The pervasive integration of Artificial Intelligence (AI) serves as the foundational cornerstone for the evolution and expansive capabilities of the Multimodal UI market. Users frequently inquire about how AI transcends basic automation to deliver truly intelligent and adaptive interactions, questioning its role in deciphering complex human intent, managing data privacy across diverse input streams, and ensuring seamless contextual understanding. There is a strong user expectation that AI should not merely process inputs but proactively anticipate needs, learn individual preferences over time, and provide personalized, highly relevant responses without explicit commands. Concerns often center on the accuracy and latency of AI models in real-world, dynamic environments, particularly when integrating multiple, potentially ambiguous inputs. Furthermore, the ethical implications of AI-driven data collection from various modalities and its potential for bias in interpretation remain critical points of discussion among developers and end-users alike. The core theme emerging from these discussions is the transformative power of AI to elevate Multimodal UIs from a collection of discrete input methods to a cohesive, intelligent, and human-centric interaction paradigm that fundamentally changes the nature of user engagement with technology.

AI's influence is profound, enabling systems to move beyond simple rule-based responses to complex, nuanced understanding. Through sophisticated machine learning models, multimodal UIs can interpret subtle cues from various sources, such as detecting emotion from voice tone, understanding user focus from gaze direction, or inferring intent from a combination of gestures and spoken words. This level of interpretation is crucial for creating truly natural and effective interfaces that adapt to human behavior rather than forcing humans to adapt to machines. Deep learning techniques, especially in natural language processing (NLP) and computer vision, have dramatically improved the accuracy and robustness of these systems, allowing for real-time processing of high-dimensional data streams. This intelligence layer is not just about recognition; it's about synthesis, correlation, and prediction, transforming fragmented inputs into a unified understanding of user intent and context. As AI continues to advance, multimodal UIs will become even more predictive, proactive, and personalized, anticipating user needs before they are explicitly articulated, and seamlessly guiding interactions across an increasing array of devices and environments, from smart homes to highly automated industrial settings.

- AI enables sophisticated natural language processing (NLP) for voice commands, dramatically improving accuracy, understanding of complex queries, and conversational nuances, facilitating seamless verbal interaction.

- Machine learning algorithms significantly enhance gesture recognition capabilities, allowing for the precise interpretation of subtle, natural hand movements and body language, reducing reliance on explicit commands.

- Predictive AI analyzes user behavior across all integrated modalities, including past interactions, current context, and environmental data, to anticipate needs and offer proactive assistance, streamlining tasks and improving overall system efficiency.

- Contextual awareness, driven by AI, integrates diverse data streams from various sensors (e.g., location, time, environmental factors, biometric data) with multimodal inputs to provide highly personalized, situationally relevant, and adaptive responses.

- AI facilitates adaptive learning mechanisms, allowing multimodal UIs to continuously improve their understanding of individual user preferences, accents, interaction styles, and even emotional states over time, leading to increasingly personalized and intuitive user experiences.

- Deep learning models power advanced facial recognition and emotion detection, enabling UIs to gauge user engagement, frustration, or confusion, and adapt responses or offer assistance accordingly, fostering more empathetic interactions.

- AI-driven fusion engines intelligently combine data from disparate input modalities (e.g., voice, gesture, gaze) to resolve ambiguities, infer deeper user intent, and provide a more robust and coherent understanding of complex commands than any single modality could offer.
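The fusion step described in the last point can be illustrated with a small sketch. This is not a description of any vendor's engine: it shows one simple technique, confidence-weighted late fusion, in which each recognizer proposes candidate intents with a confidence score and the scores are summed per intent. The `Hypothesis` class, the `fuse` function, the intent names, and the modality weights are all hypothetical values chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    modality: str      # e.g. "voice", "gesture", "gaze"
    intent: str        # candidate interpretation of the input
    confidence: float  # recognizer confidence in [0, 1]


def fuse(hypotheses: list[Hypothesis], weights: dict[str, float]) -> str:
    """Return the intent with the highest weighted confidence summed across modalities.

    Modalities missing from `weights` default to a weight of 1.0.
    Raises ValueError if `hypotheses` is empty.
    """
    scores: dict[str, float] = defaultdict(float)
    for h in hypotheses:
        scores[h.intent] += weights.get(h.modality, 1.0) * h.confidence
    return max(scores, key=scores.get)


# Hypothetical scenario: voice alone slightly prefers the floor lamp,
# but the user's gaze rests on the desk lamp.
observations = [
    Hypothesis("voice", "turn_on_desk_lamp", 0.45),
    Hypothesis("voice", "turn_on_floor_lamp", 0.55),
    Hypothesis("gaze", "turn_on_desk_lamp", 0.80),
]
weights = {"voice": 1.0, "gaze": 0.5}  # illustrative weights, not tuned values

best_intent = fuse(observations, weights)
print(best_intent)
```

Here the gaze evidence outweighs the small margin in the voice hypotheses, so the fused result is the desk lamp. Production systems typically learn such weights, or replace the sum with a trained model, rather than fixing them by hand.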

DRO & Impact Forces Of Multimodal UI Market

The Multimodal UI Market is driven by a powerful confluence of factors, primarily the surging demand for more intuitive and natural human-computer interaction (HCI) paradigms. Traditional single-mode interfaces often impose cognitive burdens or limit accessibility, whereas multimodal systems offer a richer, more flexible, and inherently human-like interaction experience. This fundamental shift in user expectation is a core driver. Furthermore, the exponential advancements in Artificial Intelligence (AI) and Machine Learning (ML), particularly in areas such as natural language processing, computer vision, and predictive analytics, serve as crucial enablers, providing the intelligence layer necessary for multimodal systems to understand and synthesize diverse inputs seamlessly. The proliferation of smart devices, the ubiquitous spread of the Internet of Things (IoT), and the rapid evolution of augmented and virtual reality (AR/VR) technologies create vast ecosystems that inherently benefit from and demand sophisticated multimodal interfaces for intuitive control and immersive experiences. Growing awareness of accessibility issues also pushes for multimodal solutions that cater to a broader range of users, including those with physical limitations, fostering inclusive design principles across technology development.

Despite these strong tailwinds, the market faces several notable restraints that could temper its growth. A significant challenge lies in the substantial development costs associated with integrating diverse technologies and ensuring seamless, real-time performance across multiple modalities. The complexity involved in synchronizing various input streams – such as simultaneous voice commands and gestures – and fusing their data intelligently without ambiguity requires sophisticated algorithms and robust engineering. Furthermore, data privacy concerns pose a considerable restraint; multimodal UIs often collect extensive and highly personal user data (voice patterns, biometric data from gestures or gaze, location), necessitating stringent security measures and clear ethical guidelines to build user trust. Technical limitations such as latency in processing multiple inputs, potential for misinterpretation across modalities, and the risk of increased cognitive load if interfaces are poorly designed, also present hurdles. The absence of universal standards for multimodal interaction development can fragment the market and increase integration challenges for developers and manufacturers seeking interoperability.
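To make the synchronization challenge concrete, the following sketch pairs voice and gesture events that occur close together in time. It assumes that both streams are already timestamped on a shared clock and that a fixed tolerance window is acceptable; the `Event` class, the `pair_events` function, the 0.5-second window, and the sample events are illustrative assumptions rather than figures from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    modality: str
    payload: str
    timestamp: float  # seconds on a shared clock (assumed already synchronized)


def pair_events(
    voice: list[Event], gestures: list[Event], window: float = 0.5
) -> list[tuple[Event, Event]]:
    """Pair each voice event with the nearest unused gesture within `window` seconds."""
    pairs: list[tuple[Event, Event]] = []
    used: set[int] = set()
    for v in voice:
        best_index = None
        best_gap = window
        for i, g in enumerate(gestures):
            gap = abs(g.timestamp - v.timestamp)
            if i not in used and gap <= best_gap:
                best_index, best_gap = i, gap
        if best_index is not None:
            used.add(best_index)
            pairs.append((v, gestures[best_index]))
    return pairs


# Hypothetical input streams.
voice_events = [Event("voice", "put that there", 10.20), Event("voice", "undo", 14.00)]
gesture_events = [Event("gesture", "point:shelf", 10.35), Event("gesture", "swipe_left", 12.00)]

paired = pair_events(voice_events, gesture_events)
for v, g in paired:
    print(f"{v.payload!r} <-> {g.payload!r}")
```

Only the first voice command finds a gesture inside the window; the second is left unpaired and would have to be interpreted from speech alone. Even this toy version shows where ambiguity and latency enter: the window size trades missed pairings against false ones, and the system cannot commit to a pairing until the window has elapsed.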

Opportunities within the Multimodal UI Market are vast and largely untapped, particularly in the development of specialized applications for niche markets where traditional interfaces fall short. This includes precision control in advanced robotics, intuitive interfaces for complex medical diagnostics and surgical procedures, and highly adaptive assistive technologies for individuals with disabilities, offering significant social and economic impact. The ongoing standardization of multimodal interaction protocols and the expansion of robust developer ecosystems, complete with readily available SDKs and APIs, will significantly lower entry barriers and accelerate innovation, enabling a broader range of companies to integrate multimodal capabilities. Furthermore, the increasing adoption of edge computing, which allows for processing multimodal data closer to the source, reduces latency and enhances privacy, opening new avenues for real-time and secure applications. The convergence of 5G networks with multimodal UIs will unlock ultra-low latency applications, crucial for mission-critical systems and highly immersive AR/VR experiences.

The impact forces are substantial, driving a fundamental paradigm shift in user experience design across nearly every industry. This transformation fosters intense competition among technology giants to deliver the most seamless, intelligent, and human-centric interaction platforms. Concurrently, regulatory frameworks concerning data privacy (e.g., GDPR, CCPA) and security will exert an increasingly influential force, shaping market development, dictating data handling practices, and ultimately influencing consumer adoption patterns, necessitating a proactive and ethical approach to multimodal UI design and deployment.

Segmentation Analysis

The Multimodal UI market is characterized by a rich and intricate segmentation, reflecting the diverse technological components, underlying technologies, varied applications across industries, and the distinct needs of end-users. This comprehensive segmentation provides a crucial framework for stakeholders to deeply understand market dynamics, identify high-growth niches, and strategically tailor product development and marketing initiatives. Analyzing these segments helps in pinpointing specific opportunities for investment, navigating the competitive landscape, and forecasting future innovation trajectories. The market's segmentation by Component, Technology, Application, and End-User reveals a complex interplay of hardware, software, and services that collectively enable the sophisticated interactions characteristic of multimodal interfaces. Each segment possesses unique drivers and challenges, demanding tailored solutions and strategic approaches to harness their full potential. Understanding these distinctions is paramount for effective market penetration and sustained growth in this rapidly evolving domain.

For instance, the segmentation by Component highlights the fundamental building blocks of multimodal systems, from the physical sensors and processing units to the intelligent software that orchestrates and interprets inputs, and the critical services that ensure seamless integration and ongoing support. The Technology segmentation, on the other hand, delves into the specific methods of interaction, such as voice recognition or haptics, each with its own set of technical complexities and application strengths. The Application segment illustrates the widespread utility of multimodal UIs across various industries, demonstrating how these interfaces address unique challenges in fields as diverse as healthcare and automotive. Finally, the End-User segmentation categorizes the ultimate beneficiaries, distinguishing between the sophisticated requirements of enterprise clients and the intuitive needs of individual consumers, allowing for targeted product design and deployment strategies that maximize user adoption and satisfaction across different demographic and professional contexts.

- By Component: This segment differentiates between the tangible and intangible elements crucial for multimodal system functionality.
  - Hardware: Includes the physical devices and components that capture, process, and deliver multimodal interactions, such as advanced sensors (cameras, microphones, depth sensors), specialized processors (GPUs, NPUs), high-resolution displays, and haptic actuators for tactile feedback.
  - Software: Comprises the intelligent algorithms and platforms that enable multimodal processing, including AI/ML frameworks (for NLP, computer vision), application programming interfaces (APIs) and software development kits (SDKs) for integration, middleware for data fusion, and application-specific software for various use cases.
  - Services: Encompasses the support and expertise required for deploying and maintaining multimodal solutions, such as integration services, consulting services for design and implementation, and ongoing maintenance and technical support to ensure optimal performance.

- By Technology: This segmentation categorizes the distinct interaction methods employed within multimodal UIs.
  - Voice Recognition: Utilizes natural language processing to interpret spoken commands, queries, and conversational inputs, often with sentiment analysis, for hands-free operation.
  - Gesture Recognition: Involves the interpretation of hand, body, or facial movements via optical sensors, enabling intuitive control and interaction without physical contact.
  - Eye Tracking: Monitors a user's gaze direction and pupil movements to understand focus, intent, and facilitate selection or navigation, particularly useful in sterile environments or for accessibility.
  - Haptics: Provides tactile feedback through vibrations, force, or texture simulation, enhancing the realism and intuitiveness of virtual interactions and confirming commands.
  - Brain-Computer Interface (BCI): An emerging technology that directly interprets neural signals to control devices, offering profound potential for accessibility and advanced interaction in specialized fields.
  - Facial Recognition: Identifies individuals and interprets facial expressions to understand emotions or confirm identities, contributing to personalized and secure multimodal experiences.
  - Mixed Reality (AR/VR) Interactions: Combines virtual and real-world elements, using multimodal inputs to navigate and interact within immersive augmented and virtual environments.

- By Application: This segment details the diverse industries and use cases where Multimodal UIs are deployed.
  - Consumer Electronics: Integration into smartphones, smart speakers, wearables, smart TVs, and gaming consoles for enhanced user experience and hands-free control.
  - Automotive: Employed in in-vehicle infotainment systems, navigation, climate control, and advanced driver-assistance systems (ADAS) for safer and more intuitive vehicle interaction.
  - Healthcare: Used in medical imaging systems, surgical navigation, patient monitoring, robotic surgery, and prosthetic control, offering precision and sterile interaction options.
  - Retail & E-commerce: Implemented in interactive kiosks, smart mirrors, personalized shopping assistants, and augmented reality try-on experiences to enhance customer engagement.
  - Industrial & Manufacturing: Utilized for human-robot collaboration, predictive maintenance via AR, control of complex machinery, and quality control systems, improving efficiency and safety.
  - Aerospace and Defense: Applied in cockpit controls, flight simulators, drone operation, and advanced training systems, requiring highly reliable and efficient interfaces.
  - Smart Home & Building Automation: Enables intuitive control of lighting, climate, security systems, and appliances through voice, gesture, and contextual awareness.
  - Education: Used in interactive learning platforms, language acquisition tools, and virtual laboratories to create more engaging and accessible educational content.

- By End-User: This segmentation differentiates between the primary types of organizations and individuals adopting multimodal UI solutions.
  - Enterprises: Businesses of all sizes (SMEs and Large Enterprises) adopting multimodal UIs for internal operations, customer service, employee training, and specialized industrial applications to boost productivity and innovation.
  - Individual Consumers: Everyday users integrating multimodal devices and applications into their personal lives for entertainment, communication, smart home management, and personal assistance.
  - Government & Public Sector: Governmental bodies and public institutions leveraging multimodal UIs for smart city initiatives, public safety, defense, and enhanced citizen services.

Value Chain Analysis For Multimodal UI Market

The value chain of the Multimodal UI Market is an intricate ecosystem, characterized by deep interdependencies and specialized expertise at each stage, from fundamental component manufacturing to final end-user delivery and ongoing support. Understanding this chain is crucial for identifying key players, potential bottlenecks, and opportunities for strategic collaboration and differentiation. The upstream segment of the value chain is fundamentally driven by innovation in core technological enablers. This includes a diverse array of component manufacturers providing sophisticated sensor technologies such as high-resolution cameras for advanced computer vision and gesture recognition, sensitive omnidirectional microphones for precise voice capture, depth sensors for 3D spatial awareness, and advanced haptic actuators for delivering realistic tactile feedback. Furthermore, this segment involves semiconductor companies developing specialized processors like Graphics Processing Units (GPUs) and Neural Processing Units (NPUs) optimized for real-time AI workloads, which are essential for processing the vast amounts of data generated by multimodal inputs. Material science firms also contribute significantly by developing advanced display technologies, flexible electronics, and tactile surfaces that enhance the physical interaction points. Crucially, the upstream also includes leading AI research institutions and software companies that innovate in core algorithms for natural language processing, machine learning, computer vision, and data fusion, which together form the intelligence layer interpreting human intent from disparate inputs.

The downstream segment of the value chain focuses on the integration, application development, and delivery of complete multimodal solutions to end-users. This stage involves product developers and system integrators who take the core hardware and software components and combine them into functional, user-centric products and services across various industries. For instance, automotive OEMs integrate multimodal UIs into vehicle cockpits, consumer electronics manufacturers embed them into smart devices, and healthcare technology providers incorporate them into medical equipment. These integrators are responsible for designing the overall user experience, ensuring seamless operation, and customizing solutions to meet specific industry requirements, often collaborating closely with end-users to refine functionalities. Furthermore, this segment includes software developers creating specific applications (apps) that leverage multimodal capabilities, as well as testing and validation services that ensure the reliability, accuracy, and security of the integrated systems before deployment. The success of the downstream activities heavily relies on the quality and interoperability of the upstream components and intellectual property.

Distribution channels for Multimodal UI products and services are diverse, catering to the varied nature of the market. Direct sales channels are prevalent for large enterprise clients and Original Equipment Manufacturers (OEMs), where complex, customized solutions require direct engagement, extensive consultation, and bespoke integration services. This ensures a deep understanding of client-specific needs and facilitates tailored deployments. Conversely, indirect channels, such as a global network of distributors, resellers, and value-added integrators, are crucial for achieving broader market reach, particularly for standardized multimodal components, software licenses, and developer tools. Online digital marketplaces and app stores also play a significant role, providing platforms for software components, SDKs, and end-user applications. The co-existence of both direct and indirect models allows companies to strategically engage with different customer segments, from highly specialized industrial clients requiring custom solutions to mass-market consumers seeking off-the-shelf multimodal devices. This multi-channel approach is critical for maximizing market penetration, fostering widespread adoption, and ensuring that advanced multimodal capabilities are accessible to a diverse range of users across various geographical and industrial landscapes.

Multimodal UI Market Potential Customers

The Multimodal UI Market targets a vast and increasingly diverse array of potential customers across virtually every sector of the global economy, driven by a universal desire for more intuitive, efficient, and accessible technological interaction. The primary end-users and buyers of these advanced products and services include a wide spectrum of enterprises, individual consumers, and governmental entities, each with unique motivations and requirements. In the enterprise sector, automotive manufacturers represent a significant customer base, consistently seeking to enhance in-car experiences with cutting-edge voice and gesture controls for infotainment, navigation, and comfort functions, thereby improving both driver safety and passenger satisfaction. Healthcare providers are increasingly adopting multimodal UIs for applications requiring high precision and sterile environments, such as touchless interfaces in operating rooms, advanced surgical assistance systems that respond to voice and gaze, and sophisticated patient monitoring devices that integrate various biometric inputs for real-time analysis. Industrial and manufacturing companies are also pivotal customers, leveraging multimodal interfaces to improve operational efficiency, enhance worker safety through hands-free control of robotics and machinery, and facilitate augmented reality-assisted maintenance and quality control processes. These enterprise customers value multimodal solutions for their ability to streamline workflows, reduce errors, and foster innovation within their operational frameworks, leading to tangible returns on investment.

Beyond the enterprise realm, individual consumers constitute a massive and rapidly expanding segment of potential customers. This includes a broad demographic that integrates multimodal capabilities into their daily lives through smartphones, smart home devices, wearables, and personal entertainment systems. The appeal for consumers lies in the promise of seamless, personalized digital experiences that allow for natural interaction with technology – whether it's through simple voice commands to control smart speakers, intuitive gestures to navigate a smartwatch, or a combination of inputs to manage a comprehensive smart home ecosystem. As technology becomes more embedded in everyday life, the demand for user interfaces that adapt to human behavior, rather than the other way around, grows exponentially. Consumers are increasingly seeking devices that can anticipate their needs, learn their preferences, and offer truly effortless interaction, making multimodal UIs an indispensable feature for future consumer electronics.

Furthermore, the education sector is exploring multimodal interfaces for creating more engaging and accessible learning experiences, from interactive

| Report Attributes | Report Details |
|---|---|
| Market Size in 2025 | USD 1.8 billion |
| Market Forecast in 2032 | USD 10.5 billion |
| Growth Rate | 28.5% CAGR |
| Historical Year | 2019 to 2023 |
| Base Year | 2024 |
| Forecast Year | 2025 - 2032 |
| DRO & Impact Forces | |
| Segments Covered | |
| Key Companies Covered | Microsoft Corporation, Apple Inc., Google LLC, Amazon.com Inc., Samsung Electronics Co., Ltd., Tobii AB, Ultraleap Ltd., SenseGlove, CognitionX, Cerence Inc., Nuance Communications (a Microsoft company), Continental AG, Bosch GmbH, Sony Corporation, Meta Platforms Inc., NVIDIA Corporation, Intel Corporation, PWC (Haptics), Accenture, Capgemini |
| Regions Covered | North America, Europe, Asia Pacific (APAC), Latin America, Middle East and Africa (MEA) |

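
The headline figures in the report attributes (USD 1.8 billion in 2025, USD 10.5 billion in 2032, 28.5% CAGR) can be cross-checked with the standard compound-growth formula. The sketch below is illustrative only and assumes seven compounding periods between 2025 and 2032; the report does not state the period count it used, and the function names are hypothetical.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and period count."""
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached by compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** periods


# Assumption: 2025 -> 2032 is treated as 7 annual compounding periods.
PERIODS = 7

implied_rate = cagr(1.8, 10.5, PERIODS)        # growth rate implied by the two market sizes
projected_2032 = project(1.8, 0.285, PERIODS)  # market size implied by the stated 28.5% CAGR

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"Projected 2032 market size: USD {projected_2032:.2f} billion")
```

Under that seven-period assumption, both results land close to the reported figures, which suggests the three numbers are mutually consistent to within rounding.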

Multimodal UI Market Key Technology Landscape

The Multimodal UI market is defined by a continually evolving technological landscape in which foundational and emerging innovations converge to enable sophisticated, intuitive human-computer interaction. At its core, this landscape relies on advanced sensor technologies, which capture diverse human inputs. These include high-resolution, low-light cameras capable of precise gesture and facial recognition, alongside arrays of sensitive microphones designed for accurate voice input and natural language understanding even in noisy environments. Depth sensors provide 3D spatial awareness for accurate hand and body tracking, while specialized haptic actuators deliver realistic and nuanced tactile feedback, enriching the sensory experience and confirming user actions. These hardware components depend on the intelligence layer provided by Artificial Intelligence (AI) and Machine Learning (ML) algorithms, which process, interpret, and fuse the streams of raw data generated by these sensors. AI models, particularly deep learning networks, enable natural language processing, computer vision for object and gesture detection, and predictive analytics that anticipate user intent, transforming raw input into actionable commands.
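
To make the idea of fusing input streams concrete, here is a minimal sketch of late fusion, in which a recognized voice intent and a recognized gesture target are combined into one command when they occur close together in time. It is not drawn from any vendor's SDK: the `InputEvent` type, the `fuse` function, the 1.5-second window, and the confidence threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InputEvent:
    modality: str      # e.g. "voice" or "gesture"
    value: str         # recognized intent (voice) or pointed-at target (gesture)
    confidence: float  # recognizer confidence in [0, 1]
    timestamp: float   # seconds since an arbitrary start


def fuse(voice: InputEvent, gesture: InputEvent,
         window_s: float = 1.5, min_confidence: float = 0.6) -> Optional[dict]:
    """Combine a voice intent with a gesture target if they are close in time.

    Returns None when the events are too far apart or the joint confidence is too low.
    """
    if abs(voice.timestamp - gesture.timestamp) > window_s:
        return None  # events are unlikely to belong to the same user action
    joint_confidence = voice.confidence * gesture.confidence
    if joint_confidence < min_confidence:
        return None  # too uncertain to act on
    return {"action": voice.value, "target": gesture.value, "confidence": joint_confidence}


# Hypothetical example: the user says "turn on" while pointing at a lamp.
voice_event = InputEvent("voice", "turn_on", 0.92, timestamp=10.0)
gesture_event = InputEvent("gesture", "living_room_lamp", 0.85, timestamp=10.4)

command = fuse(voice_event, gesture_event)
print(command)
```

Multiplying the two confidences is only one simple way to score a fused command. Production systems may instead use learned fusion models that weigh modalities by context.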

Beyond the fundamental hardware and AI, the technological landscape is bolstered by robust software development kits (SDKs) and application programming interfaces (APIs) that provide developers with the essential tools and frameworks to seamlessly integrate various modalities into cohesive and functional applications. These tools abstract away much of the underlying complexity, accelerating development cycles and fostering broader adoption. Edge computing is rapidly gaining prominence within this ecosystem, representing a crucial architectural shift. By enabling the processing of multimodal data closer to the source (e.g., directly on a device rather than in a distant cloud server), edge computing significantly reduces latency, enhances responsiveness, and improves data privacy by minimizing the transmission of sensitive information. This is particularly critical for real-time applications such as autonomous vehicles, industrial control systems, and augmented reality where immediate feedback is non-negotiable. Furthermore, advanced display technologies, including transparent, flexible, and volumetric displays, coupled with sophisticated projection mapping, enhance the visual dimension of multimodal interactions, creating more immersive and accessible interfaces. The integration of high-bandwidth, low-latency communication protocols, such as 5G, is also becoming increasingly vital, ensuring that distributed multimodal systems can operate synchronously and reliably.

Emerging technologies are continually pushing the boundaries of what multimodal UIs can achieve, promising even more profound transformations in human-computer interaction. Brain-Computer Interfaces (BCI), while still largely in nascent stages for mainstream applications, offer entirely new paradigms for interaction by directly interpreting neural signals, holding immense potential for accessibility, medical applications, and highly specialized control systems. Advanced haptic feedback devices, including those employing ultrasonic waves for mid-air haptics, are creating touchable interfaces without physical contact, broadening the scope of immersive experiences. The convergence of these sophisticated technologies, coupled with ongoing interdisciplinary research in human-computer interaction (HCI), cognitive science, and user psychology, is continuously driving innovation. This sustained technological evolution is leading to the creation of UIs that are not only more intuitive and adaptive but also context-aware, capable of understanding and responding to human intent with unprecedented accuracy and personalization. This rich tapestry of technological advancements ensures that the Multimodal UI market will remain a hotbed of innovation, consistently redefining the frontier of digital interaction for years to come.

Regional Highlights

- North America: This region stands as a dominant force in the Multimodal UI Market, characterized by its robust technological infrastructure, a high concentration of leading technology companies, and substantial investments in research and development. The United States, in particular, drives significant innovation, with early adoption across the automotive sector for advanced in-vehicle infotainment and driver assistance systems, in consumer electronics with prominent smart home devices and wearables, and within the burgeoning healthcare technology segment. Strong governmental support for AI and IoT initiatives, coupled with a high demand for cutting-edge user experiences and accessibility solutions, further accelerates market growth and penetration. Canada also contributes significantly with its strong research ecosystem and growing tech industry.

- Europe: Europe represents another key market, showcasing steady and robust growth, often driven by stringent safety regulations in the automotive industry, advanced manufacturing capabilities, and a pronounced focus on digital inclusion and accessibility in public and private sector digital interfaces. Countries like Germany are at the forefront of integrating multimodal solutions into industrial automation and advanced robotics, capitalizing on their strong industrial base. The UK is a hub for AI and software development, contributing significantly to multimodal software platforms. France and Nordic countries demonstrate high adoption rates in smart home technologies and consumer electronics. The region's emphasis on data privacy and ethical AI development also shapes the market, pushing for secure and transparent multimodal solutions.

- Asia Pacific (APAC): The APAC region is poised to be the fastest-growing market globally for Multimodal UIs, primarily fueled by rapid urbanization, a burgeoning middle class with increasing disposable incomes, and the widespread proliferation of smart devices and connected ecosystems. China is a major powerhouse, leading in AI investments, smart city initiatives, and the rapid deployment of multimodal solutions in consumer electronics, retail, and smart manufacturing, supported by a vast domestic market and robust manufacturing capabilities. Japan and South Korea are pioneers in advanced robotics, automotive technology, and immersive computing (AR/VR), creating significant demand for sophisticated multimodal interfaces. India's rapidly growing digital economy and substantial investments in IT infrastructure also present immense opportunities, particularly for voice-enabled multimodal UIs due to linguistic diversity.

- Latin America: This region is an emerging market for Multimodal UIs, witnessing progressive growth driven by increasing internet penetration, expanding smartphone adoption, and growing investments in digital transformation initiatives across various industries. Countries such as Brazil and Mexico are leading the charge, with nascent but growing adoption in consumer electronics, automotive, and smart retail sectors. The demand for localized and accessible digital experiences also fuels the need for voice and gesture-based interfaces. As economic development continues and technological awareness rises, Latin America is expected to present significant growth opportunities for companies offering adaptable and cost-effective multimodal solutions.

- Middle East and Africa (MEA): The MEA market for Multimodal UIs is characterized by gradual but steady growth, largely spurred by ambitious smart city projects in the UAE and Saudi Arabia, diversification efforts away from traditional oil-based economies, and a rising technological awareness among the younger population. Investments in healthcare, tourism, and smart infrastructure are driving the demand for advanced interactive systems, particularly in public spaces and premium consumer segments. The region's focus on innovative urban development and digital services creates a fertile ground for multimodal technologies, though challenges related to infrastructure development and cultural context-specific adaptations remain key considerations for market players.

Top Key Players

The market research report includes a detailed profile of leading stakeholders in the Multimodal UI Market, recognizing their significant contributions through innovation, product development, strategic partnerships, and market penetration across various segments. These companies are instrumental in shaping the technological landscape and driving the adoption of multimodal interfaces globally.

- Microsoft Corporation

- Apple Inc.

- Google LLC

- Amazon.com Inc.

- Samsung Electronics Co., Ltd.

- Tobii AB

- Ultraleap Ltd.

- SenseGlove

- CognitionX

- Cerence Inc.

- Nuance Communications (a Microsoft company)

- Continental AG

- Bosch GmbH

- Sony Corporation

- Meta Platforms Inc.

- NVIDIA Corporation

- Intel Corporation

- PWC (Haptics, Consulting & Solutions)

- Accenture (Integration & Consulting Services)

- Capgemini (Digital Transformation & IT Services)

Frequently Asked Questions

What is Multimodal UI and how does it differ from traditional interfaces?

Multimodal UI (User Interface) enables interaction with a system through multiple input methods like voice, gesture, touch, and gaze, simultaneously or sequentially. It differs from traditional single-mode interfaces by offering a more natural, intuitive, and flexible user experience that mimics human communication, enhancing accessibility and efficiency.

How does Artificial Intelligence (AI) specifically contribute to the effectiveness of Multimodal UIs?

AI is fundamental to Multimodal UIs, significantly enhancing their effectiveness by powering advanced natural language processing for voice commands, improving accuracy in gesture and facial recognition, enabling predictive analytics for user intent, and creating context-aware, adaptive systems that personalize interactions and seamlessly fuse diverse input streams.

What are the most promising industries for the adoption and growth of Multimodal UI technology?

The most promising industries for Multimodal UI adoption and growth include automotive for in-vehicle systems, consumer electronics for smart devices, healthcare for sterile and precise interactions, industrial automation for enhanced operational control, and smart home/building sectors for intuitive automation, all driven by demand for advanced user experiences.

What are the key challenges faced during the development and implementation of Multimodal UIs?

Key challenges in Multimodal UI development involve the high costs of integrating diverse advanced technologies, ensuring seamless synchronization and data fusion across multiple input modalities, addressing significant data privacy and security concerns stemming from extensive user data collection, and designing interfaces to prevent increased cognitive load for users.

What future trends and technologies are expected to impact the Multimodal UI Market?

Future trends include further advancements in AI (e.g., explainable AI, federated learning), broader adoption of edge computing for lower latency, integration with 5G for enhanced connectivity, greater personalization and adaptive learning, and the emergence of advanced haptics and early-stage Brain-Computer Interfaces (BCI), all contributing to more immersive and intuitive digital interactions.

To check our Table of Contents, please mail us at: sales@marketresearchupdate.com

Research Methodology

Market Research Update integrates technology-driven solutions into every step of its research process. We use diverse assets to produce the best results for our clients. The success of a research project depends on the research process the company adopts. Market Research Update helps its clients recognize opportunities by examining the global market and offering economic insights. We are proud of our extensive coverage, which spans numerous major industry domains.

Market Research Update provides consistency across its research reports and offers forecast analysis across a range of geographies and coverage areas. The research teams carry out primary and secondary research to design and implement the data collection procedure. The research team then analyzes data on the latest trends and major issues for each industry and country. This helps to anticipate future market developments.

The Company's Research Process Has the Following Advantages:

- Information Procurement

This step comprises the procurement of market-related information or data via different methodologies and sources.

- Information Investigation

This step comprises the mapping and investigation of all the information procured from the earlier step. It also includes the analysis of data differences observed across numerous data sources.

- Highly Authentic Source

We offer highly authentic information from numerous sources to fulfill the client's requirements.

- Market Formulation

This step entails placing data points in the appropriate market spaces in order to draw possible conclusions. Analyst viewpoints and subject-matter-expert review of the market sizing also play an essential role in this step.

- Validation & Publishing of Information

Validation is a significant step in the procedure. Validation through a carefully designed procedure helps us settle on the data points used for final calculations.
